Well, with all this (Hyper-V) clustering, virtualization, System Center suite, Exchange 2010 & Lync, SQL Server and iSCSI demand on my lab network, I really need to refresh my hardware. It sounds a bit like a paradox, but such is life for the people building all this stuff. Yes, they still need some hardware, pretty beefy machines actually, to set it all up, test it, break it, fix it and keep learning. I’ve exhausted my four-year-old lab machines, in which I can’t put more than 4 GB of RAM. Now that I have finished all my infrastructure projects for 2010, I have time to focus on improving my old setup. Or at least I hope so. Things are very busy.

Thanks to the W2K8R2 SP1 beta I could use Dynamic Memory, which helped me keep churning away with these and various Exchange setups, but now with Lync coming into the picture I want and need an upgrade. A couple of SQL Servers in various high availability setups eat up any remaining resources. Add to that the fact that I want to do some private cloud testing, and there it is.

I need hosts with at least an Intel quad-core (i7) processor and at least 16 GB of DDR3 memory. They should have room for extra NICs. And I always try to get some speedy disks where it matters. Now since Windows Server 2008 R2 added support for Second Level Address Translation (SLAT), which Intel calls Extended Page Tables (EPT) and which AMD calls Nested Page Tables (NPT) or Rapid Virtualization Indexing (RVI), we can make use of better graphics cards. Until now none of my processors had SLAT support. With the Intel i7 (Nehalem) processor I’m good to go. All the machines in my lab are Intel, so I’m sticking with Intel, as Hyper-V migrations don’t work between processor brands.

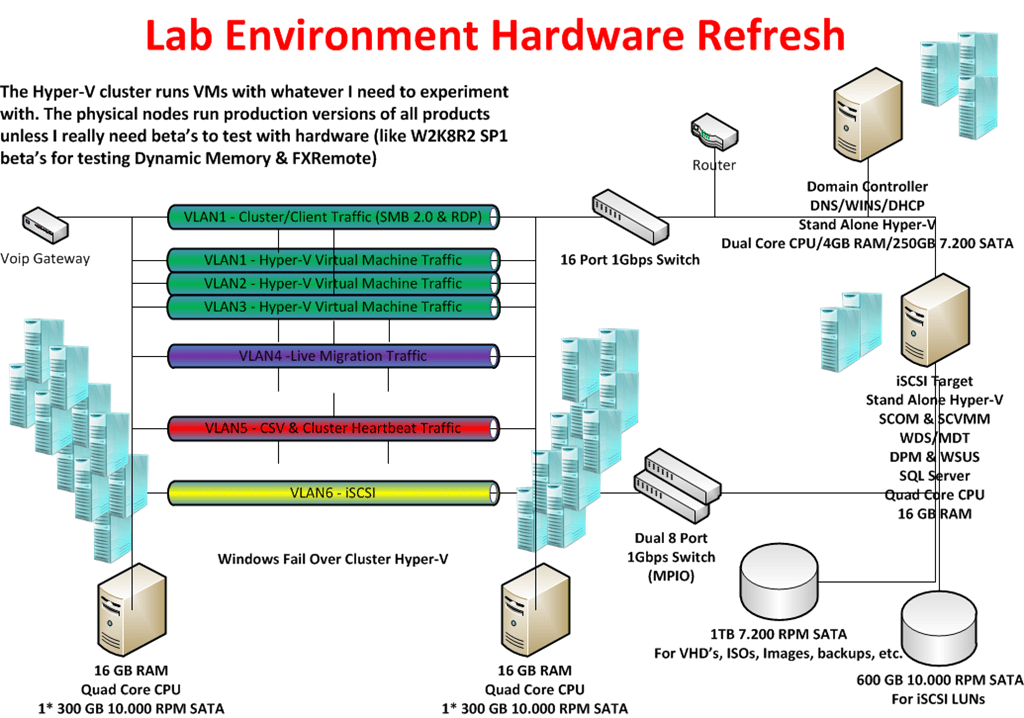

So here’s a logical overview of my setup. This is what I already have in place with my current hardware, but I have now drawn it with my coveted hardware refresh. Oh, yes, the dual 1 Gbps switches for iSCSI are new for this setup. I’m adding one so I can play with MPIO in the lab.

For disks I use 300 GB (16 MB cache, 10,000 rpm) and 600 GB (32 MB cache, 10,000 rpm) Raptors in combination with an external eSATA 1 TB/2 TB Western Digital Black disk for storage of VHDs, images, backups etc. I have to buy some extra now. The faster disks are expensive, but a lab environment needs some performance, as waiting around for servers & virtual machines becomes a major annoyance when you need to get work done. The 10,000 rpm disks are great for iSCSI storage, for which I use the iSCSI Target from Windows Storage Server 2008 R2 via my TechNet subscription.

All this kit should keep me up and running from 2011 until the end of 2014. Is this expensive? Yes and no. I can reuse my 1 Gbps Intel NICs and most of my hard disks. I already have my network switches, monitors and KVM switches. So all in all it’s the new motherboards, CPUs and memory that will eat most of the budget. It’s quite a sum to put out, but here’s a note to all IT Pros out there: you need to invest in yourself every now and then.

I’ve blogged about this before in https://blog.workinghardinit.work/2010/02/04/having-a-lab-using-it/. Self-improvement and learning are a continuous process that never ends. Sure, it has some peak moments in financial cost when you need equipment. Remember, you don’t need to buy it all at once. Talk to your employer about this if you’re not self-employed. Look at how much a 5-day advanced course or a conference costs. You can use a lab to learn and experiment for many years to come, so the potential ROI is very good. In the end, what my employers and customers get out of this is knowledge, insight, skills and results. Think about it; it helps to put the investment in perspective. Sure, I invest more than just the hardware: there is also my time, which is very valuable to me. You can’t make more time; everyone has the same 24 hours in a day. Now it really helps if you like this stuff and have fun whilst learning new technologies or setting up a proof of concept. In a way, what people put into their job and knowledge is an indicator of their professionalism. You do not become an expert by working 9 to 5 and only learning when a course is provided. It’s not going to happen. Even a genius who puts in the effort stands out amongst his or her peers. The same goes for you, but be smart about it. You can work yourself to death and not accomplish anything. So smart & hard is the way to go.