Benefits of delivering updates to the integration services via Windows Update

In Windows Server vNext, aka the Technical Preview, the integration services are being delivered through Windows Update (and, as such, through well-known tools such as WSUS, …). This significantly reduces the operational burden of making sure they are up to date. Many of us turned to PowerShell scripting to handle this task. So did I, and I still find myself tweaking the scripts once in a while for a condition I had not dealt with before or just to get better feedback or reporting. Did I ever tell you that story about the cluster where 100 VMs did not have a virtual DVD drive (they removed them to improve performance)? That led to yet another improvement to my script: detecting the absence of a virtual DVD drive. In this day and age, virtualization has scaled both up and out, with ever more virtual machines per host and in total. The process of having to load an ISO in a virtual DVD drive inside a virtual machine to install upgrades to the integration services seems arcane, and it’s very timely that it has been replaced by an operational process more befitting a Cloud OS.

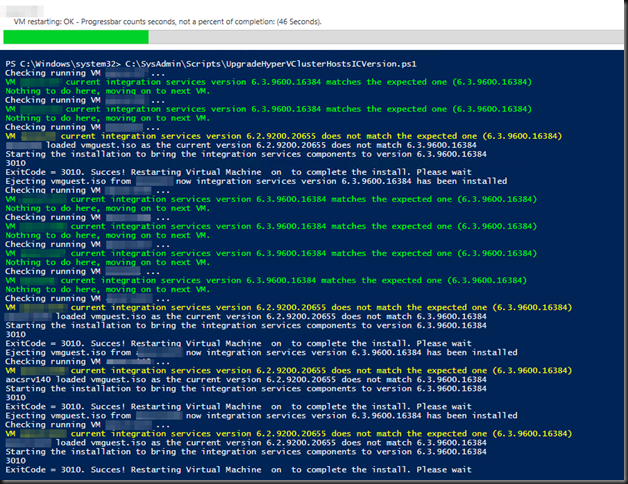

I have optimized this process with some PowerShell scripting, so it wasn’t too painful anymore. The script upgrades all the VMs on the hosts and even puts them back in the state it found them in (Stopped, Saved, Running). The screenshot shows the script in action.

I’m glad that it’s now integrated through Windows Update and part of other routine maintenance that’s done on the guests anyway.

But it’s not only good news for us “on premises” system administrators and integrators. It’s also important for service/cloud providers and (hosted) private cloud hosters. This change means that the tenants have control of updates to the integration services of their virtual machines. They update their Windows virtual machines with all updates during their normal patch cycles, and now this includes the integration services. This provides operational ease (a single method) and avoids some of the discussions about when to upgrade the integration services.

Legacy Operating Systems

Shortly after the release of the Windows Server Technical Preview, updates to integration services for Windows guests began being distributed through Windows Update. This means that on that version the vmguest.iso is no longer needed and, as such, it’s no longer included with Hyper-V. As a result, if you run an unsupported (most often legacy) version of Windows, you’ll need to grab the latest available vmguest.iso from a W2K12R2 Hyper-V host, try to install that, and see if it works.

What about Linux and FreeBSD?

Well, nothing has changed. You can read how that’s taken care of here: Linux and FreeBSD Virtual Machines on Hyper-V