In October 2010, Aidan Finn’s (MVP) book “Mastering Hyper-V Deployment” was released, and in November three copies of it landed on my desk. I bought them (pre-ordered) via Amazon. Nope, I did not get them as a gift or anything. Why three? Well, that’s the number of people I wanted to get up to speed on Hyper-V and on virtualization management and operations in a Microsoft environment.

His book takes you on a journey through a Hyper-V project that will teach you about virtualization in all its aspects. It also touches on many supporting technologies and products, such as System Center Virtual Machine Manager 2008 R2, System Center Essentials 2010, Data Protection Manager 2010 and System Center Operations Manager 2007 R2. No one book can be the only source of knowledge and understanding, but for both new and experienced IT pros this book is the best possible start for learning about virtualization with Hyper-V. Consider it going to an Ivy League college on a scholarship paid for by Aidan’s experience and hard work. The subsidized tuition fee is the price of the book.

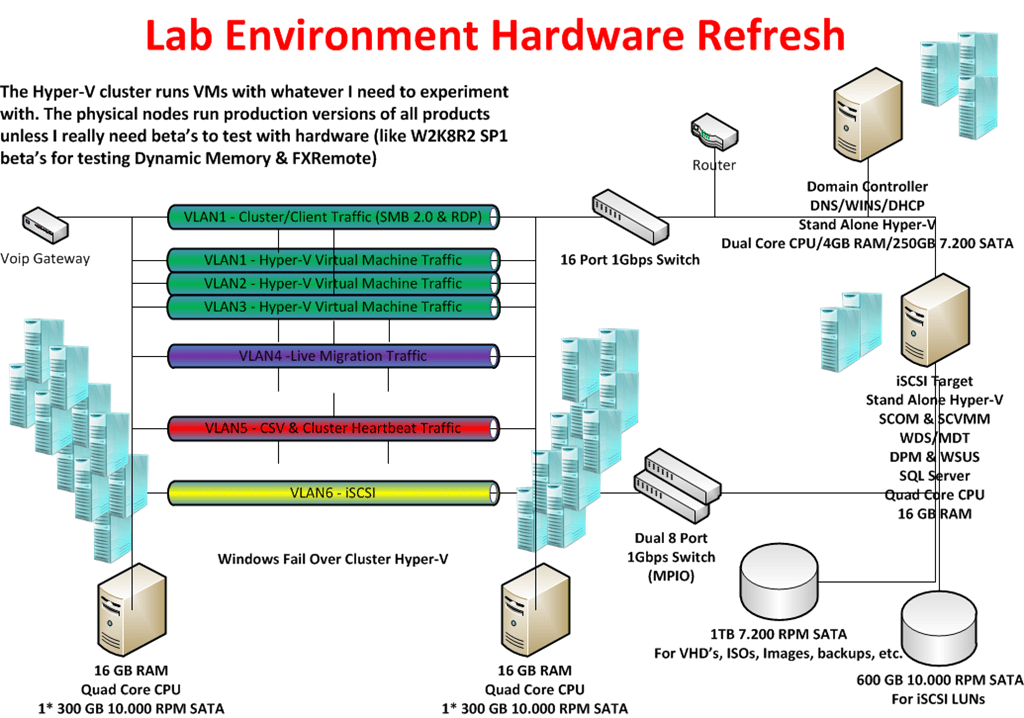

We feel a bit sorry that Aidan only got one copy, so we took a group picture of the gang of three on the desk of our newest team member. He got a copy of the book together with four recycled PCs and a TechNet subscription to build a lab.

If you know people who want or need to learn about Hyper-V, you’d do well to make sure they get this book and have them set up a lab to play with the technologies. Those efforts will pay off big time when they implement their solutions in the wild. If Ireland is doomed, it won’t be because of smart and hardworking Irish IT professionals like Aidan. You see, when you design, build and support IT solutions that your customers depend on 24/7, you cannot hide behind false promises, you can’t fake your way around the fact that “stuff” doesn’t work, and you can’t hide behind vast amounts of papers and documents void of any substance. Nope, you are responsible for everything and anything you build. Aidan, backed and supported by some very knowledgeable colleagues, has made that burden a bit lighter for you to bear with this book. Aidan’s blog lives here: http://www.aidanfinn.com/