Introduction

I set up a cluster running Windows 8 Server Developer Preview to test the SMB2 Scale Out functionality and I’m smiling. In my previous blog, Transparent Failover & Node Fault Tolerance With SMB 2.2 Tested, I already tested transparent failover with a more traditional active-passive file cluster, and that was pretty neat. But there are two things to note:

- The most important one to me is that the experience with transparent failover isn’t as fluid for the end user as it should be, in my opinion. That freeze is a bit too long to be comfortable. Whether that will change remains to be seen. It’s early days yet.

- The entire active-passive concept doesn’t scale very well, to put it mildly. Whether this is important to you depends on your needs. Today one beefy, well-configured server can serve up a massive amount of data to a large number of users. So in a lot of environments this might not be an issue at all (it’s OK not to be running a 300.000 user global file server infrastructure, really).

So bring in “File Server For Scale-Out Application Data”, which is an active/active cluster. This is intended for use by applications like SQL Server and Hyper-V, for example. It’s high speed, low drag, highly available file sharing based on SMB 2.2, Cluster Shared Volumes and failover clustering. The thing is, at this moment it is not aimed at end user file sharing (hence its name, “File Server For Scale-Out Application Data”). When I heard that, I was going “come on Microsoft, get this thing going for end user data as well”. Now that I have tested this in the lab, I want this even more, because the experience is much more fluid. So I have to ask Microsoft to please get this setup supported in a production environment for all file sharing purposes! This is so awesome as an experience for both applications AND end users. The other approach that would work (except perhaps for scaling) is making the transparent failover for an active-passive file cluster more fluid. But again, early days yet.

Setting Up The Lab

Build a “File Server for scale-out application data” cluster

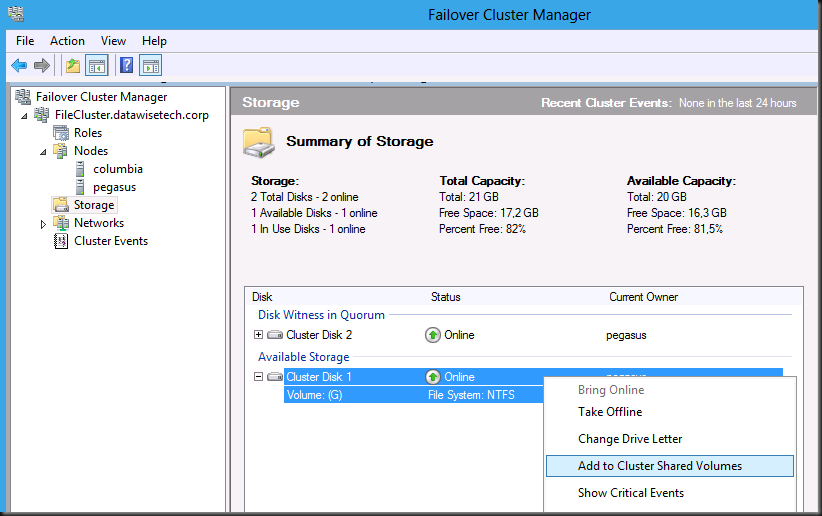

You need three virtual machines running Windows 8, two to build the cluster and one to use as a client. Once you have the cluster, you configure storage to be used as a Cluster Shared Volume (CSV).

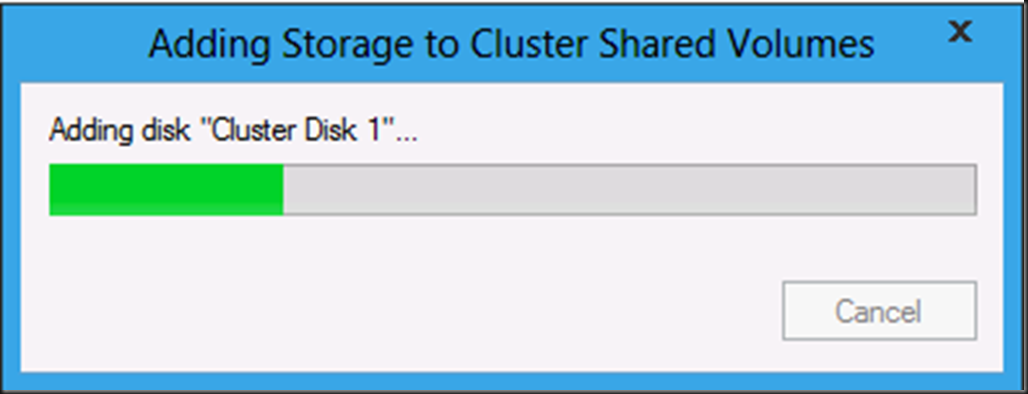

You’ll see the progress bar adding the storage to CSV

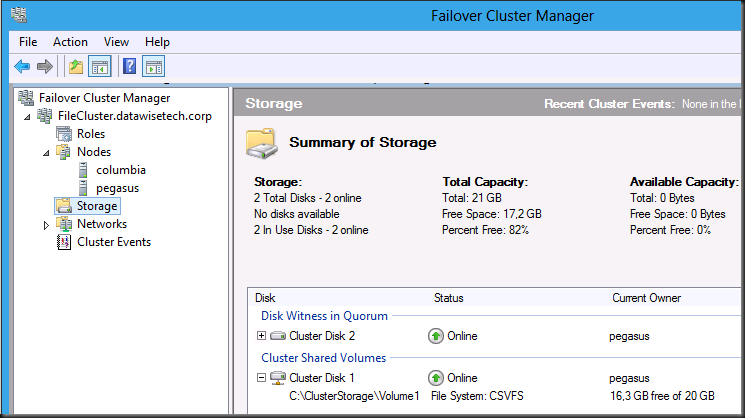

And voila you have CSV storage configured. Note that you don’t have to enable it any more and that there are no more warnings that this is only supported for Hyper-V data.

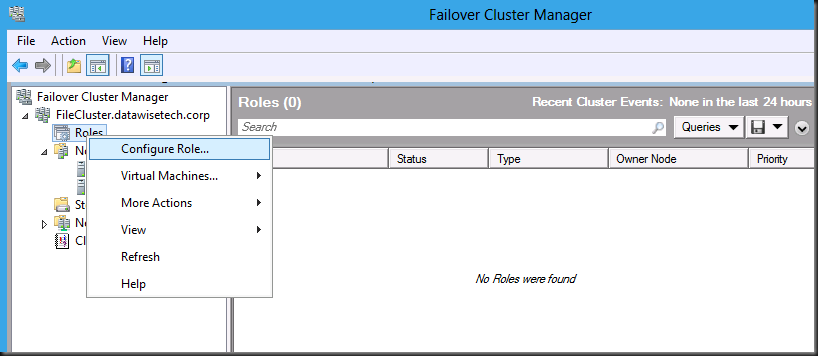

Now navigate to Role, right click and select “Configure Roles”

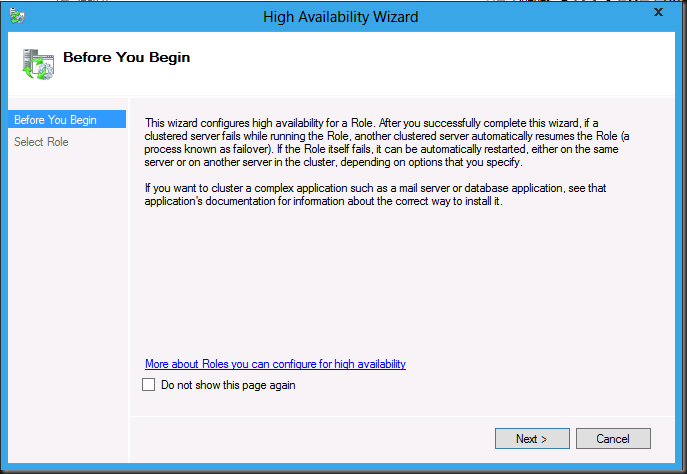

This brings up the High Availability Wizard

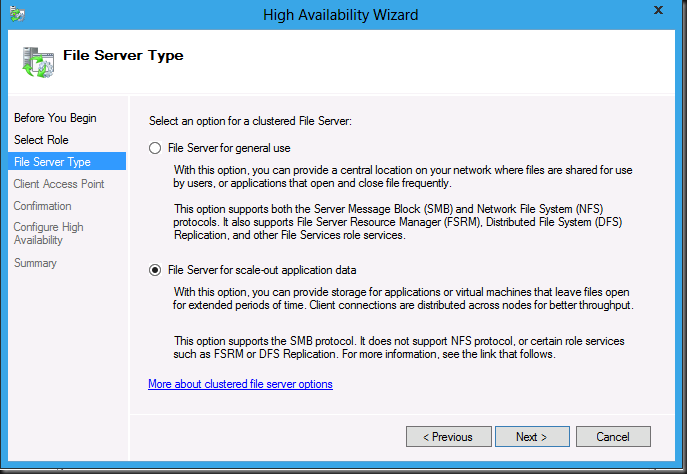

Click Next and select “File Server for scale-out application data”

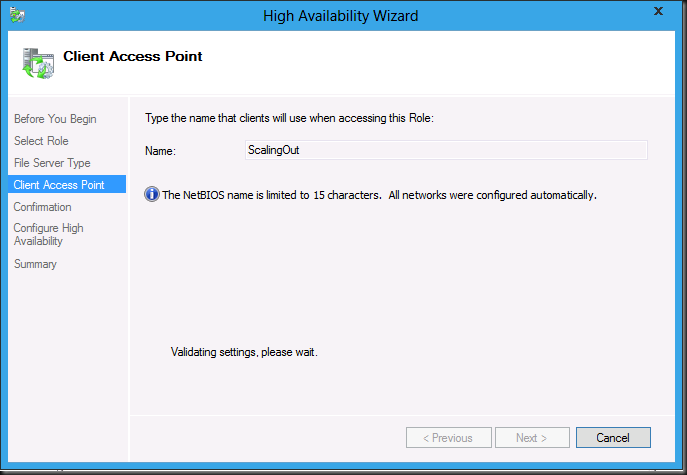

Give the Client Access Point a name

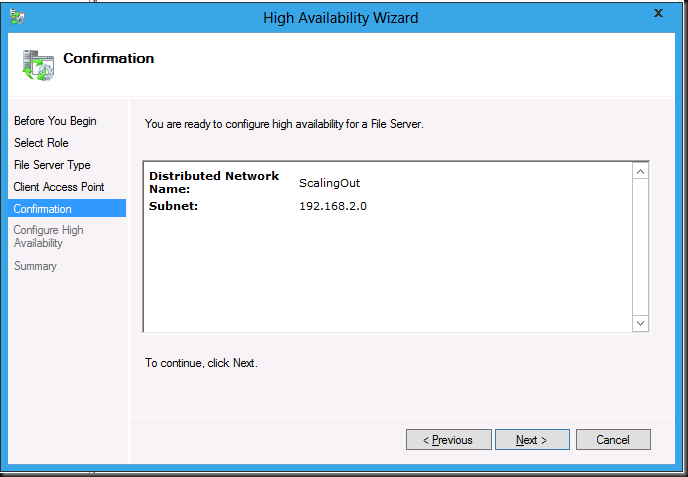

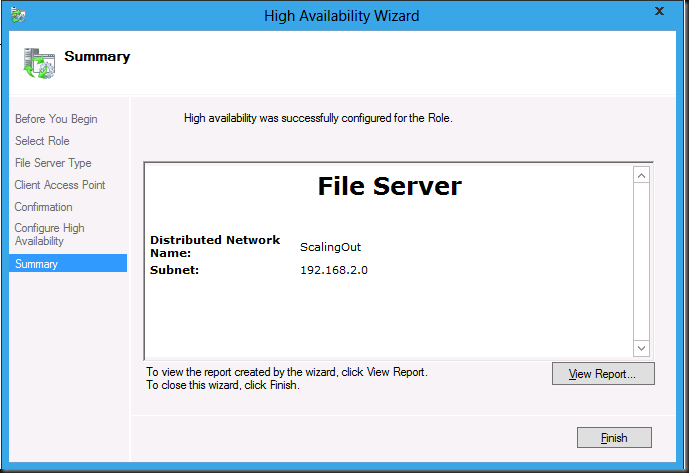

Click Next and on the following wizard page click confirm

And voila you’re done. Do notice the wizard skips the “Configure High Availability” step here.

Get a share up and running for use

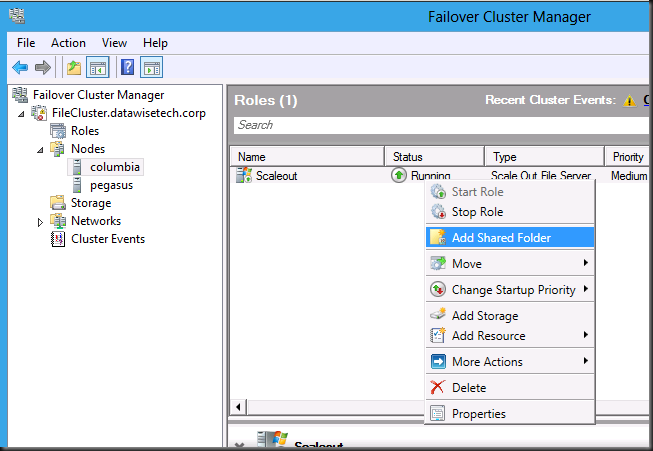

Don’t make the mistake of trying to double click on the item you see in the Role. Go to the node that owns the role and navigate to the role “ScaleOut”, right click and select add shared folder.

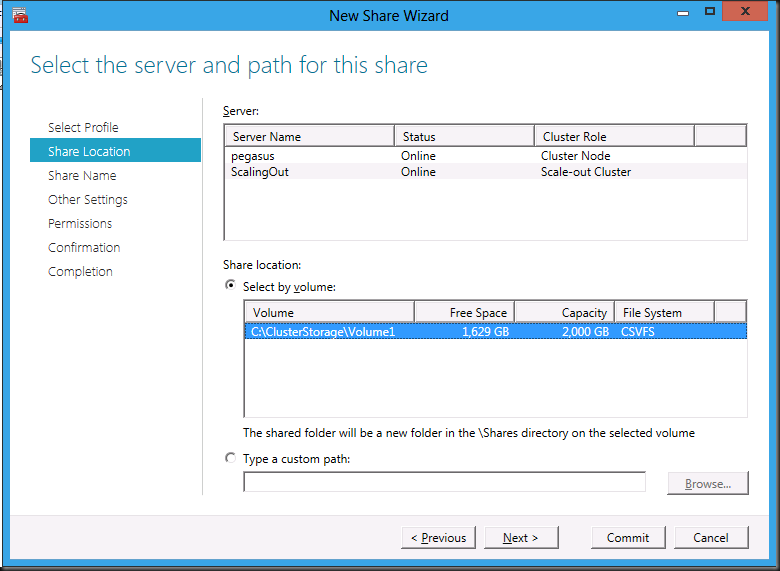

Select the cluster shared volume on the server “ScalingOut” which is actually the client access point.

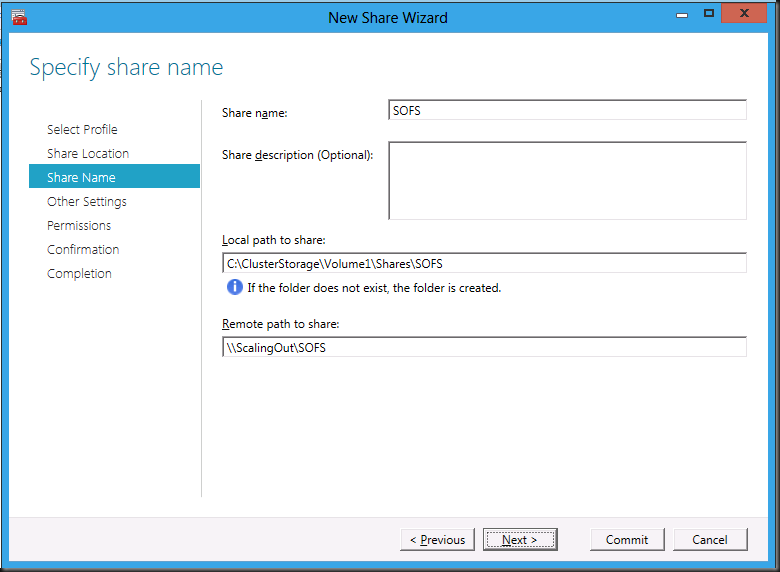

I gave the share the name SOFS (Scale Out File Share)

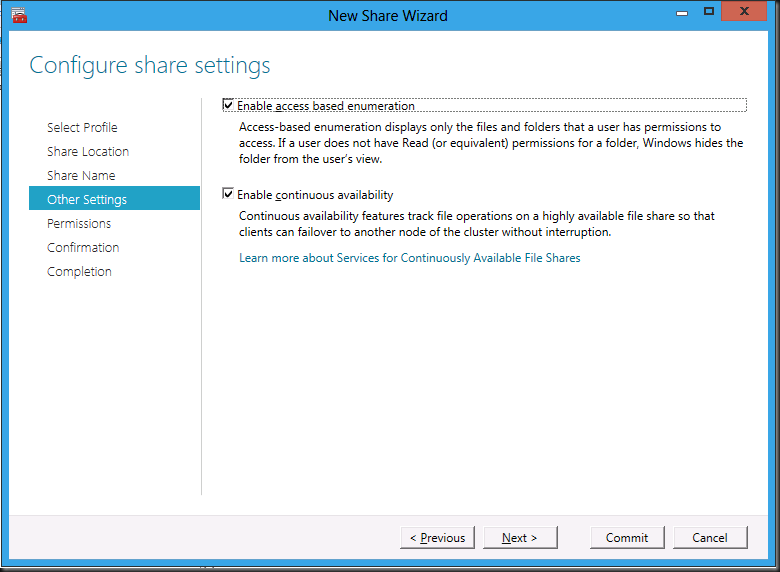

I like Access-Based Enumeration, so I enable this in addition to “Enable continuous availability”, which is enabled by default.

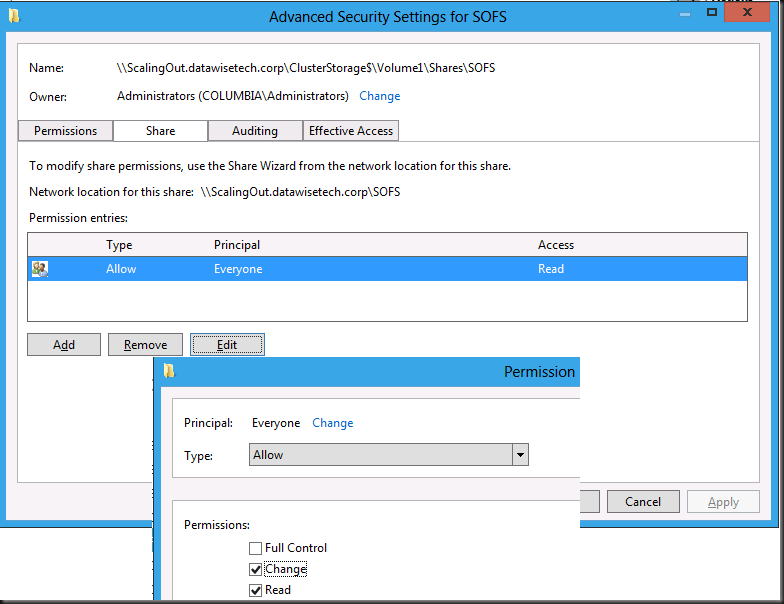

Then you get to the permissions settings. Here you have to make sure you set the share permissions to more than read if you want to do some writing to the share. Nothing new here.

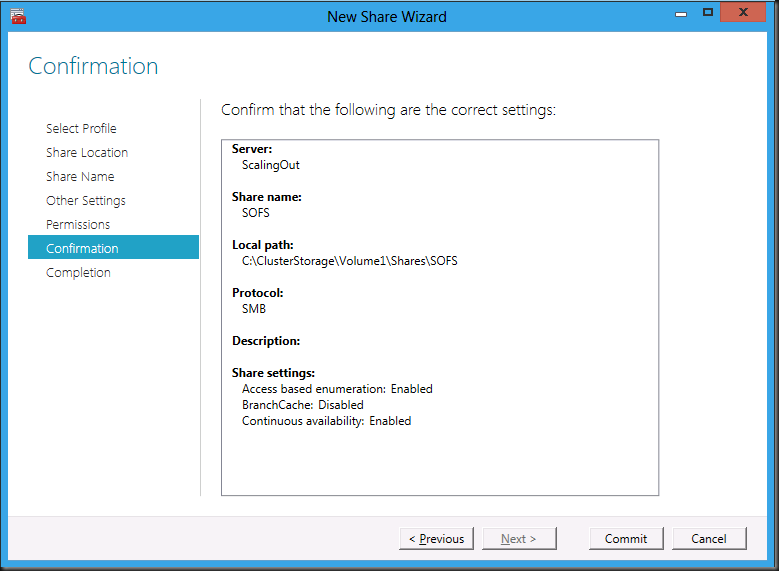

After that you’re almost done. Confirm your settings & click Commit

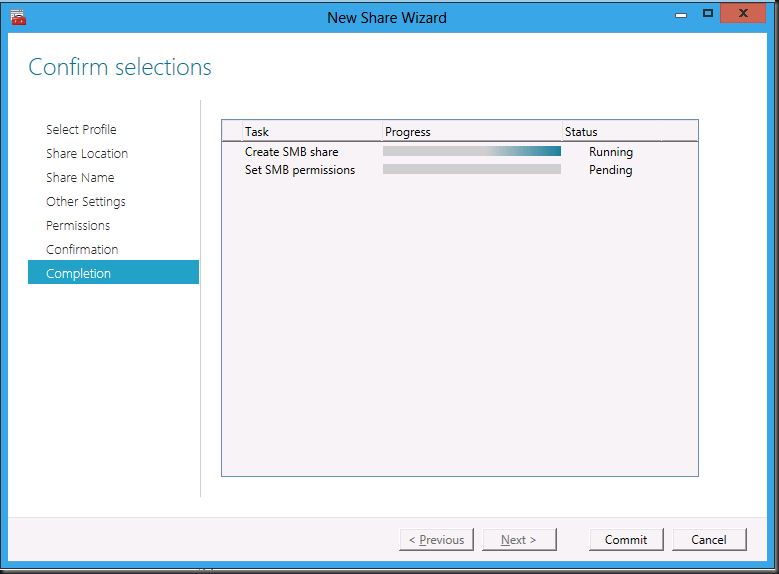

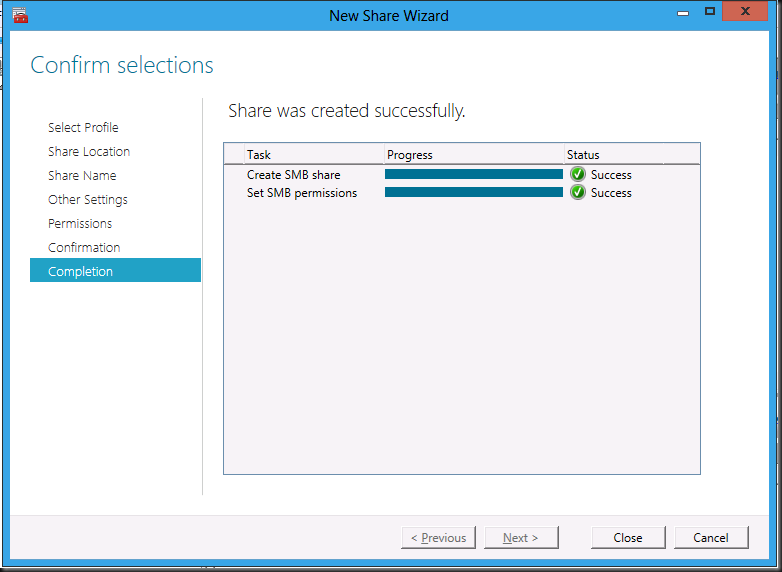

Watch the wizard do its magic

And it’s all set up

Play Time

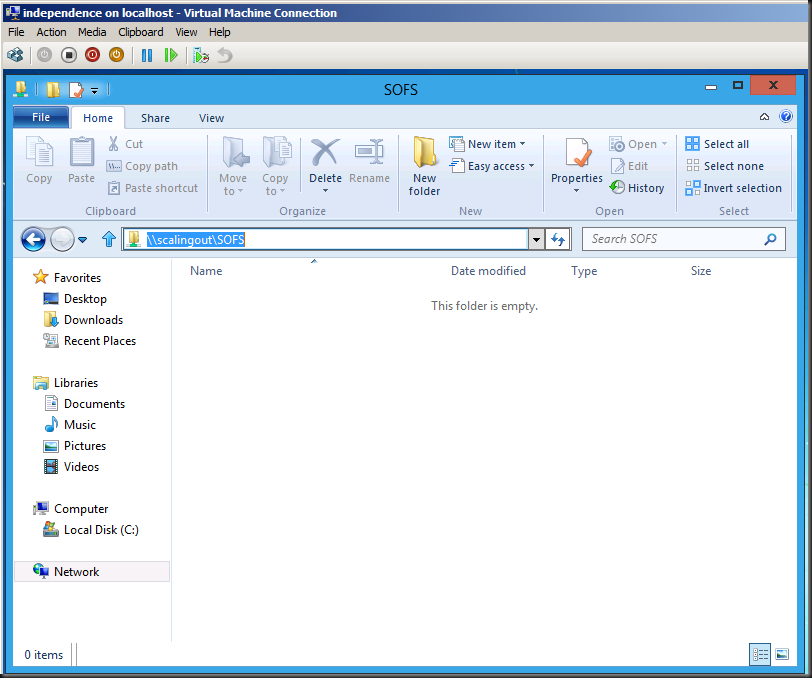

We have a third node “Independence” running Windows 8 Server to use as a client. As you can see we can easily navigate to the “server” via the access point.

And yes that’s about all you have to do. You can see the ease of name space management at work here.

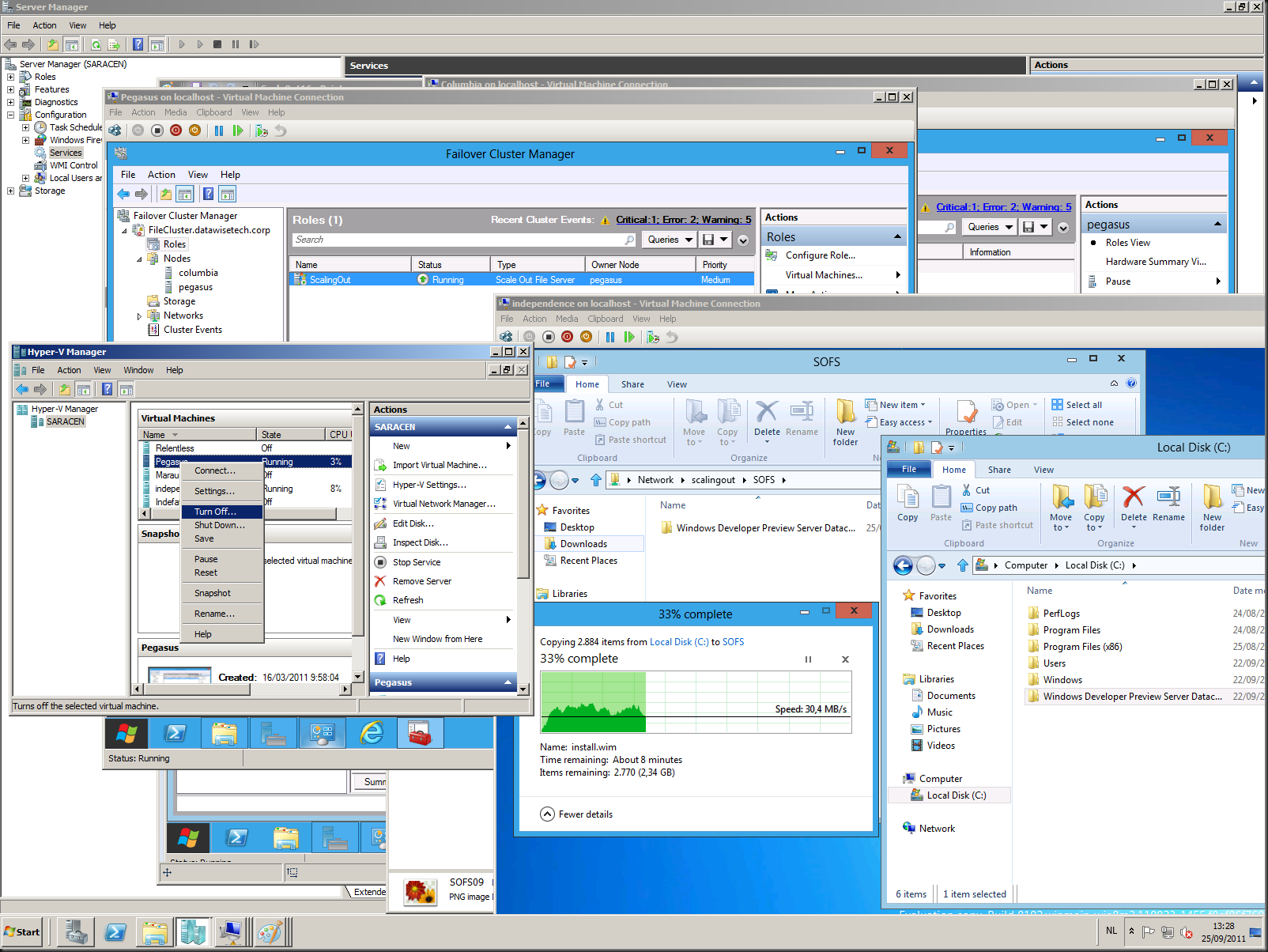

Now let’s copy some data and turn off one of the cluster nodes, the one that owns the role for example …

I was copying the content of the Windows 8 Server folder from Independence and failed over the node; the client did not notice anything. I then turned off the node holding the role, and the client noticed only a short delay (a couple of seconds at most). This was a completely transparent experience. I cannot stress enough how much I want this technology for my business customers. You can patch, repair or replace file server nodes at will, at any given moment, and no application or user has to notice a thing. People, this is Valhalla. This is the place where brave file server administrators who have served their customers well over the years, against all odds, have the right to go. They’ve earned this! Get this technology into their hands, and yes, even for end user file data. Or at least make the transparent failover for user file sharing as fluid. Make it happen, Microsoft! And while I’m asking, will there ever be an installable SMB 2.2 client for Windows 7? In SP2, please?!
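If you want to put a number on that “couple of seconds” instead of eyeballing the copy dialog, a small script on the client can time the copy chunk by chunk. This is only a rough sketch of how I would approach it, not something I ran as part of this test: the function name `copy_with_stall_log`, the 1 MB chunk size and the one second threshold are all my own assumptions.

```python
import os
import time


def copy_with_stall_log(src, dst, chunk_size=1024 * 1024, stall_threshold=1.0):
    """Copy src to dst chunk by chunk and record the chunks that were slow.

    Returns (bytes_copied, stalls), where stalls is a list of
    (offset, seconds) for every chunk whose read + write + flush took
    longer than stall_threshold seconds. All names and defaults here
    are illustrative assumptions.
    """
    stalls = []
    copied = 0
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            start = time.monotonic()
            chunk = fin.read(chunk_size)
            if not chunk:
                break
            fout.write(chunk)
            fout.flush()
            # Ask the OS to push the chunk out, so the timing reflects
            # the round trip to the file server and not just local caching.
            os.fsync(fout.fileno())
            elapsed = time.monotonic() - start
            if elapsed > stall_threshold:
                stalls.append((copied, elapsed))
            copied += len(chunk)
    return copied, stalls
```

On the client you would call it with a local file and a path on the share, for example `copy_with_stall_log(r"C:\temp\big.iso", r"\\ScalingOut\SOFS\big.iso")` (the local path is hypothetical), then power off the node that owns the role while it runs. Any entry in `stalls` tells you at which offset the copy paused and for how long.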

Learn more here by watching the sessions from the Build conference at http://www.buildwindows.com/Sessions

Noticed bugs

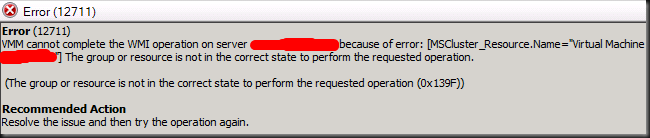

The shares don’t always show up in the share pane after a failover.

Conclusion

This is awesome, this is big, this is a game changer in the file serving business. Listen, file services are not dead, far from it. They weren’t very sexy, and until now we didn’t have the holy grail of high availability for that role. I have seen the future and it looks great. Set up a lab, people, and play at will. Take down servers in any way imaginable and see your file activities survive without a hint of disruption. As long as you make sure that you have multiple nodes in the cluster, and that if these are virtual machines they always reside on different nodes of a failover cluster, it will take a total failure of the entire cluster to bring your file services down. So how do you like them apples?
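For the virtual machine case, the “always reside on different nodes” condition is easy to sanity check once you have a list of which file server VM runs on which host. A minimal sketch, assuming you keep that placement in a simple mapping; the function name and the VM and host names in the example are made up for illustration:

```python
from collections import defaultdict


def hosts_sharing_file_server_nodes(placement):
    """Return {host: [vm, ...]} for every host running more than one node VM.

    placement maps each virtual file server node to the physical host
    it currently runs on. An empty result means no single host failure
    can take down more than one file server node.
    """
    by_host = defaultdict(list)
    for vm, host in placement.items():
        by_host[host].append(vm)
    return {host: sorted(vms) for host, vms in by_host.items() if len(vms) > 1}
```

With a placement like `{"fs-node1": "host-a", "fs-node2": "host-b"}` the result is empty, which is what you want. If both VMs end up on `host-a`, that host shows up in the result together with the two VMs it carries.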