Visualize an Always On VPN device tunnel connection while disabling the disconnect button

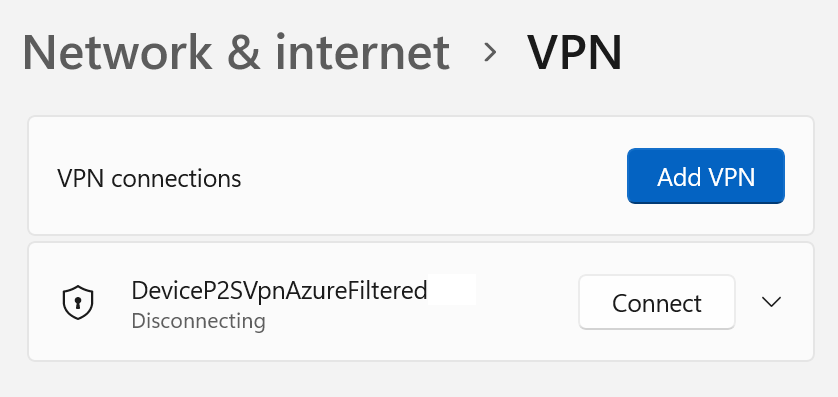

The need to visualize an Always On VPN device tunnel connection while disabling the disconnect button arises when the user experiences connectivity issues. End users should be able to communicate quickly to their support desk whether or not they have a connected Always On VPN device tunnel. They usually do not see the device VPN tunnel in the modern UI. Only user VPN tunnels show up. Naturally, we don’t want them to disconnect the device VPN or change its properties, so we want to disable the “disconnect” and the “advanced setting buttons. Since a device VPN tunnel runs as a “SYSTEM,” they cannot do this anyway. The GUI shows “Disconnecting” but never complete.

Refreshing the GUI correctly shows “Connected” again. However, it makes sense to disable the buttons to indicate this. So how to we set all of this up?

Visualize an Always On VPN device tunnel connection

Visualizing the Always On VPN device tunnel in the modern GUI is something we achieve via the registry. Scripting deploying these registry settings via GPO or Intune is the way to go.

New-Item -Path ‘HKLM:\SOFTWARE\Microsoft\Flyout\VPN’ -Force New-ItemProperty -Path ‘HKLM:\Software\Microsoft\Flyout\VPN\’ -Name ‘ShowDeviceTunnelInUI’ -PropertyType DWORD -Value 1 -Force

Disable the disconnect button and the advanced options buttons

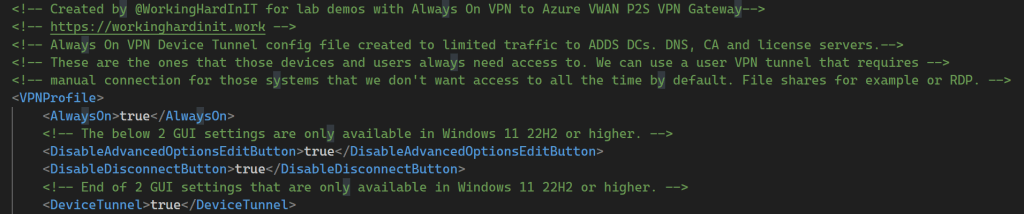

Now that the Always On VPN device tunnel is visible in the GUI, we want to disable the disconnect button and the advanced options buttons. How? Well, we can do this in Windows 11 22H2 or more recent versions. For this, we add the following to the VPN configuration file.

<!-- The below 2 GUI settings are only available in Windows 11 22H2 or higher. --><DisableAdvancedOptionsEditButton>true</DisableAdvancedOptionsEditButton><DisableDisconnectButton>true</DisableDisconnectButton>

<!– These GUI settings below are only available in Windows 11 22H2 or higher. –> <DisableAdvancedOptionsEditButton>true</DisableAdvancedOptionsEditButton> <DisableDisconnectButton>true</DisableDisconnectButton>

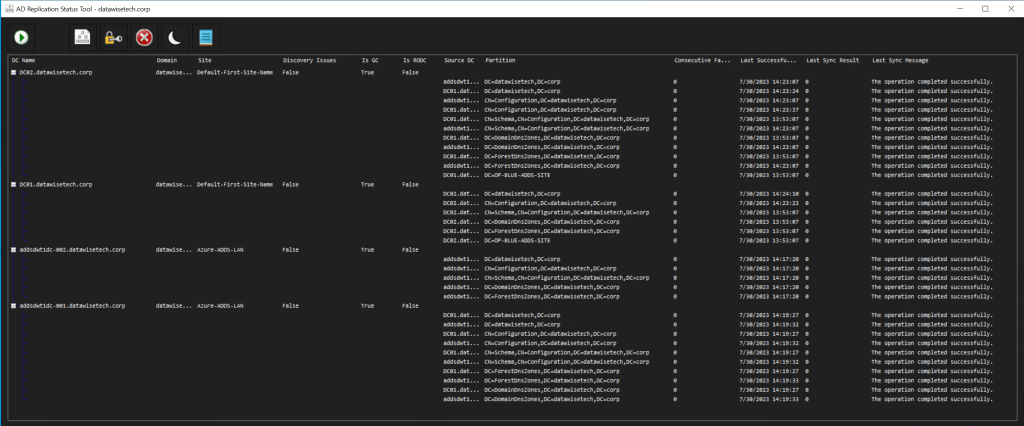

Results

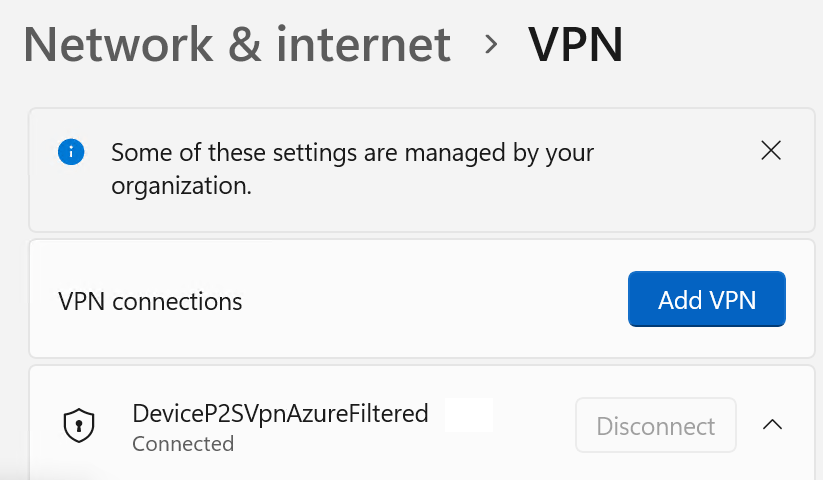

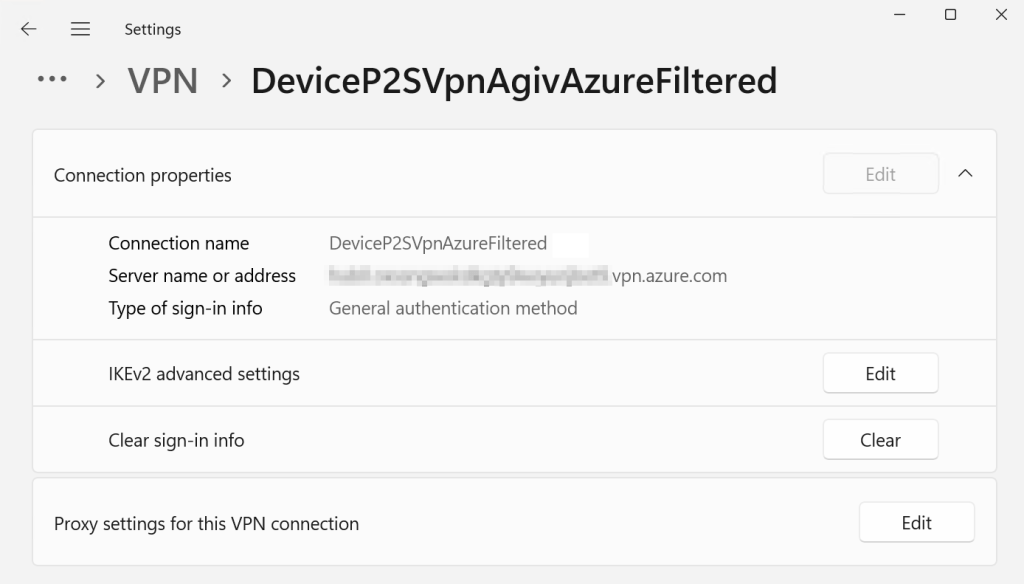

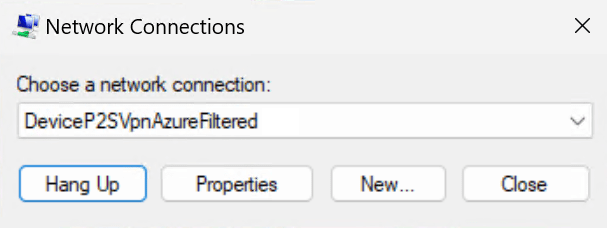

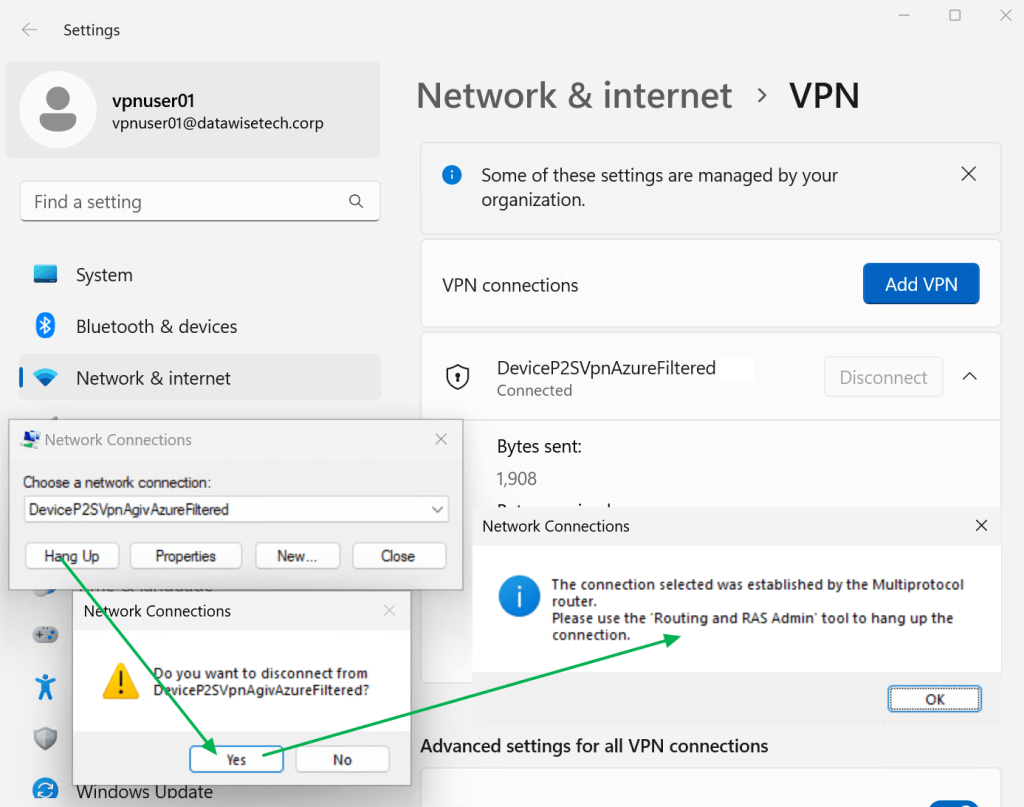

For an administrative account, the Always On VPN device tunnel is visible, but the buttons are dimmed (greyed out).

As before, the administrator can still use the rasphone GUI to hang up the Always On VPN device tunnel or edit the properties like before. Usually, you’ll configure the setting with Intune or via GPO with Powershell and custom XML. There is also a 3rd party option for configuring Always On VPNs via GPO (AOVPN Dynamic Profile Configurator).

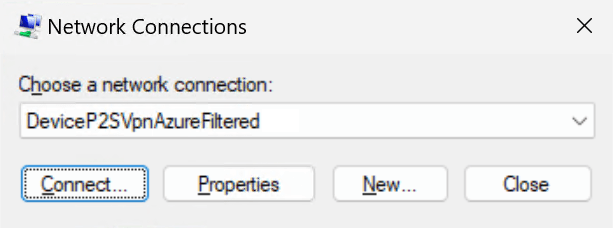

For a non-administrator user account, the GUI looks precisely the same. The big difference is that when such a user launches the rasphone GUI, they cannot “Hang Up” the connection. The error message may not be the clearest, but in the end, a user with non-administrative rights cannot disconnect the Always On VPN device tunnel.

So now we have the best of both worlds. An administrator and a standard user can see that the Always On VPN device tunnel is connected. Remember that disabling the buttons requires Windows 11 22H2 or more recent. This blog was written using 23H2. The administrator can use the rasphone GUI or rasdial CLI to access the Always On VPN device tunnel like before.

Conclusion

Device VPN tunnels are supposed to be connected at all times, whether a user is logged on or not. It is also something that users are not supposed to be concerned about in contrast to a user VPN tunnel. However, it can be beneficial to see whether the Always On VPN device tunnel is connected. That is most certainly so when talking to support about an issue. We showed you how to achieve this, combined with disabling the “disconnect” and “advanced” options buttons), in this blog post.