Introduction

The moment I figured out that Storage Live Migration (in certain scenarios) and Shared Nothing Live Migration leverage SMB 3.0, and as such Multichannel and RDMA, in Windows Server 2012, I was hooked. I just couldn’t let go of the concept of leveraging RDMA for those scenarios. Let me show you the value of my current favorite network design for some demanding Hyper-V environments. I was challenged a couple of times on the cost per port of this design, which is, when you really think of it, a very myopic way of calculating TCO/ROI. Really it is. And this week at TechEd North America 2013 Microsoft announced that all types of Live Migration support Multichannel & RDMA (next to compression) in Windows Server 2012 R2. Watch that in action at minute 39 of the session Understanding the Hyper-V over SMB Scenario, Configurations, and End-to-End Performance. You should have seen the smile on my face when I heard that one! Yes, standard Live Migration now uses multiple NICs (no teaming) and RDMA for lightning fast VM mobility & storage traffic. People, you will hit the speed boundaries of DDR3 memory with this! The TCO/ROI of our plans just became even better, just watch the session.

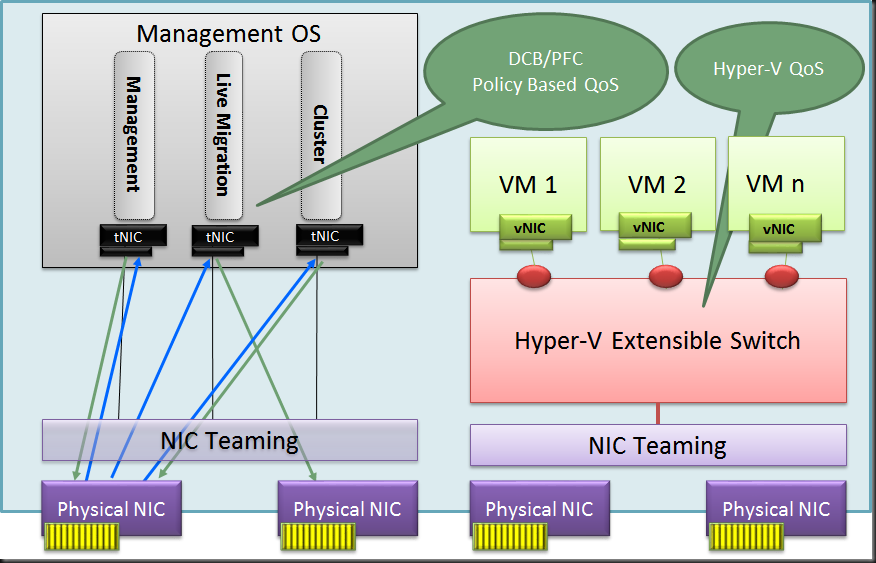

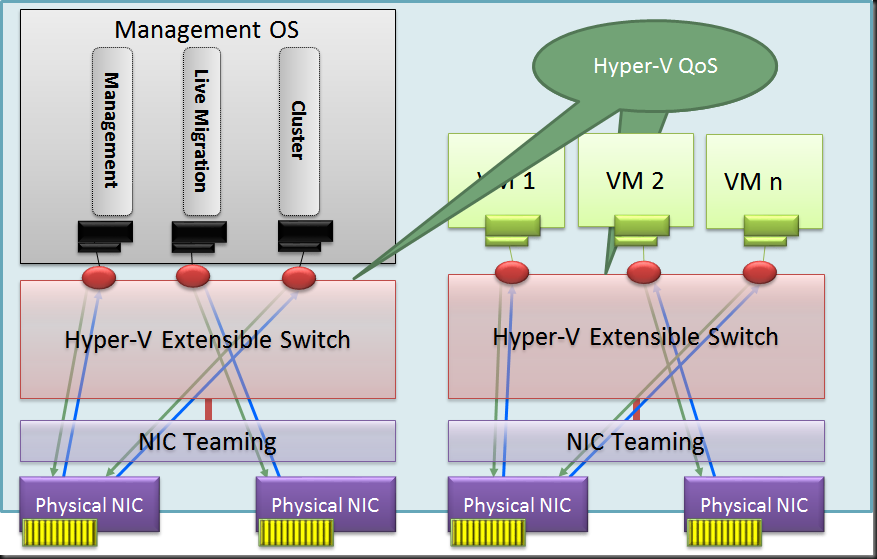

So why might I use more than two 10Gbps NIC ports in a team with converged networking for Hyper-V in Windows Server 2012? That is a great solution for sure: a combined bandwidth of 2*10Gbps is more than what a lot of people have right now, and it can handle a serious workload. So don’t get me wrong, I like that solution. But sometimes more is asked for and warranted, depending on your environment.
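To put rough numbers on what extra 10Gbps ports buy you, here is a minimal back-of-the-envelope sketch. It is not a measurement and not how Hyper-V schedules a live migration: the function name `migration_seconds` and the `efficiency` factor of 0.9 are assumptions of mine for illustration, and it ignores dirty page re-copying, compression and RDMA offload effects. It only shows how an idealized single pass over a VM's memory scales with the number of ports.

```python
def migration_seconds(memory_gib: float, links: int,
                      link_gbps: float = 10.0,
                      efficiency: float = 0.9) -> float:
    """Idealized time to copy a VM's memory once over parallel NIC ports.

    efficiency is an assumed fudge factor for protocol overhead,
    not a measured value.
    """
    if links < 1:
        raise ValueError("at least one link is required")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits_to_move = memory_gib * 1024**3 * 8           # GiB -> bits
    usable_bps = links * link_gbps * 1e9 * efficiency  # aggregate bits/s
    return bits_to_move / usable_bps


if __name__ == "__main__":
    # Hypothetical example: a 64 GiB VM over 1, 2 and 4 x 10Gbps ports.
    for links in (1, 2, 4):
        seconds = migration_seconds(64, links)
        print(f"{links} x 10Gbps: {seconds:.1f} s")
```

The point is the linear scaling: under these assumptions, doubling the number of ports halves the transfer time, which adds up quickly when you need to drain a host with many large VMs.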

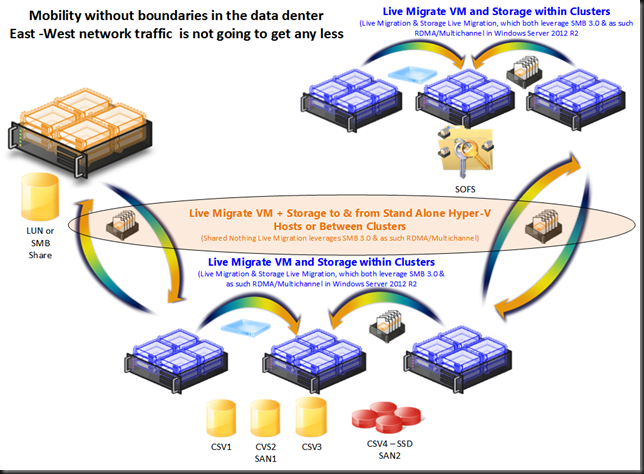

The reason for this is shown in the picture below. Today there is no more limit on the VM mobility within a data center. This will only become more common in the future.

This is not just a wet dream of virtualization engineers; it serves some very real needs. Of course it does. Otherwise I would not spend the money. It consumes extra 10Gbps ports on the network switches, which need to be redundant as well, and you need to have 10Gbps RDMA capable cards and DCB capable switches. So why this investment? Well, I’m designing for very flexible and dynamic environments that have certain demands laid down by the business. Let’s have a look at those.

The Road to Continuous Availability

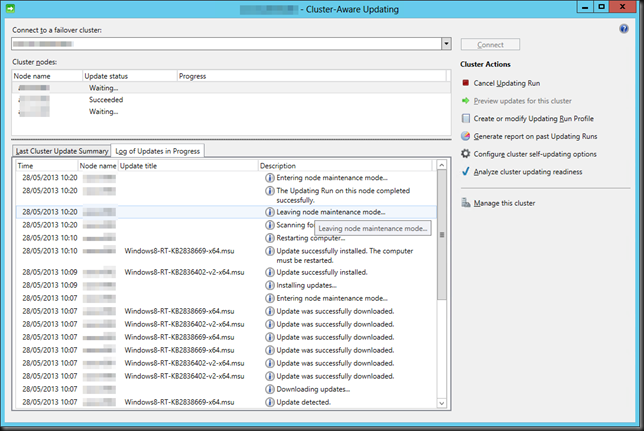

All maintenance operations, troubleshooting and even upgrades/migrations should be done with minimal impact to the business. This means that we need to build for high to continuous availability where practical and make sure performance doesn’t suffer too much, not noticeably anyway. That’s where the capability to live migrate virtual machines off a host, clustered or not, rapidly and efficiently, with minimal impact on the workload of the hosts involved, comes into play.

Dynamic Environments won’t tolerate downtime

We also want to leverage our resources where and when they are needed the most. And the infrastructure for the above can also be leveraged for that. Storage Live Migration and even Shared Nothing Live Migration can be used to place virtual machine workloads where they get the resources they need. You could see this as (dynamically) optimizing the workload both within and across clusters or amongst standalone Hyper-V nodes. The target could be an SSD-only storage array, or a smaller but very powerful node or cluster with regard to CPU, memory and disk IO. This can be useful in scenarios where scientific applications, number crunching, IOPS intensive software or the like need those resources, but only at certain times and not permanently.

Future proofing for future storage designs

Maybe you’re an old time Fibre Channel user, or iSCSI rules your current data center, and Windows Server 2012 has not changed that. But that doesn’t mean it will not come. The option of using a Scale Out File Server and leveraging SMB 3.0 file shares to provide storage for Hyper-V deployments is a very attractive one in many respects. And if you build the network as I’m doing, you’re ready to switch to SMB 3.0 without missing a heartbeat. If you were to deplete the bandwidth x number of 10Gbps ports can offer, no worries, you’ll either use 40Gbps and up or InfiniBand. If you don’t want to go there … well, since you just dumped iSCSI or FC, you have room for some more 10Gbps ports.

Future proofing performance demands

Solutions tend to stay in place longer than envisioned, and if you need some longevity and a stable, standard way of doing networking, here it is. It’s not the most economical way of doing things, but it’s not as cost prohibitive as you think. Recently I was confronted again with some of the insanities of enterprise IT. A couple of network architects costing a hefty daily rate stated that 1Gbps is only for the data center and not the desktop, while even arguing about the cost of some fiber cable versus RJ45 (CAT5E). Well, let’s look beyond the North – South traffic and the cost of aggregating bandwidth all the way up the stack, shall we? Let me tell you that the money spent on such advisers can buy you 10Gbps capabilities in the server room or data center (and some 1Gbps for the desktops to go) if you shop around and negotiate well. The one size fits all approach and the ridiculous economies of scale “to make it affordable” argument in big central IT are not always the best fit for helping the customers. Think a little bit outside of the box please, and don’t say no out of habit or laziness!

Conclusion

In some future blog post(s) we’ll take a look at what such a network design might look like and why. There is no one size fits all, but there are not too many permutations either. In our latest efforts we have been specifically looking into making sure that a single rack failure would not bring down a cluster. So when thinking of the rack as a failure domain, we need to spread the cluster nodes across multiple racks in different rows. That means we need the network to provide the connectivity & capability to support this, but more on that later.