The Issues

I recently had to go and fix some issues with a couple of virtual machines in SCVMM 2008 R2. There was one that failed to live migrate with following error:

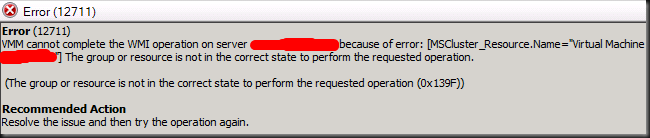

Error (12711)

VMM cannot complete the WMI operation on server HopelessVm.test.lab because of

error: [MSCluster_ResourceGroup.Name=" df43bf60-7216-47ed-9560-7561d24c7dc8"] The cluster group could not be found.

(The cluster group could not be found (0×1395))

Recommended Action

Resolve the issue and then try the operation again

Other than that it looked fine and could be managed with SCVMM 2008 R2. Another one was totally wrecked it seemed. It was in a failed state after an attempted live migration. You couldn’t do anything with it anymore. Repair was “available” but every option there failed so basically that was the end of the game with that VM. Both issues can be resolved with the approach I’ll describe below.

The Cause

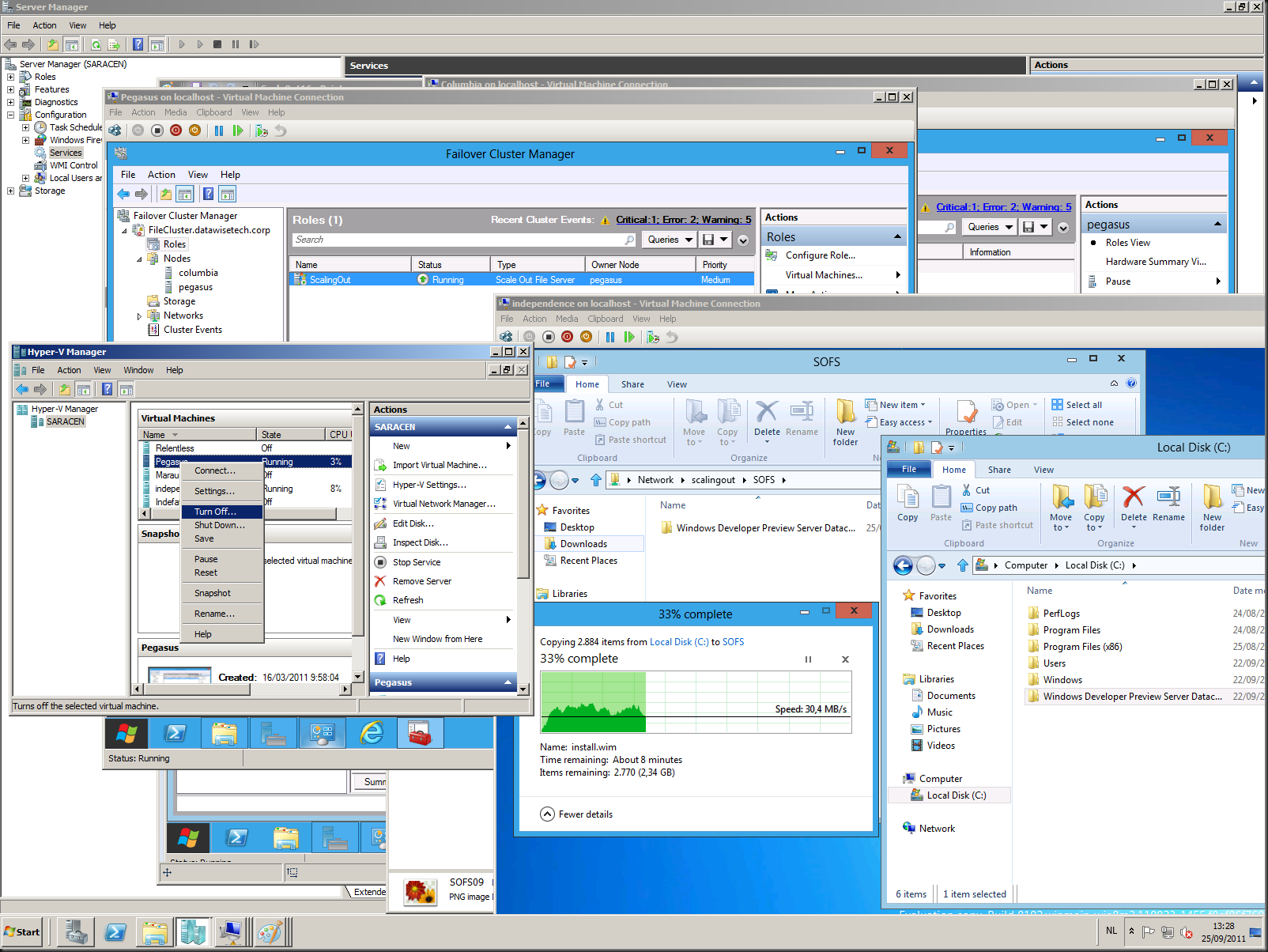

After some investigation the cause of this was the fact that this virtual machine had been removed from the failover cluster as a resource was exported & imported using Hyper-V manager on one of the cluster nodes. It was then added back to the failover cluster again to make them high available. All this was done without removing it from SCVMM 2008 R2. By the way, as mentioned above in “The Issues” this can get even worse than just failing live migrations. The same scenario can lead to virtual machines going into a failed state that you can’t repair (retry or undo fail) or ignore and basically you’re stuck at that point. You can’t even stop, start, shutdown the virtual machine anymore, not one single operation works in SCVMM while in the failover cluster GUI and in hyper-v manager everything is fully operational. This is important to note, as the services are fully on line and functional. It’s just in SCVMM that you’re in trouble.

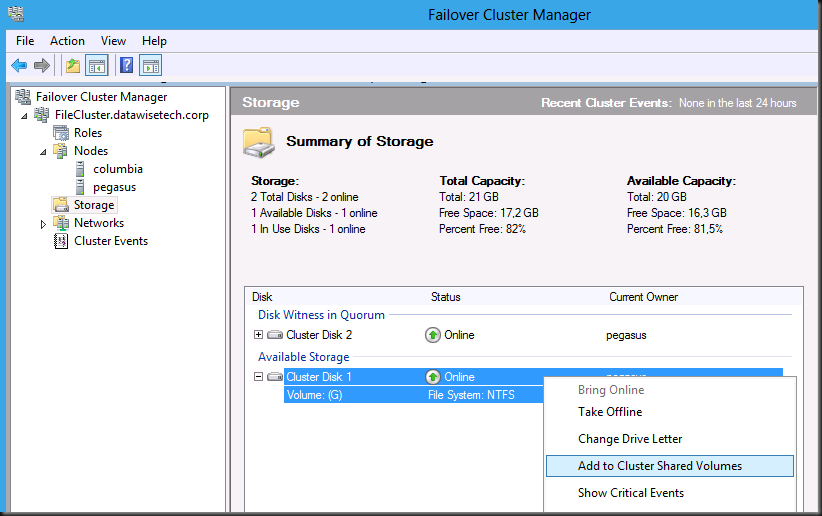

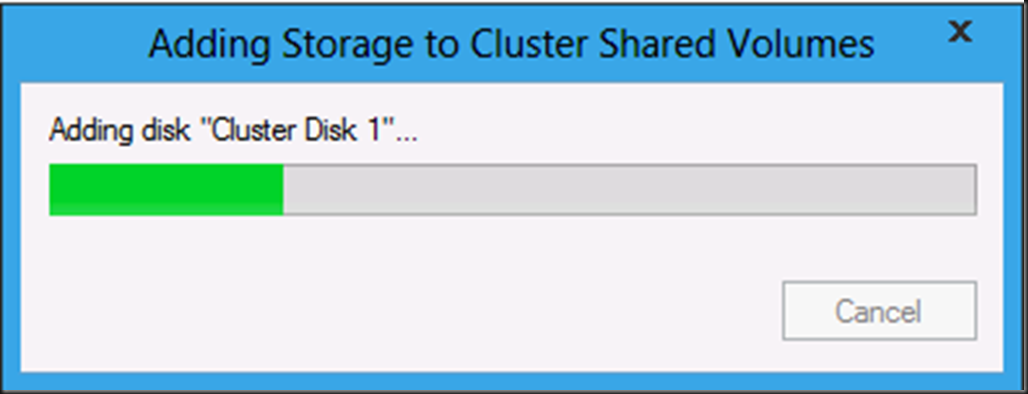

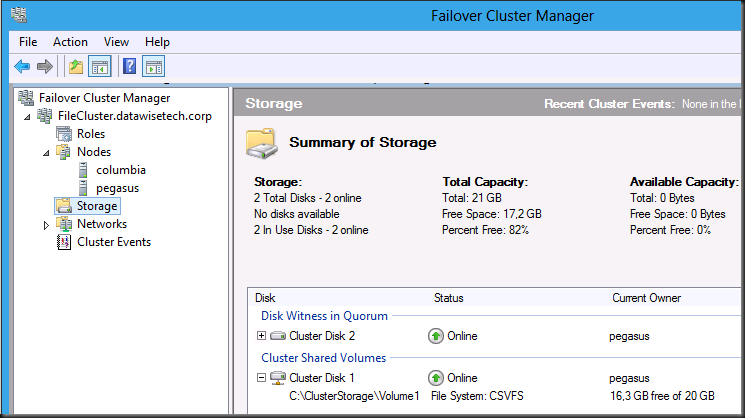

Why did they do it this way? They did it to move the VM to a new CSV. The fact that you delete the VM files when deleting a VM with SCVmm2008R2 made them use Hyper-V manager instead. Now this approach (whatever you think of it) can work but then you need to delete the VM in SCVMM2008R2 after exporting the virtual machine AND before proceeding with the import and making the virtual machine highly available.

People get creative in how to achieve things due to inconsistencies, differences in functionality between Hyper-V Manger and SCVMM 2008R2 (in the latter especially the lack of complete control over naming, files & folders, export/migration behavior) as well as the needs of the failover cluster can lead to some confusing scenarios.

The Supported Fix

Now the easy way to fix this is to export the virtual machine again and delete it in SCVMM 2008 R2. That will remove the virtual machine object from SCVMM, the failover cluster en Virtual Machine Manager. However this virtual machine was so large (50Gb + 750 GB data disk) that there was no room for an export to be made. Secondly an export of such a large VM takes a considerable time and it has to be off line for this operation. This is annoying as SCVMM might be uncooperative at this point, the virtual machine is online en performing it’s duties for the business. So this presented us with a bit of a problem. Stopping the virtual machine, Exporting it using Hyper-V Manager will cause it to go missing in SCVMM 2012 and then you can delete it, importing the virtual machine again and adding it to the failover cluster causes down time.

The Root Cause

Why does this happen? Well when you import a virtual machine into a failover cluster is creates a new unique ID for the virtual machine Resource Group . This happens always. Choosing to reuse an existing ID during import in Hyper-V Manager has nothing to do with this. But VMM uses ID/names to identify a VM, independent of the cluster. So when you did not remove the VM from SCVMM before adding the VM back to the cluster you get a different cluster group ID in the cluster than you have in SCVMM. They both have the same name but there is a disconnect leading to the issues described above.

By the way exporting & importing a VM without first removing the virtual machine from the failover cluster leads to some issues in the Failover cluster so don’t do that either ![]()

The “No Down Time” Fix

This is not the first time we need to dive in to the SCVMM database to fix issues. One of my main beef about SCVMM other than inconsistency with the other tools and its lack of control & options in some scenarios is the fact that it doesn’t have enough self-maintenance intelligence & functionality. This leads to the workaround above which are slow and rather annoying or consist of messing around in the SCVMM database, which isn’t exactly supported. Mind you Microsoft has published some T-SQL to clean up such issues themselves. See You cannot delete a missing VM in SCVMM 2008 or in SCVMM 2008 R2 and RemoveMissingVMs. See also my blog SCVMM 2008 R2 Phantom VM guests after Blue Screen post on this subject.

The usual tricks of the trade like refreshing the virtual machine configuration in the failover cluster GUI don’t work here. Neither does the solution to this error described Migrating a System Center Virtual Machine Manager 2008 VM from one cluster to another fails with error 12711. The error is the same but not the cause.

# Add the VMM cmdlets Add-PSSnapin microsoft.systemcenter.virtualmachinemanager # Connect to the VMM server Get-VMMServer –ComputerName MySCVMMServer.test.lab # Grab the problematic VM and put it into the object $vm $vm = Get-VM –name “HopelessVM” #Force a refresh refresh-vm -force $vm

In the end we have to fix the mismatch between the VMResourceGroupID in failover cluster and SCVMM by editing the database.

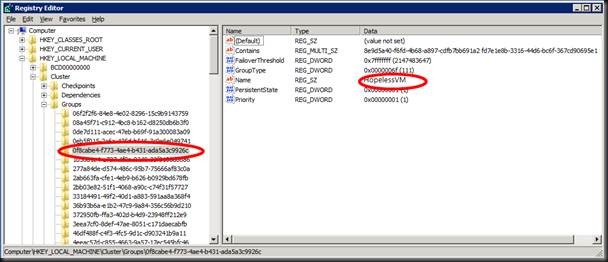

First you navigate to the registry key HKEY_LOCAL_MACHINEClusterGroups on one the cluster nodes, do a find for the problematic VM’s name and grab the name of its key, this is the VMResourceGroupID the cluster knows and works with? So now we have the correct VMResourceGroupID: 0f8cabe4-f773-4ae4-b431-ada5a3c9926c

Now you connect to the SCVMM database and run following query to find the VMResourceGroupID that SCVMM thinks that VM has and that it uses causing the issues

SELECT VMResourceGroupID FROM tbl_WLC_VMInstance WHERE ComputerName = 'hopelessVM.test.lab' GO

The results:

VMResourceGroupID

————————————————–

df43bf60-7216-47ed-9560-7561d24c7dc8

(1 row(s) affected)

The trick than is to simply update that value to the one you just got from the registry by running:

UPDATE tbl_WLC_VMInstance SET VMResourceGroupID = '0f8cabe4-f773-4ae4-b431-ada5a3c9926c' WHERE VMResourceGroupID = 'df43bf60-7216-47ed-9560-7561d24c7dc8' GO

Than you need some patience & refresh the GUI a few times. Things will turn back to normal, but in between you might seem some “missing” statuses appear for your problematic VM. These go away fast however. If not you can always use the Microsoft provided script to remove missing VM’s as mentioned above in RemoveMissingVMs.

Warning

What I described above is something you can do to fix these issues fast and effectively when needed. But I’m not telling you this is the way to go, let alone that this is supported. Make sure you have backups of your VMs, Hosts, SCVMM database etc. It only takes one mistake or misinterpretation to royally shoot yourself in your foot ![]() . It hurts like hell; recovery is long and seldom complete. On top of that it might generate a vacancy in your company whilst you’re escorted out of the building. Be careful out there.

. It hurts like hell; recovery is long and seldom complete. On top of that it might generate a vacancy in your company whilst you’re escorted out of the building. Be careful out there.