Introduction

Aidan Finn's blog post You Pause A Clustered Hyper-V Host And Low Priority VMs are QUICK MIGRATED! explains how virtual machine priorities work during the pausing and draining of a clustered Hyper-V host. VMs are either live migrated or quick migrated depending on the value of the MoveTypeThreshold cluster parameter for resources of the type “Virtual Machine”. By default it's at 2000, which happens to be the value of the virtual machine priority “Medium”. That is why, by default, VMs with the priority “Low” (1000) are quick migrated.

Changing this value alters the default behavior. For example, setting the MoveTypeThreshold value to 1000 using PowerShell

Get-ClusterResourceType "Virtual Machine" | Set-ClusterParameter MoveTypeThreshold 1000

makes sure that only VMs with a priority set to “No Auto Restart” are quick migrated. The low priority machines would then also live migrate, where by default they quick migrate.
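Before and after changing the threshold it helps to check what the cluster actually has configured. A minimal sketch, assuming you run it on a cluster node (or a machine with the Failover Clustering PowerShell tools installed) with sufficient rights:

```powershell
Import-Module FailoverClusters

# Show the current threshold for the "Virtual Machine" resource type
Get-ClusterResourceType "Virtual Machine" | Get-ClusterParameter MoveTypeThreshold

# Put the threshold back to the default of 2000 when you are done testing
Get-ClusterResourceType "Virtual Machine" | Set-ClusterParameter MoveTypeThreshold 2000
```

Try this in a lab first, as the setting applies to every clustered VM in the cluster.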

- Virtual Machines with Priority equal to or higher than the value specified in MoveTypeThreshold will be moved using Live Migration.

- Virtual Machines with Priority lower than the value specified in MoveTypeThreshold will be moved using Quick Migration.
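The two rules above boil down to a single comparison. The function below is only an illustration of that rule, not something the cluster exposes; the name Get-DrainMoveType and its parameters are made up for this sketch.

```powershell
# Hypothetical helper that mirrors the rule described above:
# priority >= threshold -> Live Migration, otherwise Quick Migration.
function Get-DrainMoveType {
    param(
        [Parameter(Mandatory)][int]$Priority,
        [int]$MoveTypeThreshold = 2000   # default threshold per the text
    )
    if ($Priority -ge $MoveTypeThreshold) { 'Live' } else { 'Quick' }
}

# Walk through the four priority values with the default threshold
foreach ($p in 3000, 2000, 1000, 0) {
    '{0,4} -> {1}' -f $p, (Get-DrainMoveType -Priority $p)
}
```

Calling it with -MoveTypeThreshold 1000 shows the changed behavior: priority 1000 then returns Live and only priority 0 returns Quick.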

Virtual Machine Priorities

3000 = High

2000 = Medium

1000 = Low

0 = Virtual machine does not restart automatically.
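These numeric values are what you see and set on the clustered VM role when using PowerShell. A small sketch, where "VM01" is a hypothetical role name you would replace with one of your own:

```powershell
Import-Module FailoverClusters

# Read the current priority of a clustered VM role ("VM01" is a placeholder)
Get-ClusterGroup -Name "VM01" | Format-Table Name, OwnerNode, Priority

# Set it to Low (1000); use 3000, 2000 or 0 for the other priorities
(Get-ClusterGroup -Name "VM01").Priority = 1000
```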

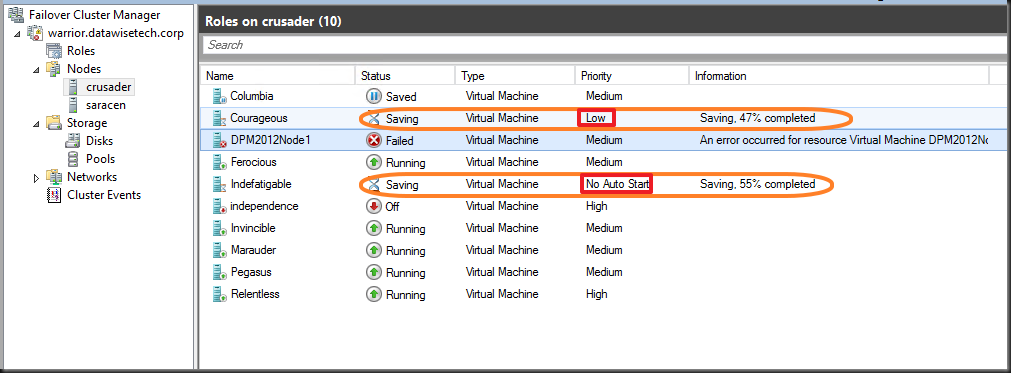

Another Scenario to be aware of to avoid surprises

Note that all this also comes into play in other scenarios. One of them is when you attempt to start a guest that requires more resources than are available on the host. Preemption kicks in and the lower priority virtual machines go into a saved state. If you didn't plan for this it can be a bit of a surprise, causing service interruption. What's also important to know is that preemption kicks in even when there is no chance that putting lower priority virtual machines into a saved state will free enough resources for (all) the VMs you're trying to start. So that service interruption might do you no good. If this is the case, the Low priority VMs come back up when there are sufficient resources left. Do note however that the ones set to “No Auto Restart” remain in a saved state. Look below for an example of how this could happen.
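To get an idea of which guests could be hit by this on a given host, you can list the clustered VMs per node together with their priority. This is just a sketch: the node name "HV-NODE1" is a placeholder, and the BelowMedium column is my own label for VMs with a priority under 2000, which are the ones that got saved in the scenario described here.

```powershell
Import-Module FailoverClusters

$node = "HV-NODE1"   # hypothetical node name, replace with your own

# List the clustered VMs currently owned by that node, lowest priority first
Get-ClusterGroup |
    Where-Object { $_.GroupType -eq 'VirtualMachine' -and $_.OwnerNode.Name -eq $node } |
    Sort-Object Priority |
    Select-Object Name, Priority, State,
        @{ Name = 'BelowMedium'; Expression = { $_.Priority -lt 2000 } }
```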

How does this happen?

Let's say you have a brand new VM that has been given 16GB of RAM as requested by the business. When that large memory guest starts, it fails because there are not enough memory resources available on the host, which only has 16GB. But as it attempts to start, the need for memory resources is detected and preemption comes into play. The guests with “Low” and “No Auto Restart” priorities are put into a saved state, as the large memory VM has the default Medium priority and the MoveTypeThreshold is at the default of 2000. You need to be aware of this behavior. Preemption kicks in, and the lower priority machines are still saving when the start of the large memory VM has already failed, as saving them couldn't free enough resources anyway.
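A quick sanity check before starting a large memory guest can help avoid this kind of surprise. The sketch below is a rough approximation only: Test-VMFitsHost is a made-up helper, the VM and host names are placeholders, and the free physical memory reported by the parent partition is used as a stand-in for what Hyper-V can really hand out to a guest.

```powershell
# Hypothetical helper: does the startup memory fit in the free memory?
function Test-VMFitsHost {
    param(
        [Parameter(Mandatory)][long]$StartupBytes,
        [Parameter(Mandatory)][long]$FreeBytes
    )
    $StartupBytes -le $FreeBytes
}

# Example against a live host (placeholder names, uncomment to use):
# $vm = Get-VM -Name "BigVM" -ComputerName "HV-NODE1"
# $os = Get-CimInstance Win32_OperatingSystem -ComputerName "HV-NODE1"
# FreePhysicalMemory is reported in kilobytes, MemoryStartup in bytes
# Test-VMFitsHost -StartupBytes $vm.MemoryStartup -FreeBytes ($os.FreePhysicalMemory * 1KB)

# Offline illustration: a 16GB guest against 12GB of free memory
Test-VMFitsHost -StartupBytes 16GB -FreeBytes 12GB
```

If the check returns False, starting the guest is likely to fail and, as described above, may still push lower priority guests into a saved state along the way.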

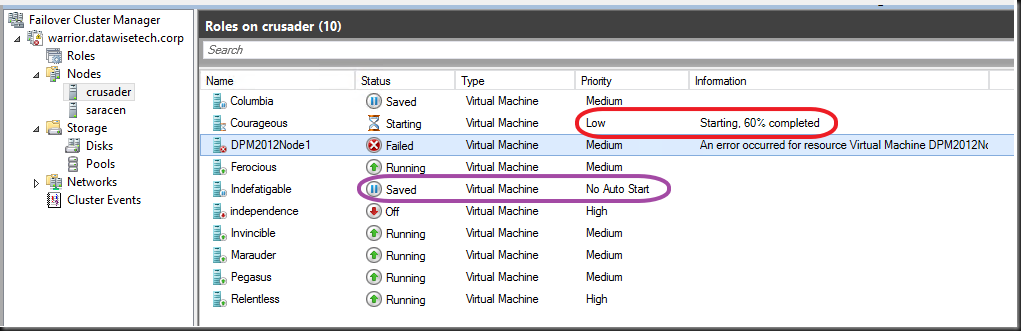

The good news, as you can see below, is that the low priority guest starts again after starting the large memory guest has failed. There is no use keeping it saved, as it can run and service customers. So the service interruption for this VM is limited, but it does happen. Please also note that the guest set to No Auto Restart doesn't come up again, as its priority status says exactly that. So this one becomes collateral damage.

As you can see, it's important to know how priorities and preemption work together and behave. It's also good to know that changing the threshold comes into play in more situations than just pausing & draining a host or during a failover. While the cluster will try its best to keep as many VMs up and running as possible, you might see some unintended consequences under certain conditions. A good understanding of this can prevent you from being bitten here. So build a small, cheap lab so you can play with stuff. This helps you gain a better understanding of how features work and behave. If you want to play some more, set the priority of the memory hungry VM to High and you'll see even more interesting things happen.