Introduction

With Windows Server 2012 R2 (Preview) we can leverage SMB to do Live Migrations. That means we can now offload the process to the NICs if they support RDMA, save on CPU cycles and potentially get VMs moved a lot faster without impacting the performance of running VMs on the involved hosts. Perhaps it’s even faster than over TCP/IP. Sounds great, so let’s do some testing.

- We have a dual port 10Gbps Mellanox RDMA card (RoCE) in each host. One pair of ports is interconnected via a direct attach cable. The other pair is connected over a Force10 S4810 switch. We’re using the in-box Windows Server 2012 R2 Preview drivers for everything, as we have found that other drivers install improperly (or not at all) on this release and cause issues.

- We are using one VM running Windows Server 2012 RTM with upgraded Integration Services components. This VM has 4 vCPUs and 55GB of fixed memory assigned. For this test we had no workload running in the VM. The servers are standard DELL PowerEdge R720 kit running the Windows Server 2012 R2 Preview bits.

Results

No Performance Tweaking

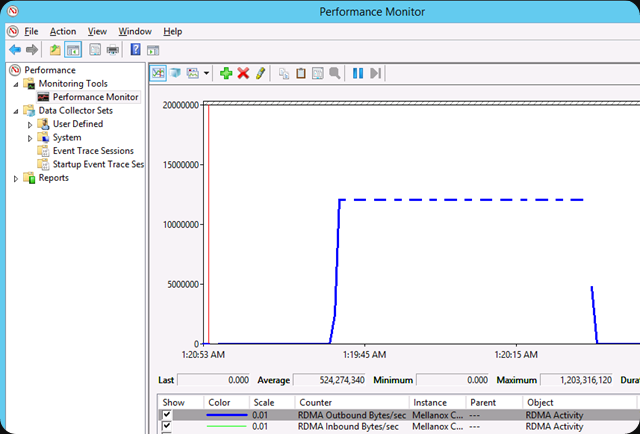

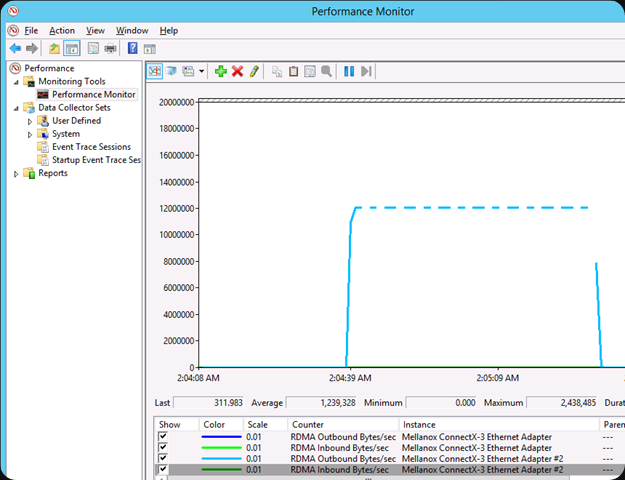

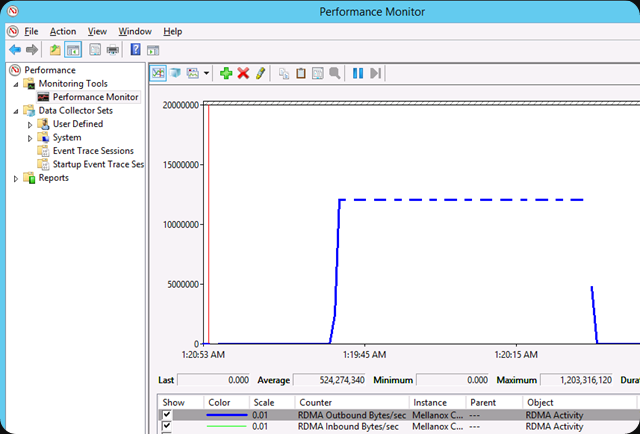

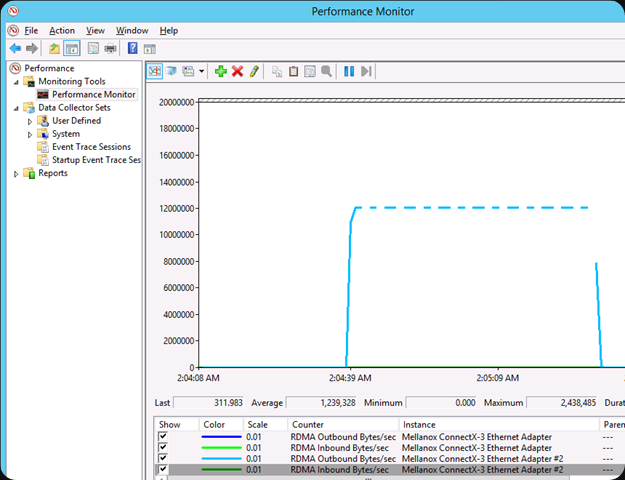

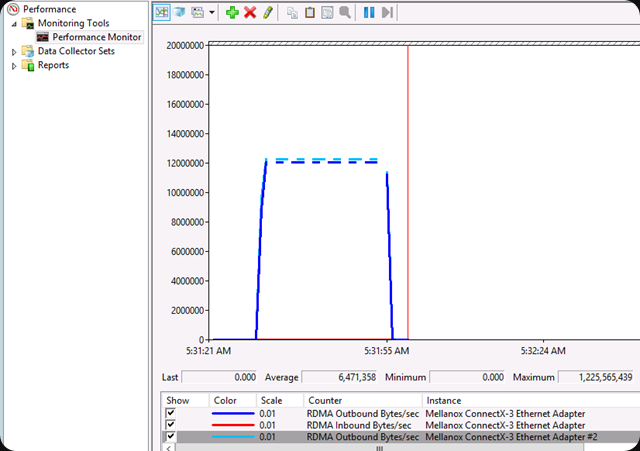

Live Migration over RDMA in action. Here we are using one 10Gbps RoCE RDMA NIC and moving the VM via the NIC port that goes over the S4810 switch.

As you can see, the entire process took 74 seconds. RDMA did not kick in until 19 seconds had passed since the start.

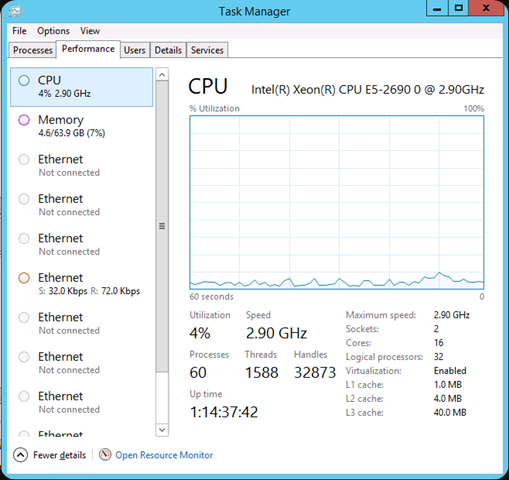

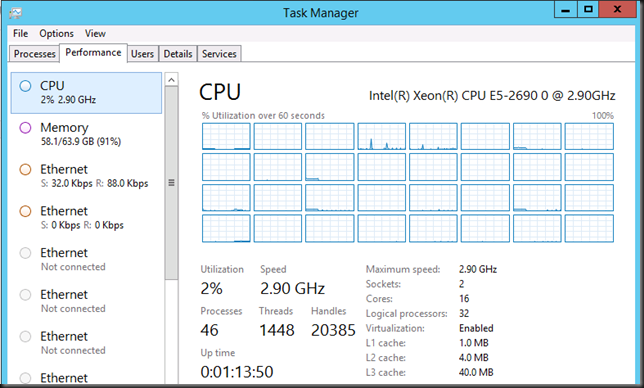

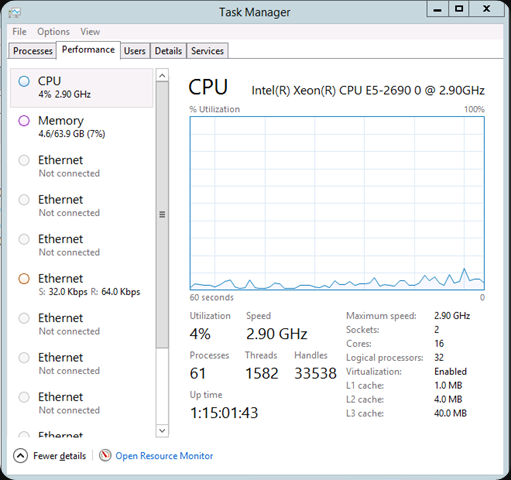

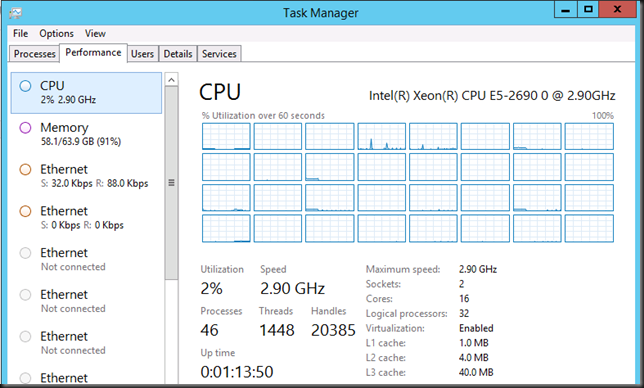

The CPU load remains low, which is where you’ll find the biggest benefit of RDMA with live migrations.

Now let’s put two RDMA RoCE ports into play and see what that does for us. We now Live Migrate the 55GB memory VM in 52-54 seconds. Not bad. Again we saw over 20 seconds pass before RDMA kicked in.

Again we see that CPU usage remains low. This is just a quick screenshot. On a Hyper-V node you’ll need to dive into Performance Monitor to get some real info.

Let’s repeat this exercise and see what happens if we move the traffic over the NIC ports that are directly attached. That will give us an indication of how well the switch is configured. Configuring RoCE DCB features like PFC/ETS is not exactly a well documented process at the moment, and I often feel like a magician’s apprentice.

Once more we see that it takes about 20 seconds for RDMA to kick in, and the total time rises to 79 seconds. It actually fluctuates between 74 and 79 seconds.

The CPU load was low again. So both paths seem to perform comparably.

Live Migrations over SMB seem to run faster using two RDMA ports, but not twice as fast. These are the preview bits, so nothing is definitive yet. And sorry, I cannot do 40Gbps or 56Gbps InfiniBand tests. Unless you want to donate the gear and pay for the power, time & reporting.
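To put a number on "faster but not twice as fast", here is a minimal Python sketch of the arithmetic. It uses only the timings reported above (74 seconds with one port, 52-54 seconds with two). Taking the midpoint of the two-port range and the function name `speedup` are my own choices for illustration.

```python
def speedup(baseline_s: float, improved_s: float) -> float:
    """Return how many times faster the improved run is than the baseline."""
    return baseline_s / improved_s


# Timings taken from the untuned runs described above.
one_port_s = 74.0
two_port_s = (52.0 + 54.0) / 2  # assumption: midpoint of the observed 52-54 s range

s = speedup(one_port_s, two_port_s)
# Perfect scaling from one to two ports would give a speedup of 2.0.
efficiency = s / 2

print(f"speedup: {s:.2f}x, scaling efficiency: {efficiency:.0%}")
```

Keep in mind that the roughly 20 seconds before RDMA kicks in is a fixed cost in both runs, so it weighs more heavily on the shorter two-port migration.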


Max Performance Tweaking

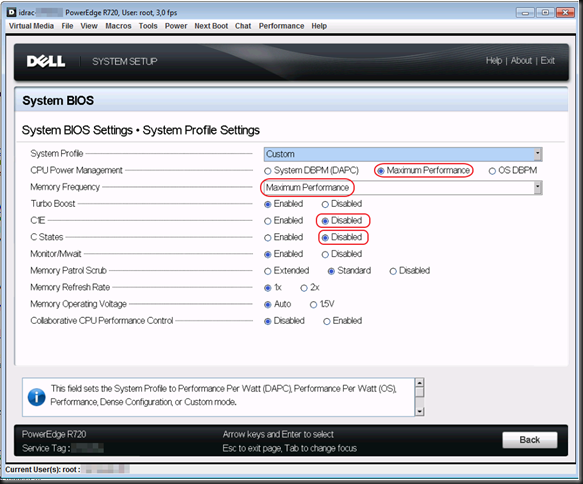

As my readers know very well, I tweak my nodes for best performance. The energy savings (power, cooling) have to come from making the most out of every node and shutting nodes down when they are not needed (Dynamic Optimization/Power Optimization in System Center). I still have a standing order to take away any physical limitations possible for the business.

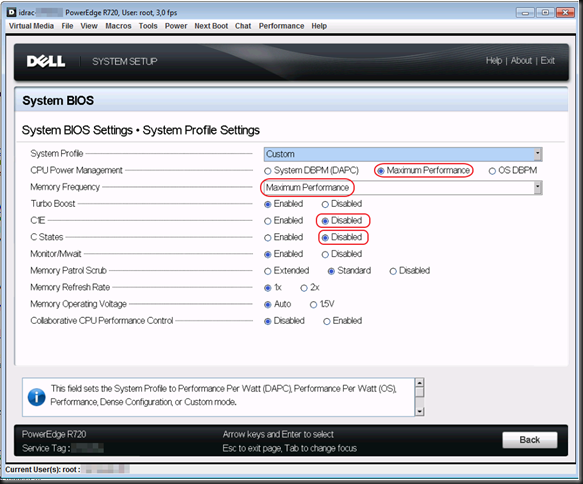

While Windows Server 2012 (R2) has made tremendous strides in making better use of the available bandwidth of 10Gbps pipes out of the box, I still dive into the BIOS to turn off the C/C1E states and set the CPU Power Management and Memory Frequency to Maximum Performance. Have a look at the blog post "Still Need To Optimizing Power Settings On DELL 12th Generation Servers For Lightning Fast Hyper-V Live Migrations?" on how to do this with DELL Generation 12 servers. It also contains a link to the guidance for older generations.

As you can imagine, I was quite interested to see if these settings affect RDMA as well. So let’s have a look with these settings here:

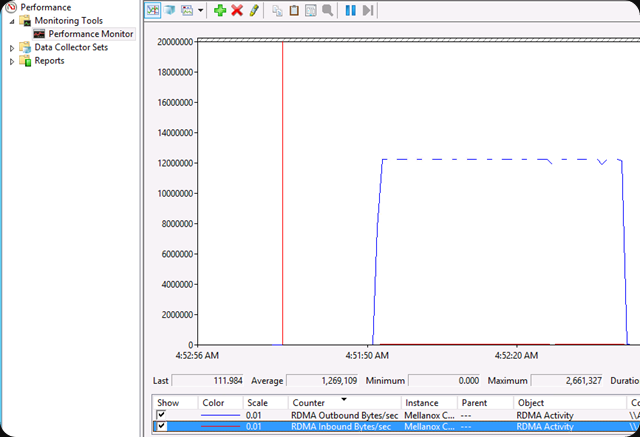

One RDMA NIC used (Mellanox, RoCE, 10Gbps)

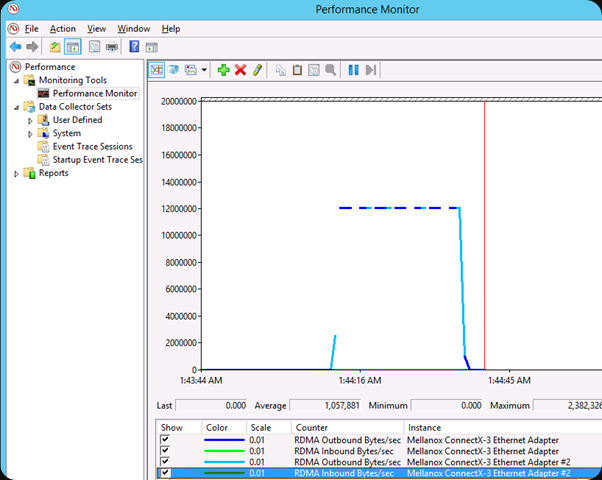

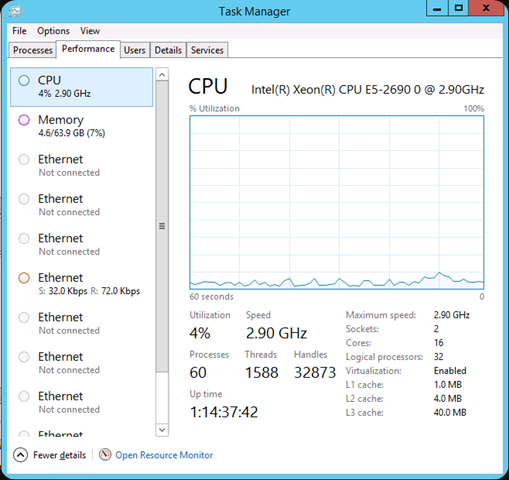

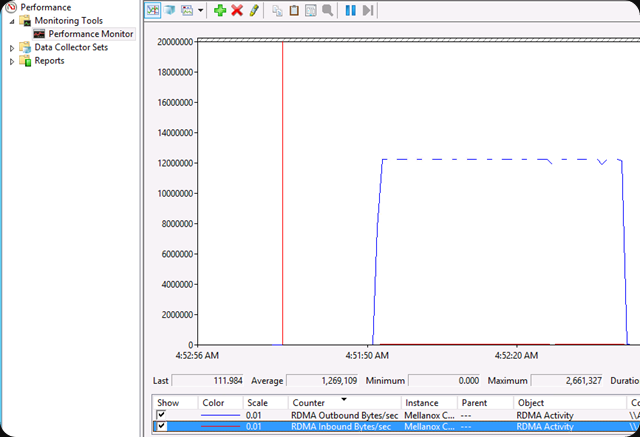

54 seconds for that 55GB memory (fixed) VM. We also note that the delay before RDMA kicks in has dropped from 19-20 seconds to 3-4 seconds, which is quite interesting. Basically this makes one tuned RDMA NIC as fast as two RDMA NICs without performance tweaking.

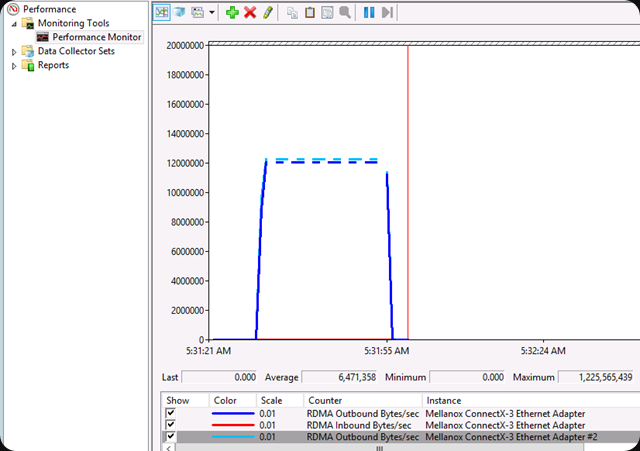

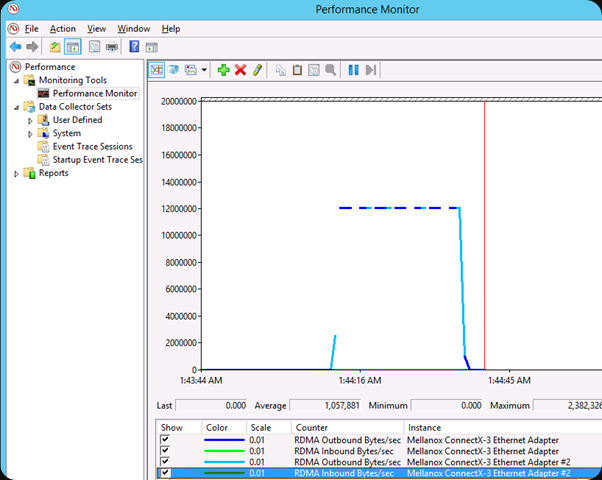

Two RDMA NICs used (Mellanox, RoCE, 10Gbps)

30 seconds flat, repeatedly, for that 55GB memory (fixed) VM. Again we note that the delay before RDMA kicks in is down from 19-20 seconds to 3-4 seconds. So this is about 45% better than the two NIC result without the power optimization.
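As a rough sanity check on these numbers, the Python sketch below converts each reported migration time into an effective throughput and computes the improvement from tuning. It rests on loud assumptions: the full 55GB is copied exactly once (no re-sent dirty pages, which is plausible for an idle VM but not measured here), 1GB is 10^9 bytes, and the 52-54 second range is represented by 53 seconds. The function names are mine.

```python
VM_MEMORY_GB = 55  # fixed VM memory from the test setup; assumes 1 GB = 10**9 bytes


def effective_gbps(memory_gb: float, seconds: float) -> float:
    """Average throughput in Gbit/s if the memory is copied exactly once."""
    return memory_gb * 8 / seconds


def percent_faster(before_s: float, after_s: float) -> float:
    """Reduction in migration time, as a percentage of the original time."""
    return (before_s - after_s) / before_s * 100


# Total migration times in seconds as reported in this post.
runs = {
    "1 port, untuned": 74,
    "2 ports, untuned": 53,  # assumption: midpoint of the 52-54 s range
    "1 port, tuned": 54,
    "2 ports, tuned": 30,
}

for label, seconds in runs.items():
    print(f"{label:18s} {seconds:3d} s  ~{effective_gbps(VM_MEMORY_GB, seconds):.1f} Gbit/s")

gain = percent_faster(runs["2 ports, untuned"], runs["2 ports, tuned"])
print(f"two ports, tuned vs untuned: {gain:.0f}% less time")
```

These are averages over the whole migration, including the start-up delay before RDMA kicks in, so the rate during the actual RDMA transfer phase is higher than what this prints.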

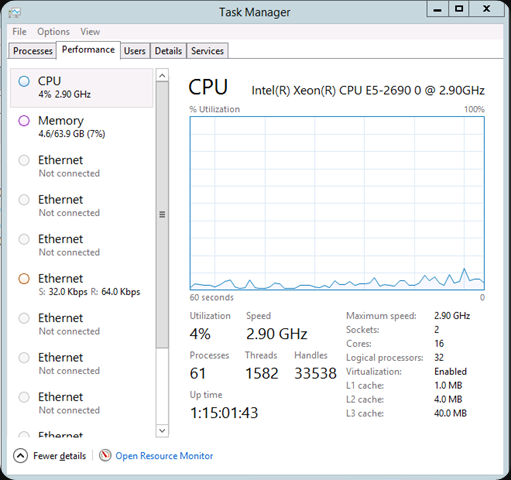

What is the CPU doing during all this? Well, it is taking care of the VM load instead of spending cycles on network interrupts. Again, this is a quick screenshot. On a Hyper-V node you’ll need to dive into Performance Monitor to get some real info.


By now you must all be eager to see how this compares against Live Migration over TCP/IP, Multichannel and with Compression. That’s material for other blogs.

Why am I doing this?

We need to get the most out of every € or $ we spend. It’s not that we don’t have any cash left, but why buy more servers & higher end gear to get better results when the answer lies in the correct configuration & better choices when designing a solution? It’s going to be a while before this knowledge becomes mainstream and widely available. Years probably, so why wait? It takes time to experiment, but the results & ROI are great. Why spend another 50.000 to 100.000 Euro on servers, 10Gbps cards & switch ports if you don’t need to? Count the cost to host, power & cool them and you’ll see that this time is an investment. You could also decide to leverage the cloud, but wasting VM cycles there also costs money you have better uses for, so testing will be needed there as well.