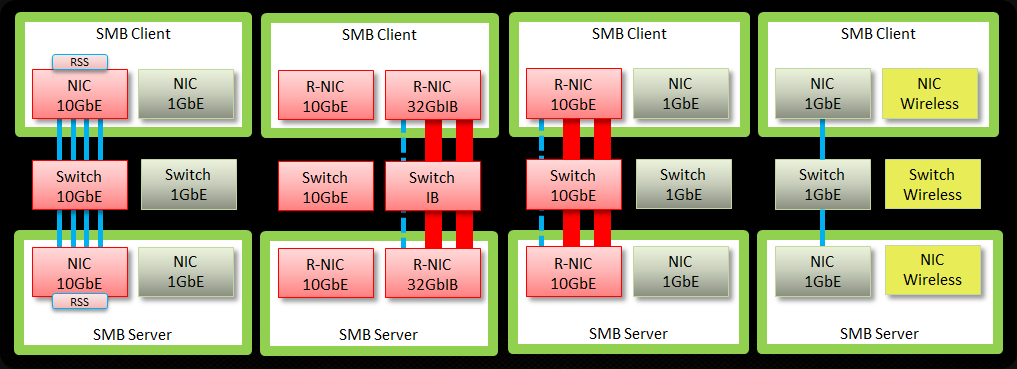

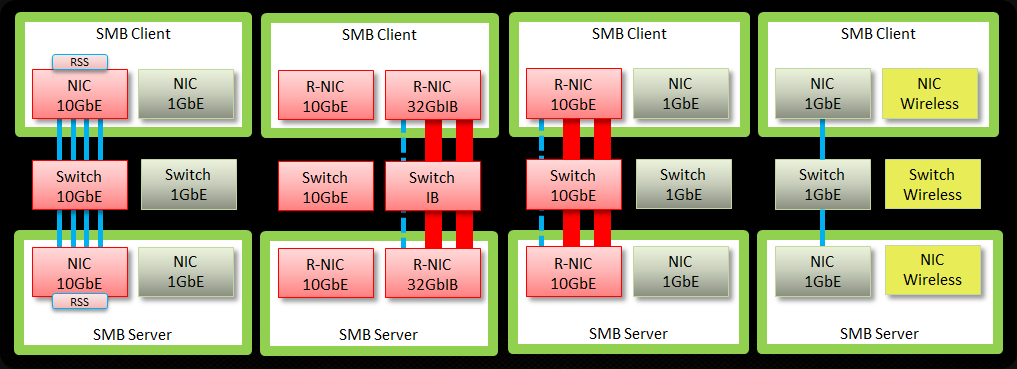

Most of you may remember this slide on SMB Multichannel auto-configuration from one of Jose Barreto's many presentations:

- Auto configuration looks at NIC type/speed => Same NICs are used for RDMA/Multichannel (doesn’t mix 10Gbps/1Gbps, RDMA/non-RDMA)

- Let the algorithms work before you decide to intervene

- Choose adapters wisely for their function

You can fine-tune things if and when needed (and only when that is really the case), but first let's look at this feature in action.

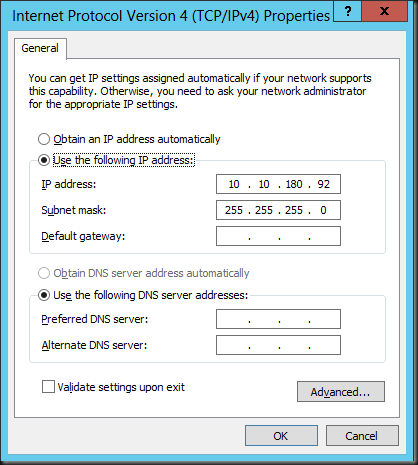

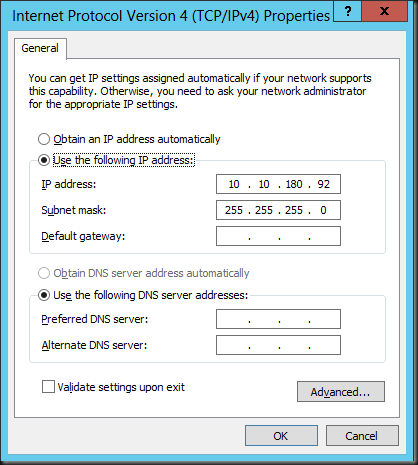

So let's look at this in real life. For this test we have two Intel X520 DA 10Gbps ports using 10.10.180.8X/24 IP addresses and two Mellanox 10Gbps RDMA adapters with 10.10.180.9X/24 IP addresses. No teaming is involved, just multiple NIC ports. Note that these IP addresses are on a different subnet than the servers' LAN. Basically, only the servers can communicate over them: they have no gateway and no DNS servers, and as such they are not registered in DNS either (life is easy for simple file sharing).

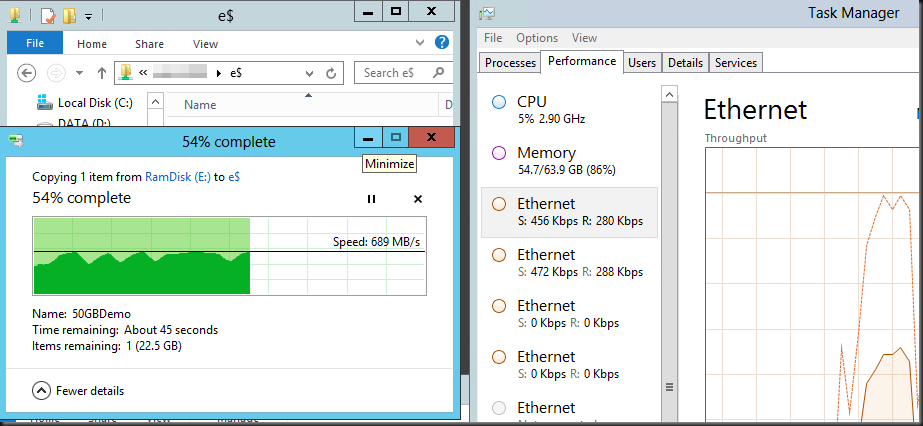

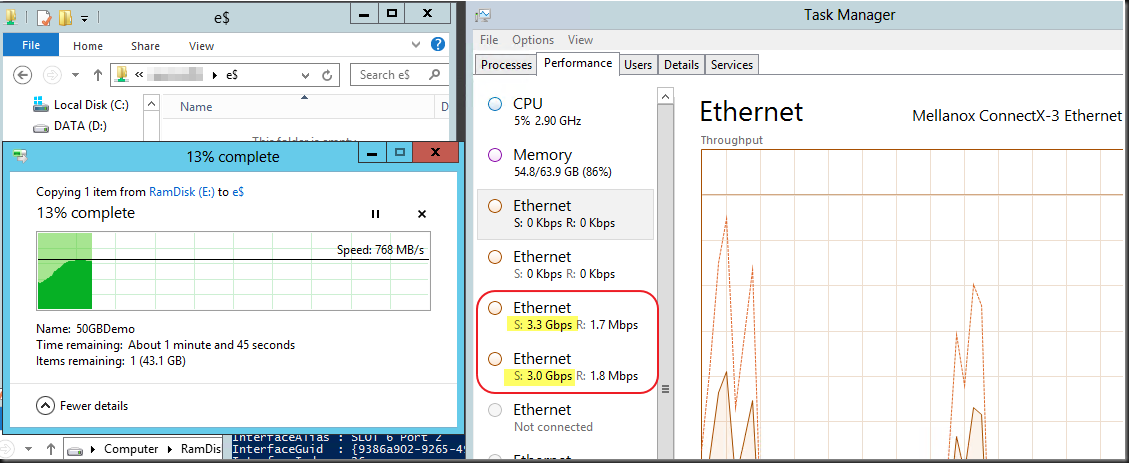

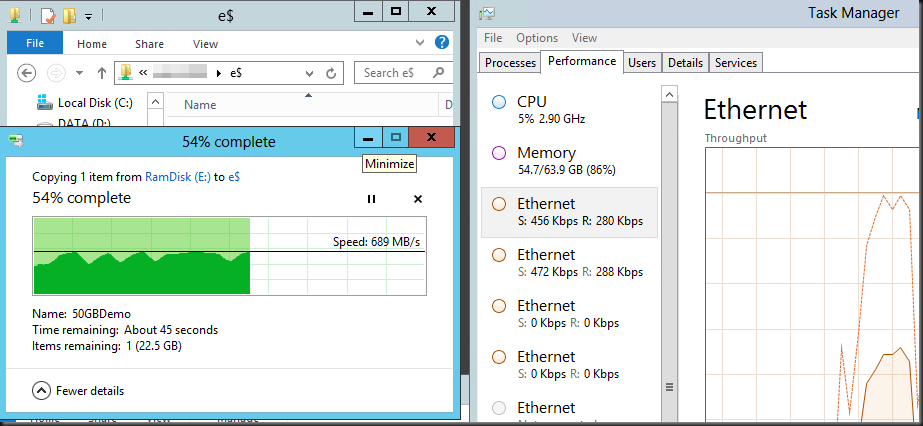

Let's copy a 50 GB fixed-size VHDX file from Server1 to Server2 using the DNS name of the target host (obscured in the screenshots). This means the name resolves via DNS to the host's LAN IP address, 10.10.100.92/16. In the screenshot below you can see that the two RDMA-capable cards are put into action; the servers are not using the 1Gbps LAN connection. Multichannel evaluated these options:

- One 1Gbps RSS-capable link

- Two 10Gbps RSS-capable links

- Two 10Gbps RDMA-capable links

Multichannel concluded that the RDMA cards are the best option available and, as we have two of them, it uses both. In other words, it works exactly as described.
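To make the selection logic above concrete, here is a simplified model of it. This is a hypothetical sketch, not the actual Windows SMB client code: it assumes a plain ranking (RDMA first, then RSS, then link speed) and that all interfaces sharing the top rank are used together, so different types and speeds are never mixed. The `Nic` type and `pick_interfaces` function are made up for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nic:
    """Hypothetical description of one network interface."""
    name: str
    speed_gbps: int
    rss: bool = False
    rdma: bool = False


def rank(nic: Nic) -> tuple:
    # Assumed ordering: RDMA beats non-RDMA, RSS beats non-RSS,
    # and within the same capability class a faster link wins.
    return (nic.rdma, nic.rss, nic.speed_gbps)


def pick_interfaces(nics: list) -> list:
    """Return every interface that shares the best rank.

    Only the top group is used, so RDMA ports are never combined
    with plain 10Gbps ports, and 10Gbps never with 1Gbps.
    """
    if not nics:
        return []
    best = max(rank(n) for n in nics)
    return [n for n in nics if rank(n) == best]


# The test setup from this post.
lan = Nic("LAN", 1, rss=True)
x520 = [Nic("X520 port 1", 10, rss=True), Nic("X520 port 2", 10, rss=True)]
mellanox = [Nic("Mellanox port 1", 10, rdma=True),
            Nic("Mellanox port 2", 10, rdma=True)]

print([n.name for n in pick_interfaces([lan, *x520, *mellanox])])
# -> ['Mellanox port 1', 'Mellanox port 2']

# With the Mellanox ports disabled, the two X520 ports form the best group.
print([n.name for n in pick_interfaces([lan, *x520])])
# -> ['X520 port 1', 'X520 port 2']
```

In this model the destination address plays no part in the choice, which is consistent with what the next test shows when the copy targets an X520 IP address directly.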

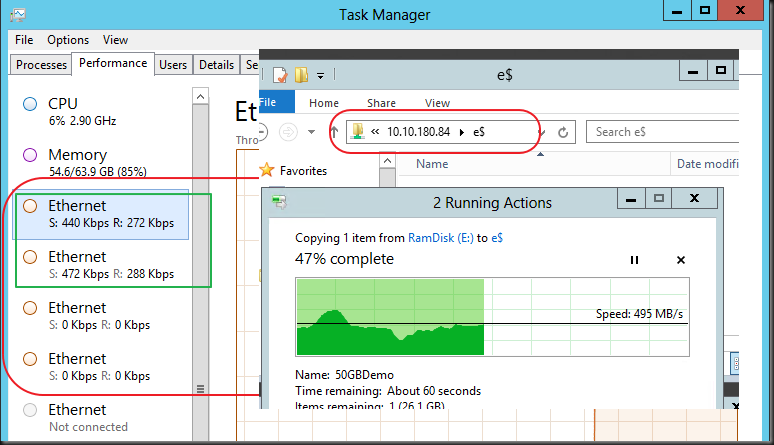

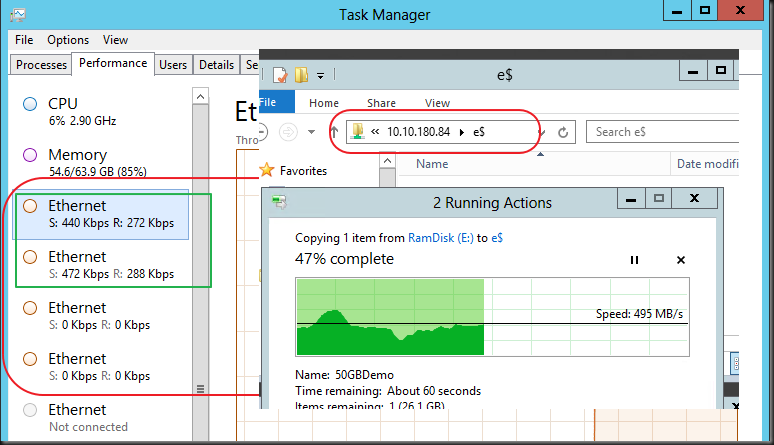

Even if we try to bypass DNS by copying the files explicitly via the IP address (10.10.180.84) assigned to the Intel X520 DA cards, Multichannel detects that two better, RDMA-capable cards are available and, as you can see, uses the same NICs as in the previous demo. Nifty, isn't it?

If you want to see the other NICs in action, we can disable the Mellanox cards and then Multichannel will choose the two X520 DA cards. That's fine for testing, but in real life you need a better solution when you have to define manually which NICs can be used. This is done using PowerShell (take a look at Jose Barreto's blog post "The basics of SMB PowerShell, a feature of Windows Server 2012 and SMB 3.0" for more info).


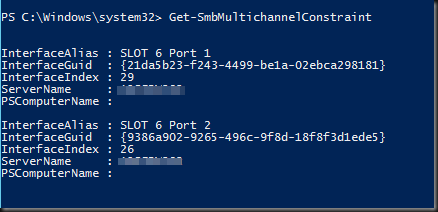

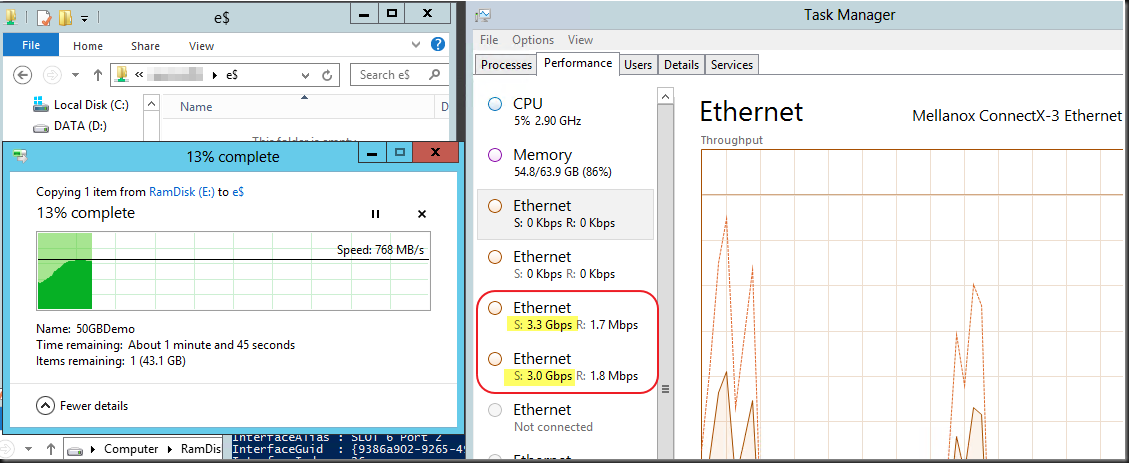

```powershell
New-SmbMultichannelConstraint -ServerName SERVER2 -InterfaceAlias "SLOT 6 Port 1", "SLOT 6 Port 2"
```

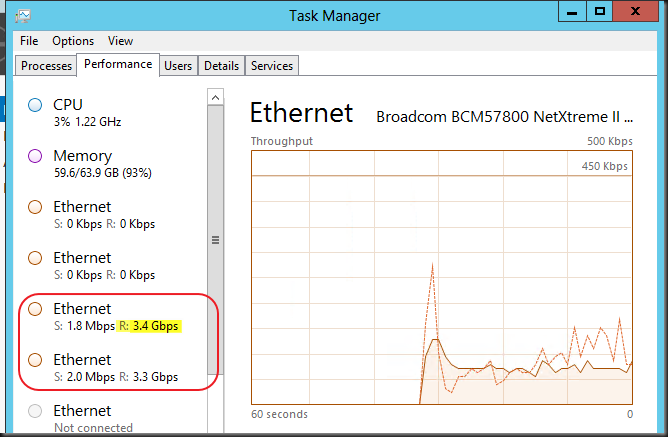

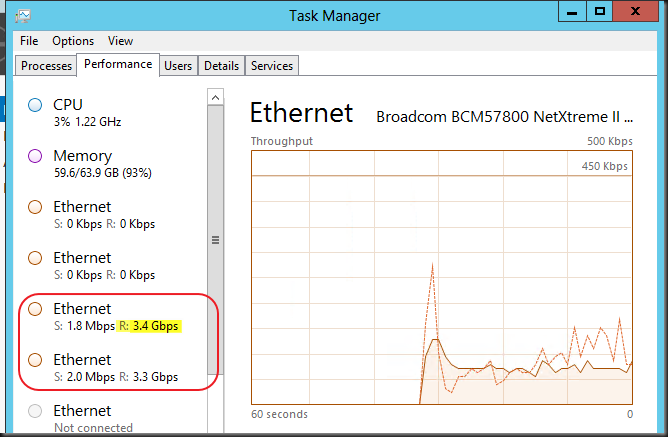

This tells the client (Server1) that it may only use these two NICs, which in this example are the two Intel X520 DA 10Gbps ports, to access Server2. In other words, you configure on the client which interfaces it uses for SMB 3.0 traffic to a given server. Note the difference in send/receive traffic between RDMA and native 10Gbps.
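Conceptually, a constraint acts as a per-server allow list on the client: when one exists for the target server, only the listed interfaces are candidates, and the normal selection then applies to what remains. The sketch below models that idea under those assumptions. The `constraints` dictionary and `usable_interfaces` helper are hypothetical and say nothing about how Windows actually stores constraints, and the Mellanox and LAN aliases are invented; only the "SLOT 6" aliases come from this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nic:
    alias: str        # interface alias, e.g. "SLOT 6 Port 1"
    speed_gbps: int
    rdma: bool = False


def usable_interfaces(server: str, nics: list, constraints: dict) -> list:
    """Simplified model of which client NICs SMB may use to reach `server`.

    `constraints` maps a server name to the set of allowed interface
    aliases; servers without an entry are unconstrained (assumption).
    """
    allowed = constraints.get(server)
    candidates = list(nics) if allowed is None else [
        n for n in nics if n.alias in allowed]
    if not candidates:
        return []
    # Keep only the best group of what is left (RDMA first, then speed).
    best = max((n.rdma, n.speed_gbps) for n in candidates)
    return [n for n in candidates if (n.rdma, n.speed_gbps) == best]


nics = [
    Nic("LAN", 1),                            # hypothetical alias
    Nic("SLOT 6 Port 1", 10),                 # Intel X520 DA (from the post)
    Nic("SLOT 6 Port 2", 10),
    Nic("Mellanox port 1", 10, rdma=True),    # hypothetical alias
    Nic("Mellanox port 2", 10, rdma=True),
]

# Models the effect of New-SmbMultichannelConstraint for SERVER2.
constraints = {"SERVER2": {"SLOT 6 Port 1", "SLOT 6 Port 2"}}
print([n.alias for n in usable_interfaces("SERVER2", nics, constraints)])
# -> ['SLOT 6 Port 1', 'SLOT 6 Port 2']

# Roughly what Remove-SmbMultichannelConstraint does: RDMA is used again.
constraints.pop("SERVER2")
print([n.alias for n in usable_interfaces("SERVER2", nics, constraints)])
# -> ['Mellanox port 1', 'Mellanox port 2']
```

Keying the allow list by server name reflects that the constraint in this post is set with `-ServerName`, so traffic to other servers would still follow the automatic selection in this model.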

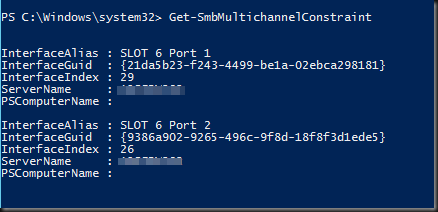

On Server1, the client, you see this:

On Server2, the server, you see this:

This is indeed the constraint we set up, as we can verify with:

```powershell
Get-SmbMultichannelConstraint
```

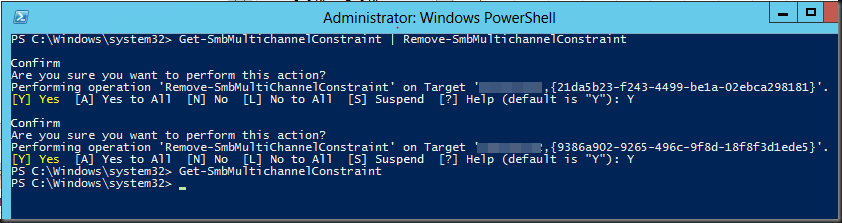

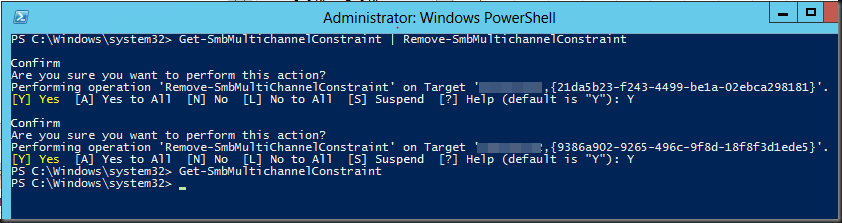

We’re done playing so let’s clean up all the constraints:

```powershell
Get-SmbMultichannelConstraint | Remove-SmbMultichannelConstraint
```

With this technology available, it's now up to the storage industry to provide the needed capacity and IOPS in a far more affordable way. Storage Spaces has knocked on their door; that was the wake-up call. In an environment where we throw lots of data around, we just love SMB 3.0.
