Hans Vredevoort posted a nice blog entry recently on the defragmentation of Clustered Shared Volumes and asked for some feedback & experiences on this subject. He describes the process used and steps taken to defrag your CSV storage and notes that there may be third party products that can handle this automatically. Well yes, there are. Two of the most know defragmentation products support Cluster Shared Volumes and automate the process described by Hans in his blog. Calvin made a very useful suggestion to use Redirected Access instead of Maintenance mode. This is what the commercial tools like Raxco PerfectDisk and Diskeeper also do.

As the defragmentation of Cluster Shared Volumes requires them to be put into Redirected Access you should not have “always on” defragmentation running in a clustered Hyper-V node. Sure the software will take care of it all for you but the performance hit is there and is considerable. I might just use this point here as yet another plug for 10 Gbps networks for CSV. Also note that the defragmentation has to run on the current owner or coordinator node. Intelligent defragmentation software should know what node to run the defrag on, move the ownership to the desired node that is running the defragmentation or just runs it on all nodes and skips the CSVs storage it isn’t the coordinator for. The latter isn’t that intelligent. John Savill did a great blog post on this before Windows 2008 R2 went RTM for Windows IT Pro Magazine where he also uses PowerShell scripts to move the ownership of the storage to the node where he’ll perform the defragmentation and retrieves the GUID of the disk to use with the defrag command. You can read his blog post here and see how our lives have improved with the commands he mentions would be available in the RTM version of W2K8R2 (Repair-ClusterSharedVolume with –defrag option).

For more information on Raxco PerfectDisk you can take a look at the Raxco support article, but the information is rather limited. You can also find some more information from Diskeeper on this subject here. I would like to add that you should use defragmentation intelligently and not blindly. Do it with a purpose and in a well thought out manner to reap the benefits. Don’t just do it out of habit because you used to do it in DOS back in the day.

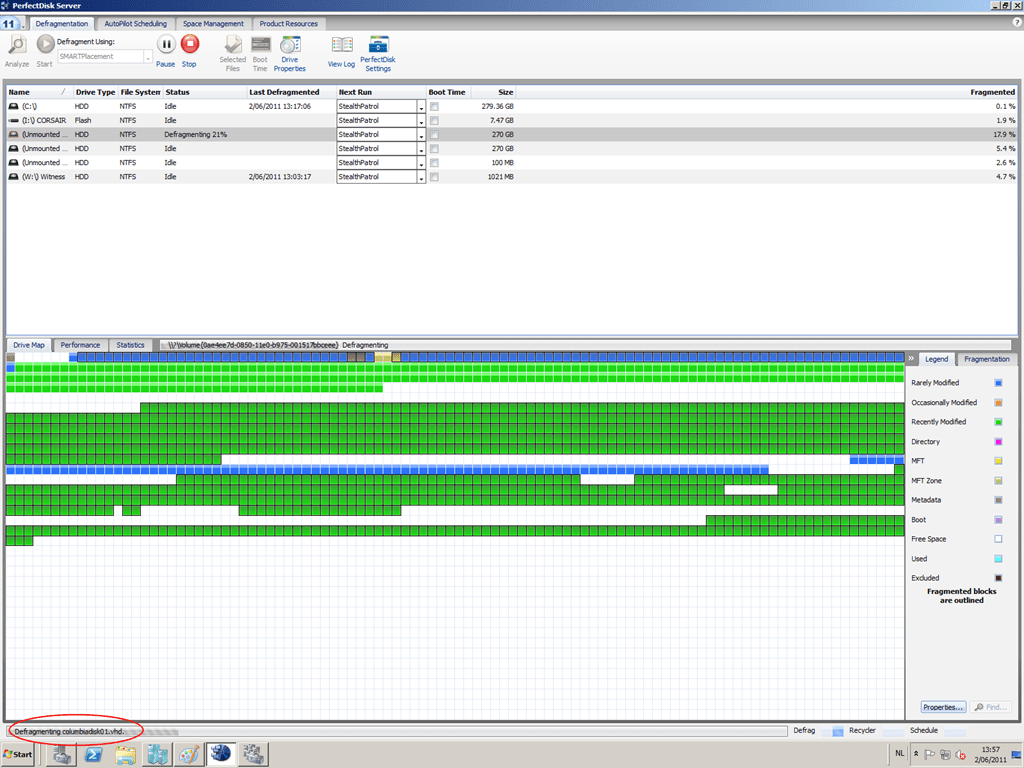

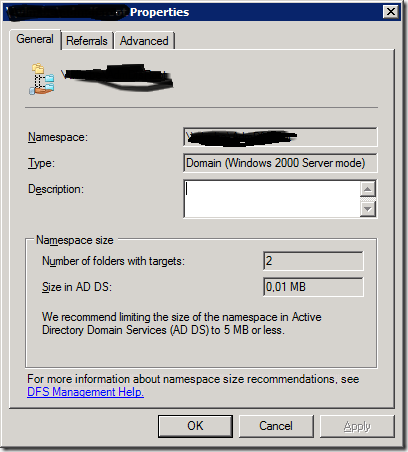

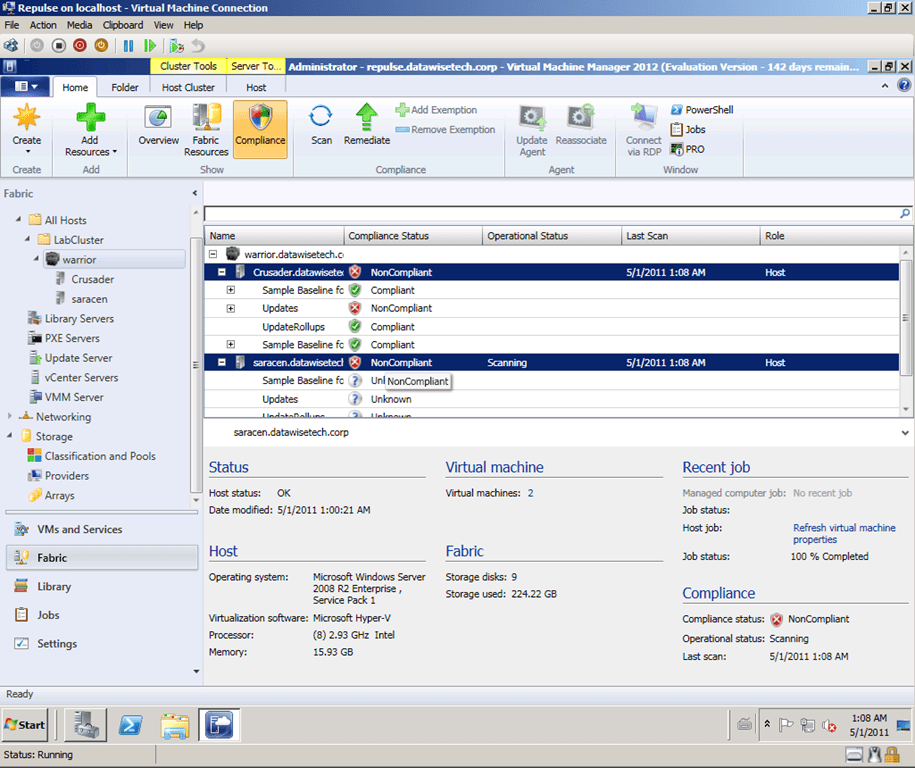

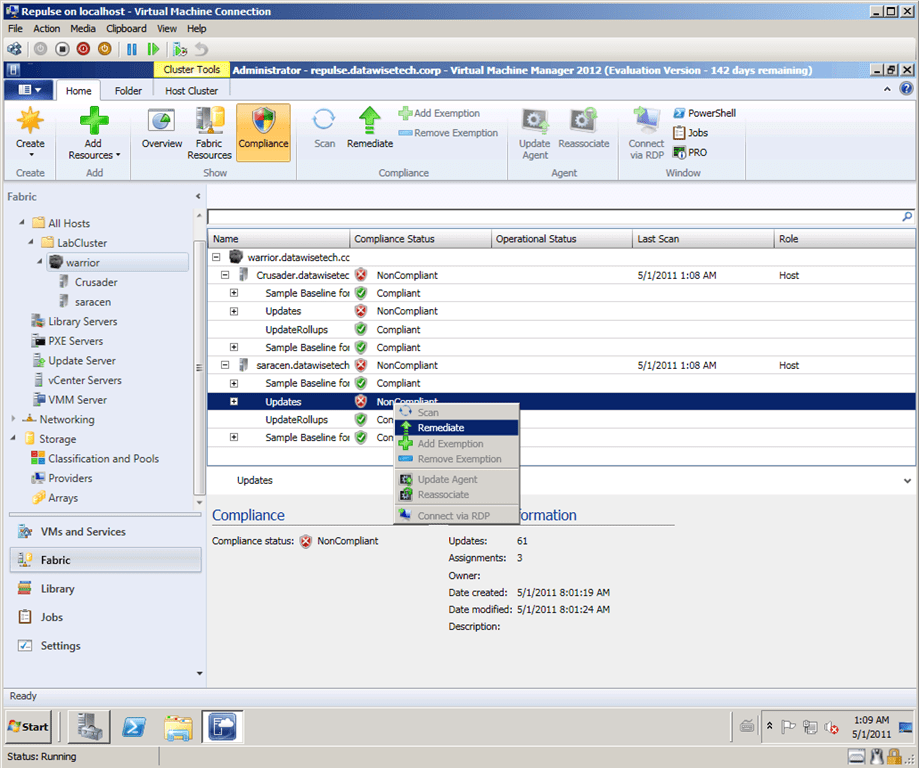

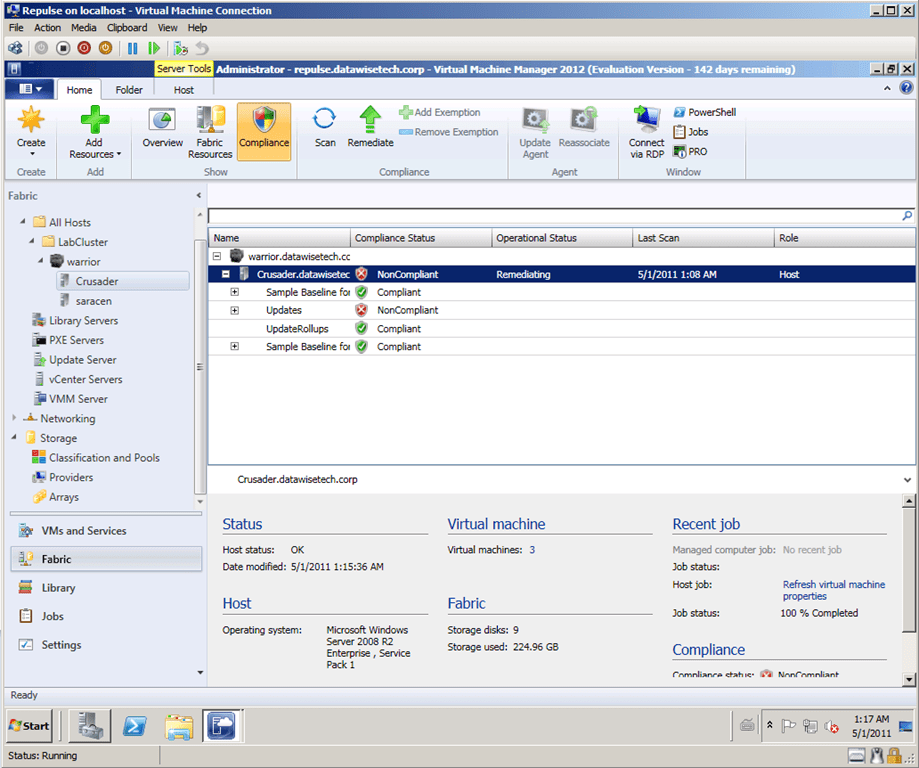

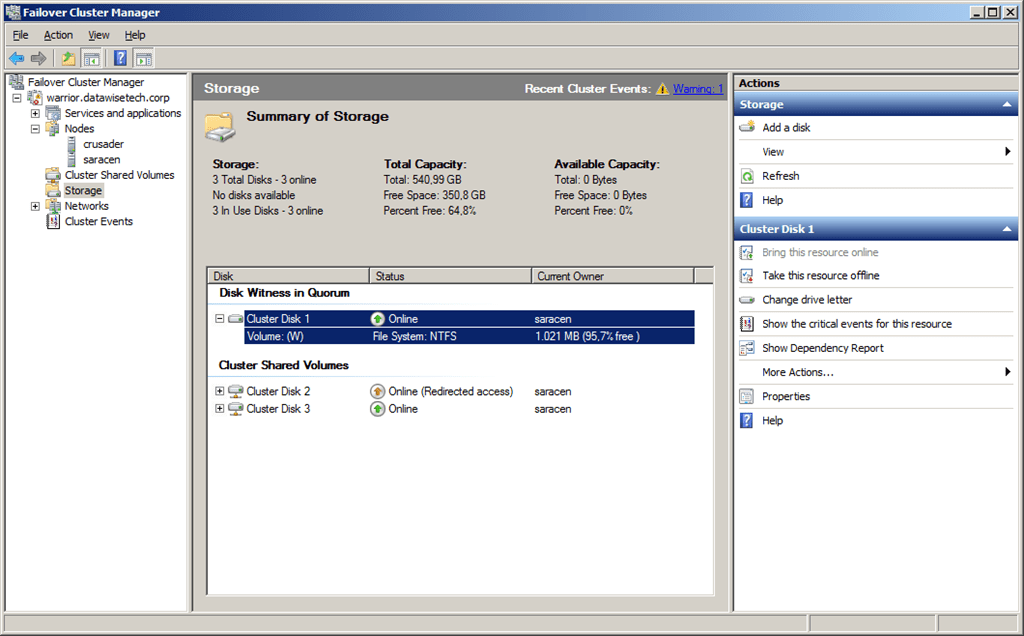

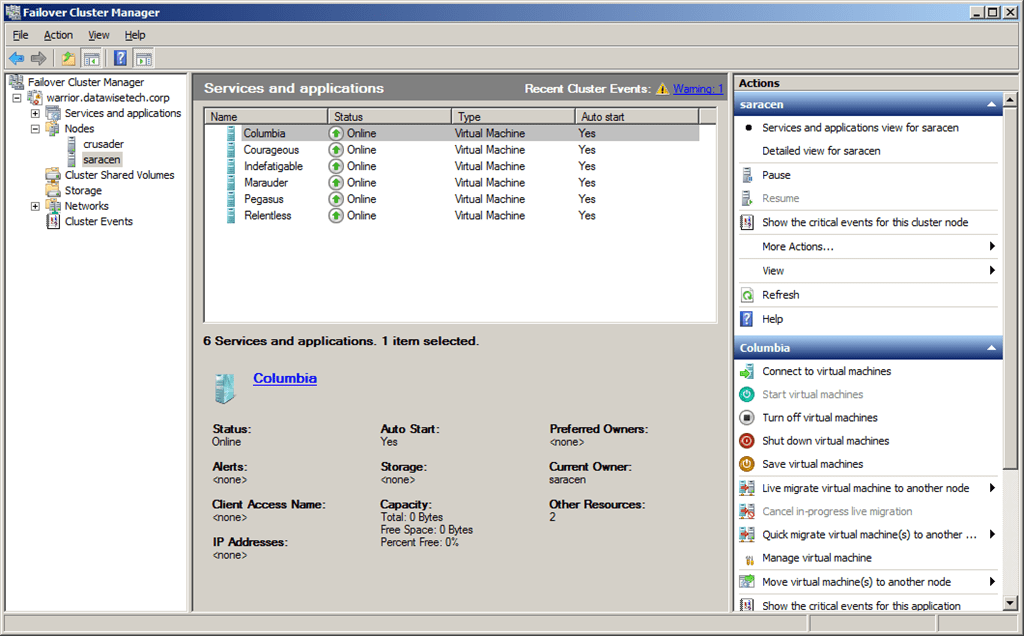

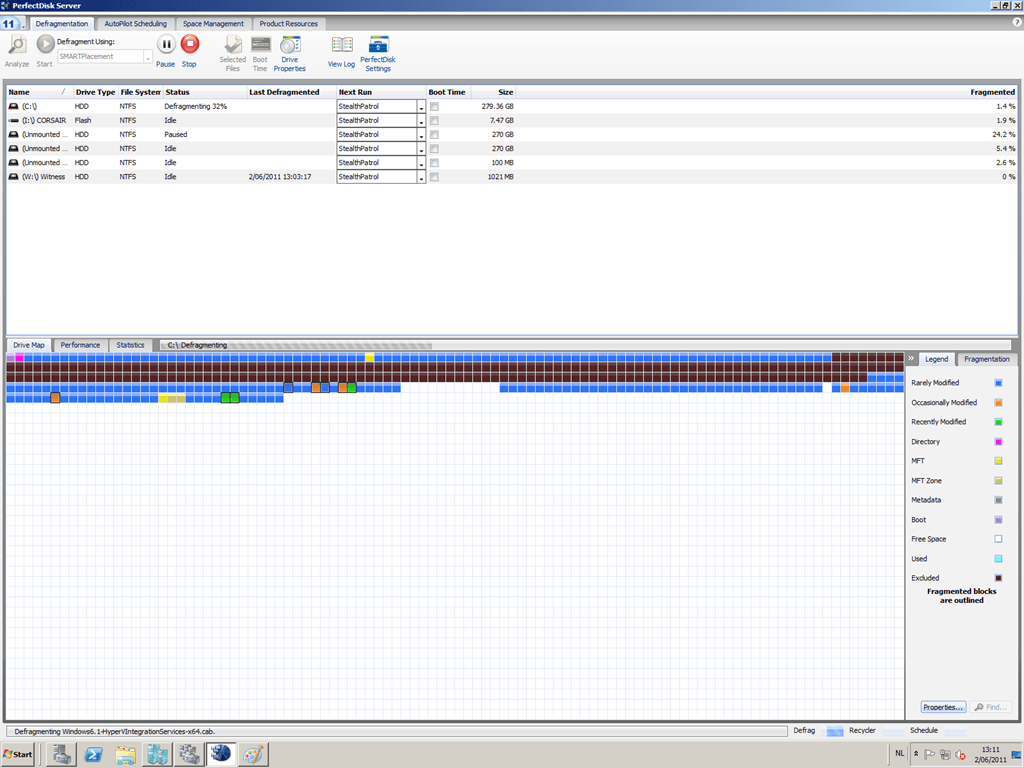

To conclude I’ll leave you with some screenshots from my lab, take during the defragmentation of a Hyper-V cluster node.

As you can see the CSV storage is put into redirected access:

And our machines remain online and available:

This is because we started to defrag it on the Hyper-V cluster node:

Here you can see that the guest files are indeed being defragmented, in this case, the VHD for the guest server Columbia (red circle at the bottom):

Here you can see that the guest files are indeed being defragmented, in this case, the VHD for the guest server Columbia (red circle at the bottom):