I was recently configuring a Windows Server 2012 File server cluster to provide SMB transparent failover with continuous available file shares for end users. So, we’re not talking about a Scale Out File Server here.

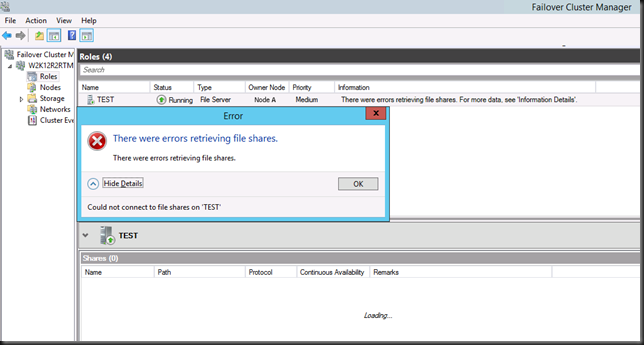

All seemed to go pretty smooth until we hit a problem. when the role is running on Node A and you are using the GUI on Node A this is what you see:

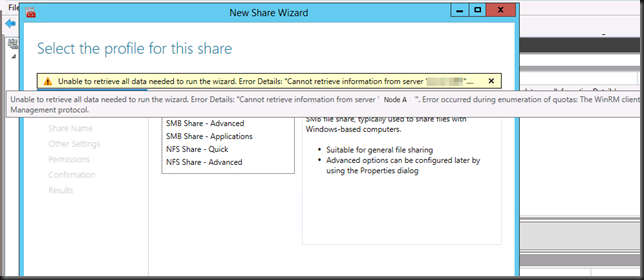

When you try to add a share you get this

"Unable to retrieve all data needed to run the wizard. Error details: "Cannot retrieve information from server "Node A". Error occurred during enumeration of SMB shares: The WinRM protocol operation failed due to the following error: The WinRM client sent a request to an HTTP server and got a response saying the requested HTTP URL was not available. This is usually returned by a HTTP server that does not support the WS-Management protocol.”

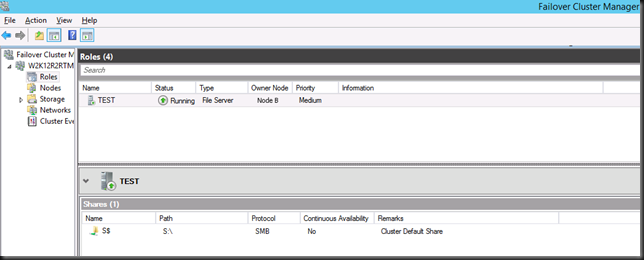

When you failover the file server role to the other node, things seem to work just fine. So this is where you run the GUI from Node A while the file server role resides on Node B.

You can add a share, it all works. You notice the exact same behavior on the other node. So as long as the role is running on another node than the one on which you use Failover Cluster Manager you’re fine. Once you’re on the same node you run into this issue. So what’s going on?

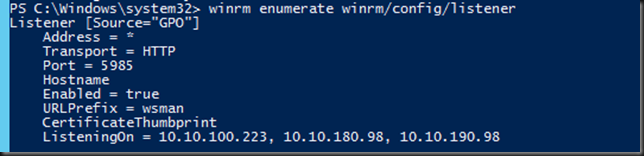

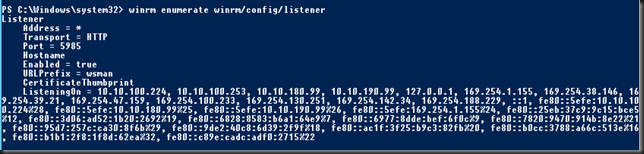

So what to do? It’s related to WinRM so let’s investigate that.

So the WinRM config comes via a GPO. The local GPO for this is not configured. So that’s not the one, it must come from the domain.The IP addresses listed are the node IP and the two cluster networks. What’s not there is local host 127.0.0.1, the cluster IP address or any of the IPV6 addresses.

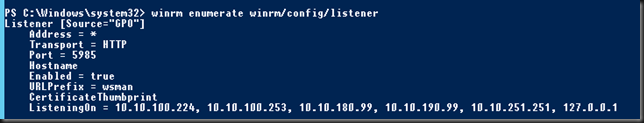

I experimented with a lot of settings. First we ended up creating an OU in the OU where the cluster nodes reside on which we blocked inheritance. We than ran gpupdate /target:computer /force on both nodes to make sure WinRM was no longer configure by the domain GPO. As the local GPO was not configured it reverted back to the defaults. The listener show up as listing to all IPv4 and IPv6 addresses. Nice but the GPO was now disabled.

This is interesting but, things still don’t work. For that we needed to disable/enable WinRM

Configure-SMRemoting -disable

Configure-SMRemoting –enable

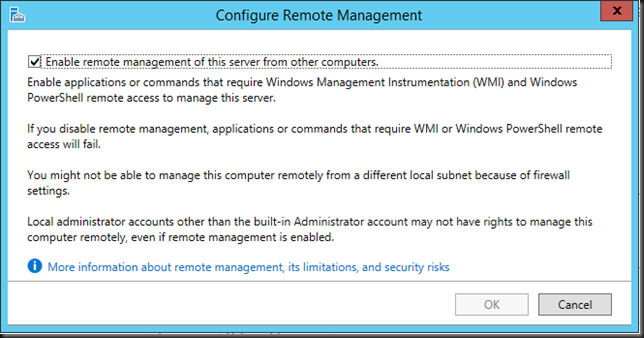

or via server manager

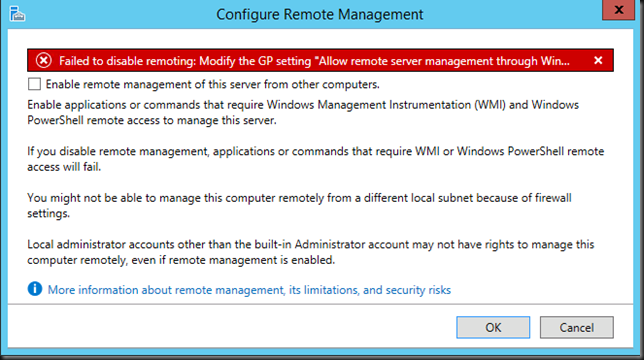

That fixed it, and we it seems a necessity to to. Do note that to disable/enable remote management it should not be configured via a GPO or it throws an error like

or

Some more testing

We experimented by adding 127.0.0.0-172.0.0.1 an enabling the GPO again. We then saw the listener did show the local host, cluster & file role IP address but the issue was back. Using * in just IPv 4 did not do the trick either.

What did the trick was to use * in the filter for IPv 6 and keep our original filters on IPv4. The good news is that having removed the GPO and disabling/enabling WinRM the cluster IP address & Filer Role IP address are now in the list. That could be good for other use cases.

This is not ideal, but it all works now.

What we settled for

So we ended up with still restricting the GPO settings for IPv4 to subnet ranges and allowing * for IPv6. This made sure that even when we run the Failover Cluster Manager GUI from the node that owns the file server role everything still works.

One workaround is to work from a remote host, not from a cluster member, which is a good practice anyway.

The key takeaway is that when Microsoft says they test with IPv6 enabled they literally mean for everything.

Note

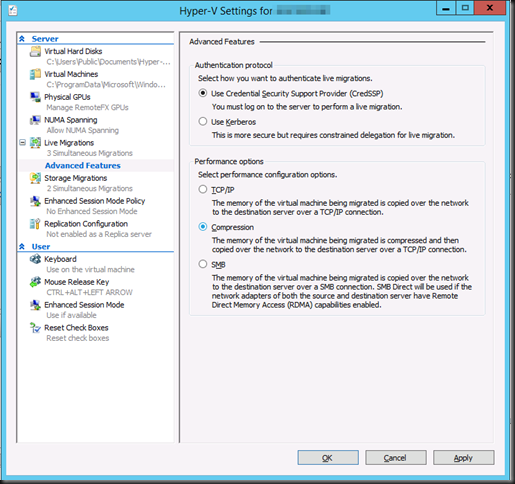

There is a TechNet article on WinRM GPO Settings for SCVMM 2012 RC where they advice to set both IPv4 and IPv6 to * to avoid issues with SCVMM operations. How to Add Trusted Hyper-V Hosts and Host Clusters in VMM

However, we found that IPv6 is the key requirement here, * for just IP4 alone did not work.