This is a 4th post in a series of 4. Here’s a list of all parts:

- Introducing 10Gbps Networking In Your Hyper-V Failover Cluster Environment (Part 1/4)

- Introducing 10Gbps With A Dedicated CSV & Live Migration Network (Part 2/4)

- Introducing 10Gbps & Thoughts On Network High Availability For Hyper-V (Part 3/4)

- Introducing 10Gbps & Integrating It Into Your Network Infrastructure (Part 4/4)

In my blog post “Introducing 10Gbps & Thoughts On Network High Availability For Hyper-V (Part 3/4)” in a series of thoughts on 10Gbps and Hyper-V networking a discussion on NIC teaming brought up the subject of 10Gbps for virtual machine networks. This means our switches will probably no longer exist in isolation unless those virtual Machines don’t ever need to talk to anything outside what’s connected to those switches. This is very unlikely. That means we need to start thinking and talking about integrating the 10Gbps switches in our network infrastructure. So we’re entering the network engineers their turf again and we’ll need to address some of their concerns. But this is not bad news as they’ll help us prevent some bad scenarios.

Optimizing the use of your 10Gbps switches

As not everyone runs clusters big enough, or enough smaller clusters, to warrant an isolated network approach for just cluster networking. As a result you might want to put some of the remaining 10Gbps ports to work for virtual machine traffic. We’ve already pointed out that your virtual machines will not only want to talk amongst themselves (it’s a cluster and private/internal networks tend to defeat the purpose of a cluster, it just doesn’t make any sense as than they are limited within a node) but need to talk to other servers on the network, both physical and virtual ones. So you have to hook up your 10Gbps switches from the previous example to the rest of the network. Now there are some scenarios where you can keep the virtual machine networks isolated as well within a cluster. In your POC lab for example where you are running a small 100% virtualized test domain on a cluster in a separate management domain but these are not the predominant use case.

But you don’t only have to have to integrate with the rest of your network, you may very well want to! You’ve seen 10Gbps in action for CSV and Live Migration and you got a taste for 10Gbps now, you’re hooked and dream of moving each and every VM network to 10Gbps as well. And while your add it your management network and such as well. This is nothing different from the time you first got hold of 1Gbps networking kit in a 100 Mbps world. Speed is addictive, once you’re hooked you crave for more

How to achieve this? You could do this by replacing the existing 1Gbps switches. That takes money, no question about it. But think ahead, 10Gbps will be common place in a couple of years time (read prices will drop even more). The servers with 10Gbps LOM cards are here or will be here very soon with any major vendor. For Dell this means that the LOM NICs will be like mezzanine cards and you decide whether to plug in 10Gbps SPF+ or Ethernet jacks. When you opt to replace some current 1Gbps switches with 10gbps ones you don’t have to throw them away. What we did at one location is recuperate the 1Gbps switches for out of band remote access (ILO/DRAC cards) that in today’s servers also run at 1Gbps speeds. Their older 100Mbps switches where taken out of service. No emotional attachment here. You could also use them to give some departments or branch offices 1gbps to the desktop if they don’t have that yet.

When you have ports left over on the now isolated 10Gbps switches and you don’t have any additional hosts arriving in the near future requiring CSV & LM networking you might as well use those free ports. If you still need extra ports you can always add more 10Gbps switches. But whatever the case, this means up linking those cluster network 10Gbps switches to the rest of the network. We already mentioned in a previous post the network people might have some concerns that need to be addressed and rightly so.

Protect the Network against Loops & Storms

The last thing you want to do is bring down your entire production network with a loop and a resulting broadcast storm. You also don’t want the otherwise rather useful spanning tree protocol, locking out part of your network ruining your sweet cluster setup or have traffic specifically intended for your 10Gbps network routed over a 1Gbps network instead.

So let us discuss some of the ways in which we can prevent all these bad things from happening. Now mind you, I’m far from an expert network engineer so to all CCIE holders stumbling on to this blog post, please forgive me my prosaic network insights. Also keep in mind that this is not a networking or switch configuration course. That would lead us astray a bit too far and it is very dependent on your exact network layout, needs, brand and model of switches etc.

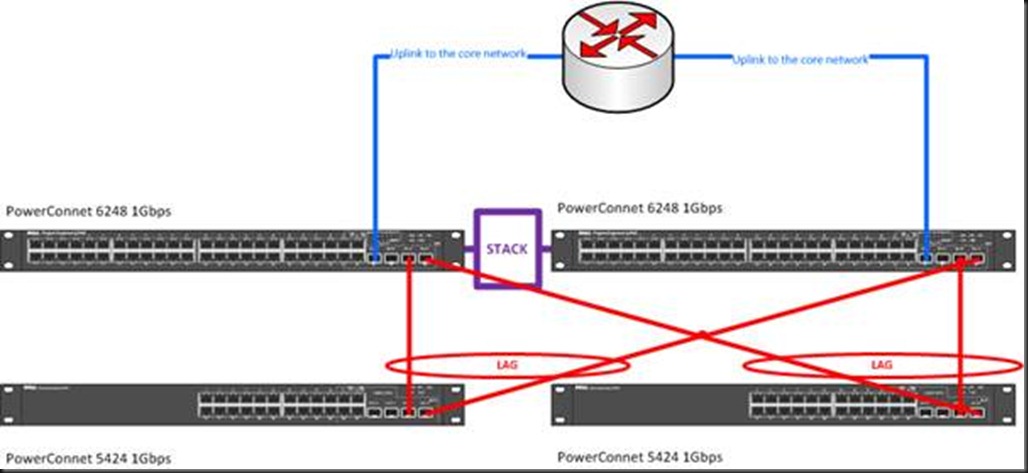

As discussed in blog post Introducing 10Gbps With A Dedicated CSV & Live Migration Network (Part 2/4) you need a LAG between your switches as the traffic for the VLANs serving heartbeat, CSV, Live Migration, virtual machines but now also perhaps the host management and optional backup network must flow between the switches. As long as you have only two switches that have a LAG between them or that are stacked you have not much risk of creating a loop on the network. Unless you uplink two ports directly with a network cable. Yes, that happens, I once witnessed a loop/broadcast storm caused by someone who was “tidying up” spare CAT5E cables buy plugging all ends up into free port switches. Don’t ask. Lesson learned: disable every switch port not in use.

Now once you uplink those two or more 10Gbps switches to your other switches in a redundant way you have a loop. That’s where the Spanning Tree protocol comes in. Without going into detail this prevents loops by blocking the redundant paths. If the operational path becomes unavailable a new path is established to keep network traffic flowing. There are some variations in STP. One of them is Rapid Spanning Tree Protocol (RSTP) that does the same job as STP but a lot faster. Think a couple of seconds to establish a path versus 30 seconds or so. That’s a nice improvement over the early days. Another one that is very handy is the Multiple Spanning Tree Protocol (MSTP). The sweet thing about the latter is that you have blocking per VLANs and in the case of Hyper-V or storage networks this can come in quite handy.

Think about it. Apart from preventing loops, which are very, very bad, you also like to make sure that the network traffic doesn’t travel along unnecessary long paths or over links that are not suited for its needs. Imagine the Live Migration traffic between two nodes on different 10Gbps switches travelling over the 1Gbps uplinks to the 1Gbps switches because the STP blocked the 10Gbps LAG to prevent a loop. You might be saturating the 1Gbps infrastructure and that’s not good.

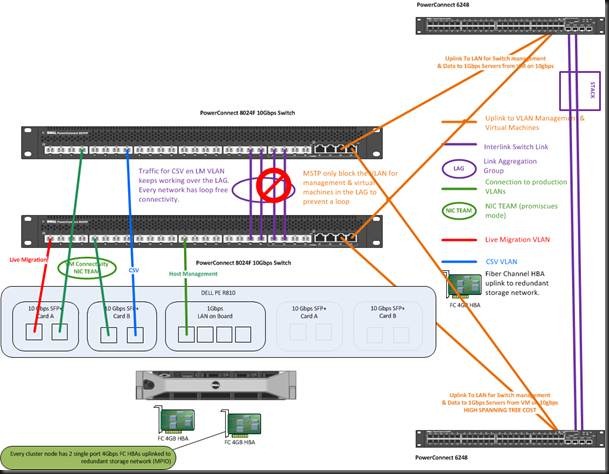

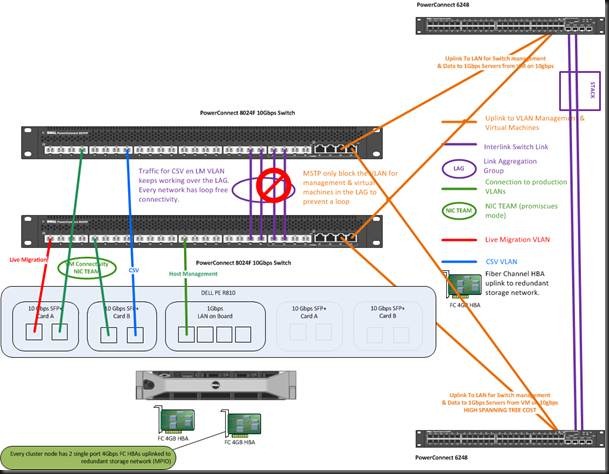

I said MSTP could be very handy, let’s address this. You only need the uplink to the rest of the network for the host management and virtual machine traffic. The heartbeat, CSV and Live Migration traffic also stops flowing when the LAG between the two 10Gbps switches is blocked by the RSTP. This is because RSTP works on the LAG level for all VLANs travelling across that LAG and doesn’t discriminate between VLAN. MSTP is smarter and only blocks the required VLANS. In this case the host management and virtual machines VLANS as these are the only ones travelling across the link to the rest of the network.

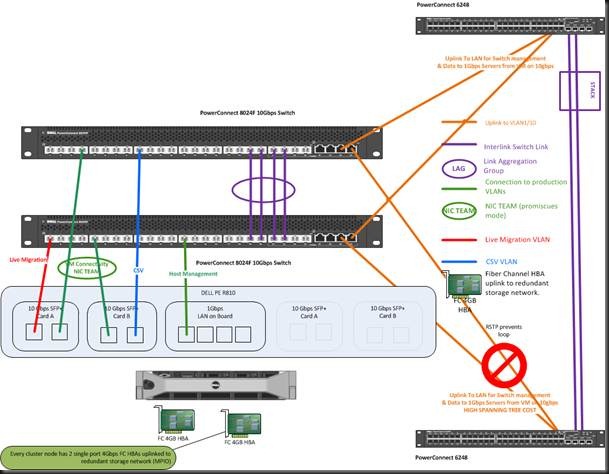

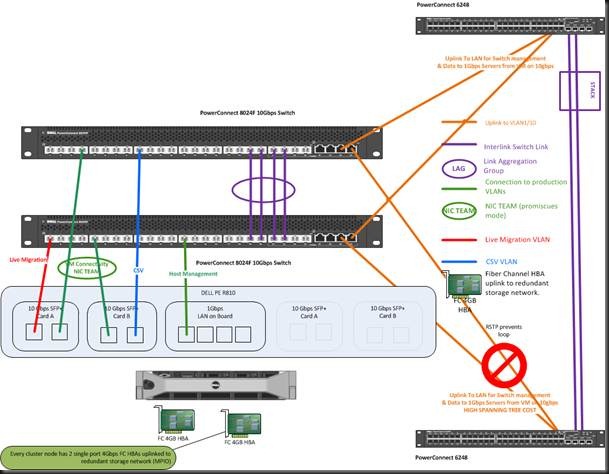

We’ll illustrate this with some picture based on our previous scenarios. In this example we have the cluster network going to the 10Gbps switches over non teamed NICs. The same goes for the virtual machine traffic but the NICs are teamed, and the host management NICS. Let’s first show the normal situation.

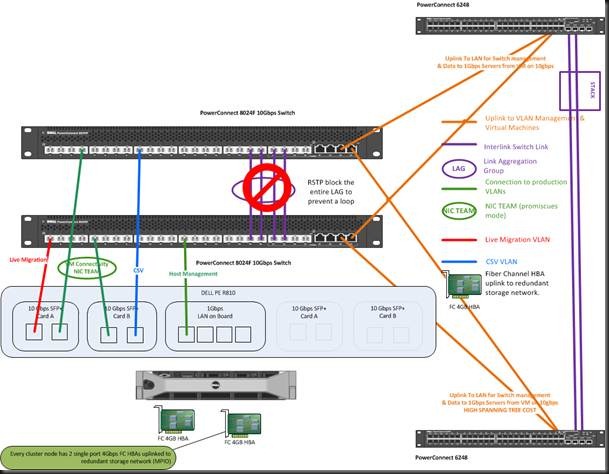

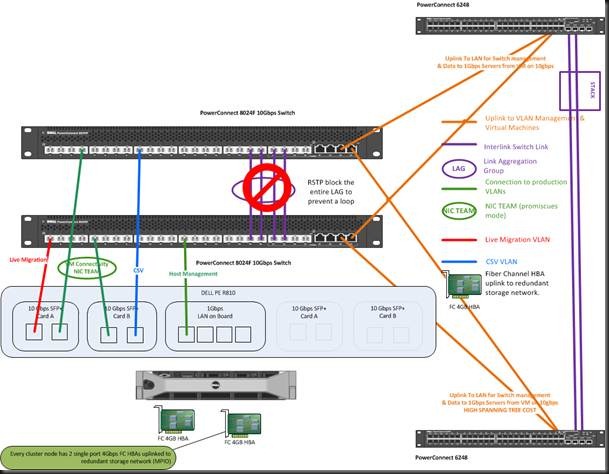

Now look at a situation where RSTP blocks the purple LAG. Please do note that if the other network switches are not 10Gbps that traffic for the virtual machines would be travelling over more hops and at 1Gbps. This should be avoided, but if it does happens, MSTP would prevent an even worse scenario. Now if you would define the VLANS for cluster network traffic on those (orange) uplink LAGs you can use RSTP with a high cost but in the event that RSTP blocks the purple LAG you’d be sending all heartbeat, CSV and Live Migration traffic over those main switches. That could saturate them. It’s your choice.

In the picture below MSTP saves the day providing loop free network connectivity even if spanning tree for some reasons needs to block the LAG between the two 10Gbps switches. MSTP would save your cluster network traffic connectivity as those VLAN are not defined on the orange LAG uplinks and MSTP prevents loops by blocking VLAN IDs in LAGs not by blocking entire LAGs

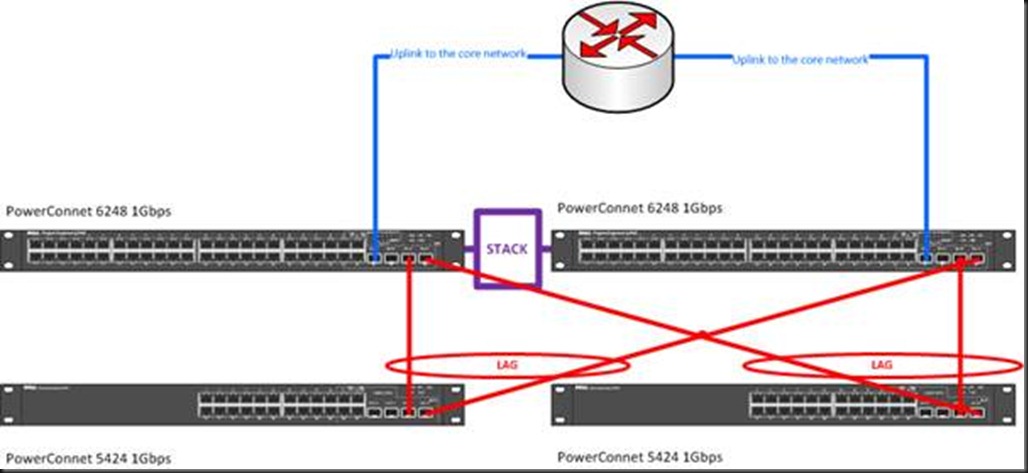

To conclude I’ll also mention a more “star like” approach in up linking switches. This has a couple of benefits especially when you use stackable switches to link up to. They provide the best bandwidth available for upstream connections and they provide good redundancy because you can uplink the lag to separate switches in the stack. There is no possibility for a loop this way and you have great performance on top. What’s not to like?

Well we’ve shown that each network setup has optimal, preferred network traffic paths. We can enforce these by proper LAG & STP configuration. Other, less optimal, paths can become active to provide resiliency of our network. Such a situation must be addressed as soon as possible and should be considered running on “emergency backup”. You can avoid such events except for the most extreme situations by configuring the RSTP/MSTP costs for the LAG correctly and by using multiple inter switch links in every LAG. This does not only provide for extra bandwidth but also protects against cable or port failure.

Conclusion

And there you have it, over a couple of blog posts I’ve taken you on a journey through considerations about not only using 10Gbps in your Hyper-V cluster environments, but also about cluster networks considerations on a whole. Some notes from the field so to speak. As I told you this was not a deployment or best practices guide. The major aim was to think out loud, share thoughts and ideas. There are many ways to get the job done and it all depends on your needs an existing environment. If you don’t have a network engineer on hand and you can’t do this yourself; you might be ready by now to get one of those business ready configurations for your Hyper-V clustering. Things can get pretty complex quite fast. And we haven’t even touched on storage design, management etc.. The purpose of these blog was to think about how Hyper-V clustering networks function and behave and to investigate what is possible. When you’re new to all this but need to make the jump into virtualization with both feet (and you really do) a lot help is available. Most hardware vendors have fast tracks, reference architectures that have a list of components to order to build a Hyper-V cluster and more often than not they or a partner will come set it all up for you. This reduces both risk and time to production. I hope that if you don’t have a green field scenario but want to start taking advantage of 10Gbps networking; this has given you some food for thought.

I’ll try to share some real life experiences,what improvements we actually see, with 10Gbps speeds in a future blog post.

![]()