Configuring live migration settings on a cluster

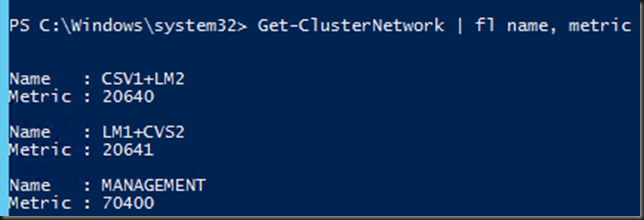

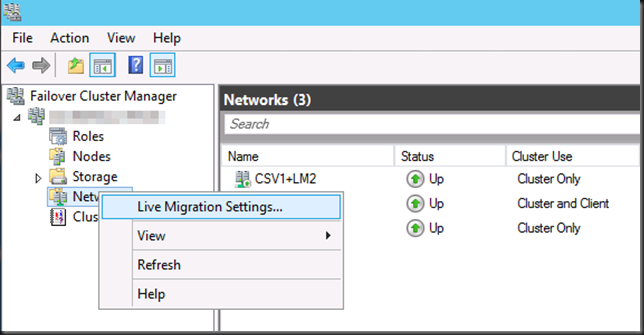

In the cluster, under Networks, Live Migration Settings, you can select which networks are available for live migration and their order of preference.

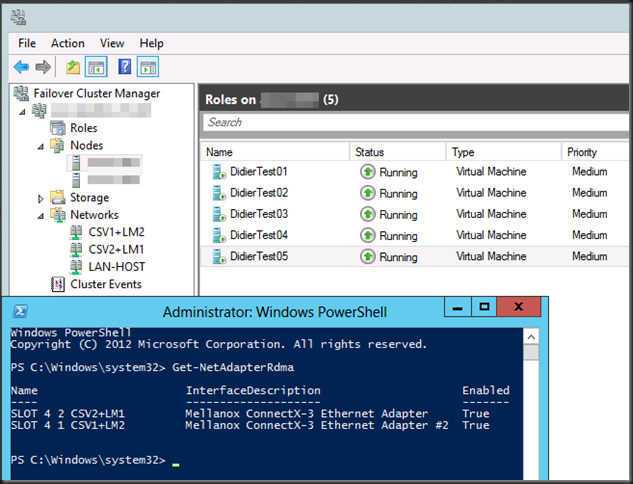

Basically, this setting determines which NICs can be used and in what order of preference the available networks are used by live migration. It does not determine bandwidth aggregation or failover. All it does is provide the order in which the redundant networks will be used. It’s up to the cluster service (NETFT), Multichannel or SMB Direct to provide the bandwidth aggregation if possible. As you can see, we use LM/CSV over SMB, and as our two NICs are RDMA-capable 10Gbps NICs, Multichannel will discover the RDMA capabilities and leverage SMB Direct if it can be established; otherwise it will just stick with Multichannel. If you team the NICs, the team shows up as just one network. Also note that if you lose a NIC during live migration, it might fail for some VMs under certain scenarios, but your cluster nodes will maintain the capability and recover.

The names of the networks reflect this: LM1+CSV2 and CSV1+LM2 will both be used, but if for some reason Multichannel goes completely south, the names reflect the metrics of these networks. The lowest metric is CSV1+LM2 and the second lowest is LM1+CSV2, which reflects how NETFT will automatically select which one to use based on the metrics. In other words, it’s “self-documentation” for human consumption.
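To make the "lowest metric wins" idea concrete, here is a minimal Python sketch of a toy model. The metric numbers and the `Management` network are made up for illustration; only the relative order (CSV1+LM2 lowest, LM1+CSV2 second lowest) comes from the setup described here. It is not how NETFT is implemented, just a way to picture the ordering.

```python
# Toy model: order cluster networks by metric, lowest first.
# All metric values are hypothetical; only their relative order matters.
networks = {
    "LM1+CSV2": 1100,     # hypothetical value, second lowest
    "CSV1+LM2": 1000,     # hypothetical value, lowest
    "Management": 30000,  # hypothetical extra network with a high metric
}


def preference_order(metrics):
    """Return the network names sorted by ascending metric."""
    return sorted(metrics, key=metrics.get)


order = preference_order(networks)
print(order)
```

With names like these, the first entry in the sorted list is the network whose name starts with CSV1, so the naming and the metrics tell the same story.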

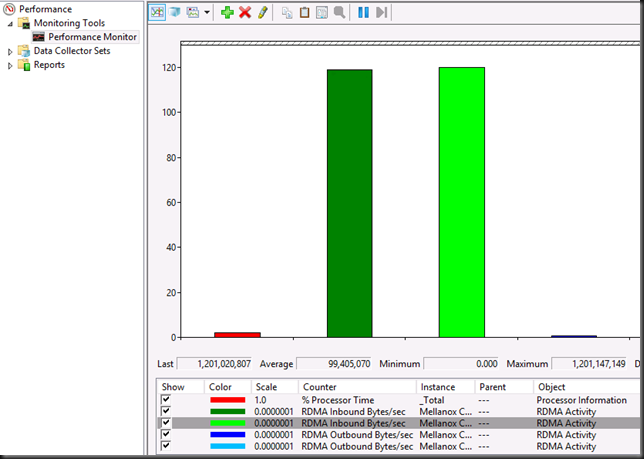

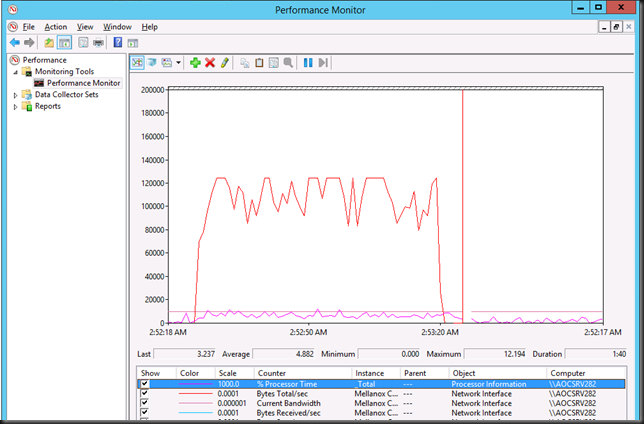

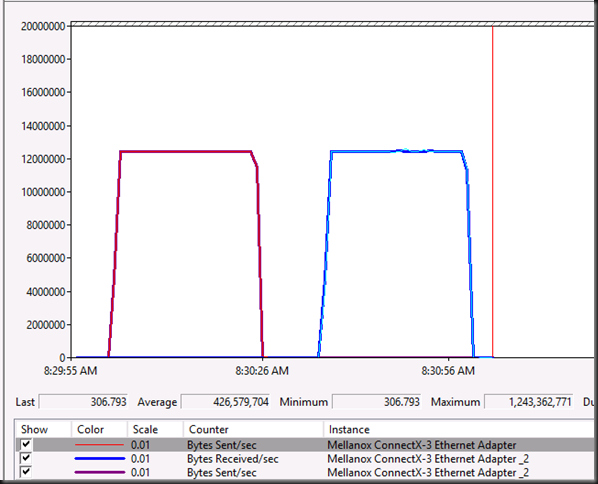

Sometimes you might get surprising results. An example: you’ve selected SMB for live migration and you have selected only one of the NICs here. Still, when you look at the network performance you might see both being used! What’s happening is that Multichannel kicks in, uses two or more similar NICs when it finds them and, if applicable, moves to RDMA.

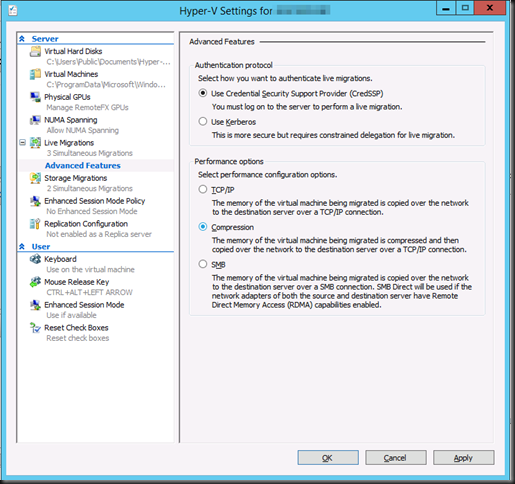

So here we select SMB as the live migration performance option, and with two equally capable 10Gbps NICs available, live migration will use both of them, even if you selected only one of them in the cluster network settings for live migration.

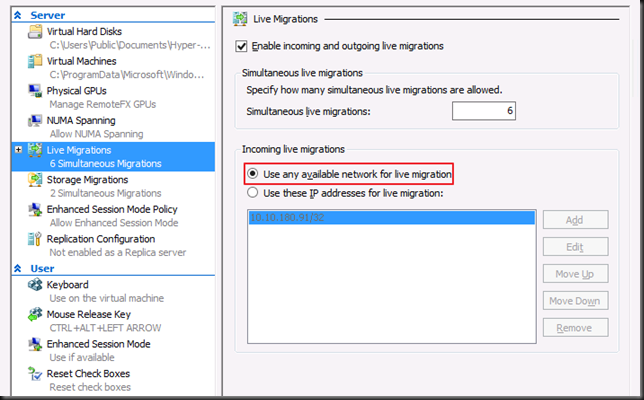

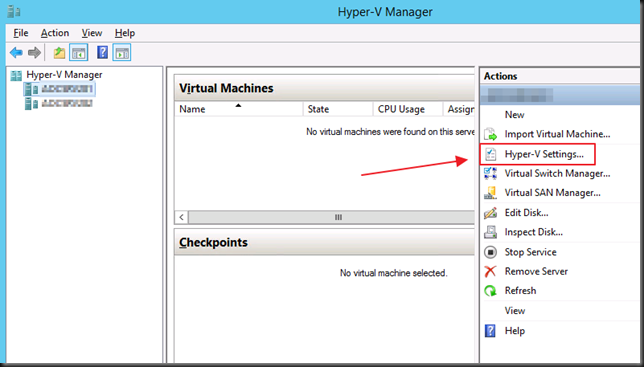

Why is that? Well, there is still another location where live migration settings are defined and that is in Hyper-V Manager. Take a look at the screenshot below.

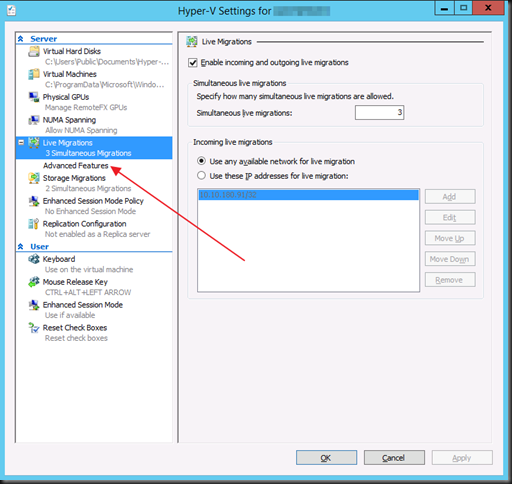

The Incoming live migrations setting is set to “Use any available network for live migration”. If you have this on, live migration will still leverage both NICs: when one is used, Multichannel drags the other one into action anyway, no matter what you set in the network settings for live migration in the Cluster GUI (it’s set to use only one and dimmed out).

Do note that in Hyper-V Manager the live migration settings specify “Incoming live migrations”. That leads us to believe that it’s the target, the node the VMs are migrated to, that determines which NICs get used. But let’s put this to the test.

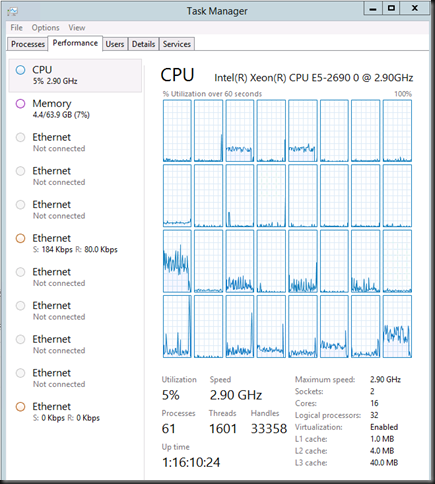

Testing

A Hyper-V cluster with two nodes – Node A and Node B

In the cluster network settings you select only one network.

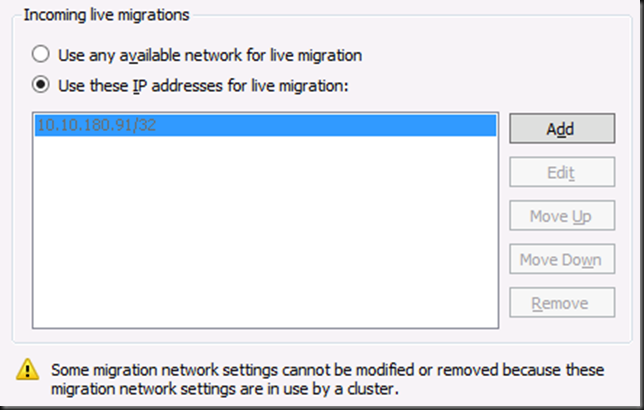

On Hyper-V cluster Node A, live migration is configured via Hyper-V Manager to “Use these IP addresses for live migration”. You cannot add or remove networks; the networks used are defined by the cluster.

On Hyper-V cluster Node B, live migration is configured via Hyper-V Manager to “Use any available network for live migration”.

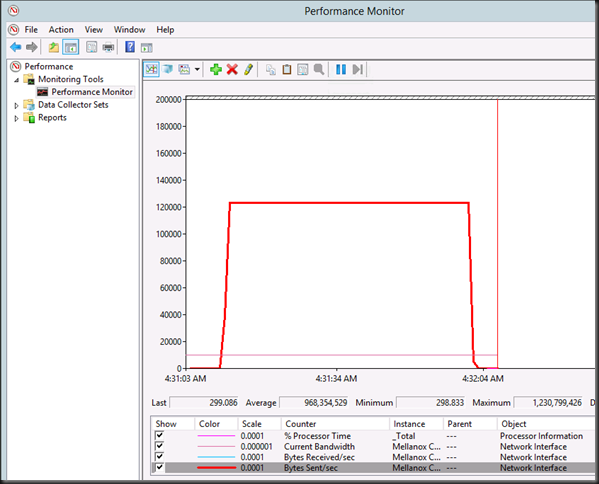

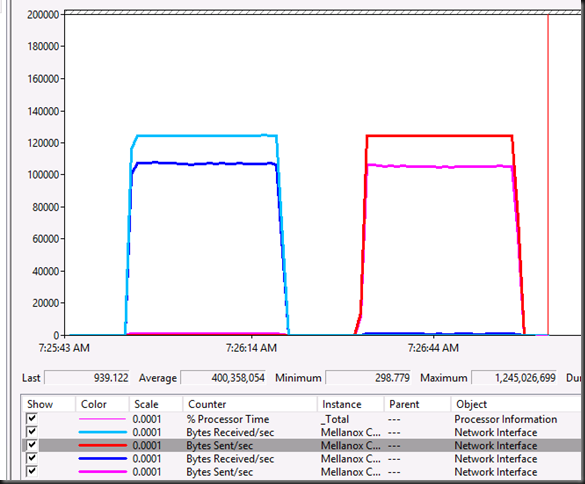

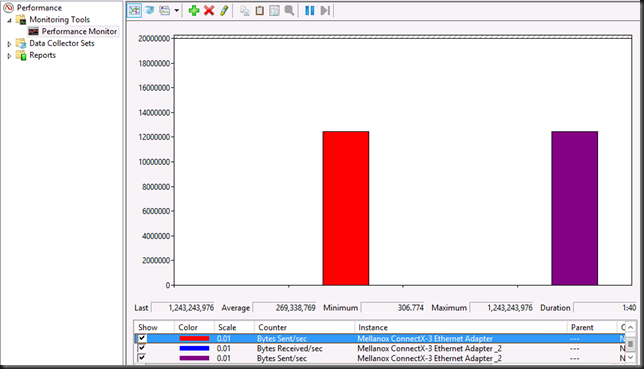

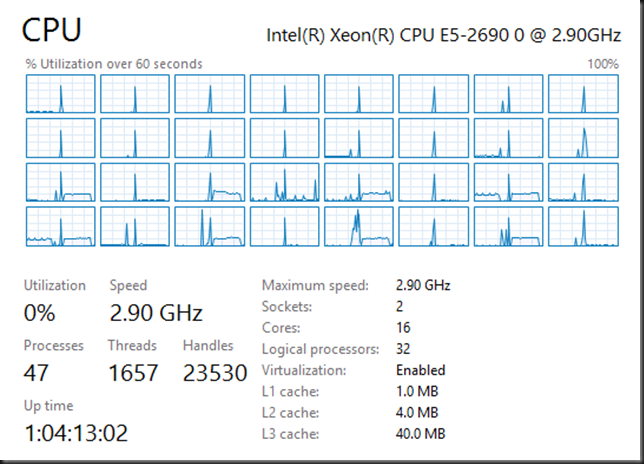

As we now kick off a live migration from Node A to Node B, we’ll see both NICs being used. Why? Because Node B is the target and Node B has the setting “Use any available network for live migration”. So why only these two, and why not pick up any other suitable NICs? Well, we’ve configured live migration on both nodes to use SMB. As this cluster is RDMA capable, that means it will leverage Multichannel/SMB Direct. The auto-configuration selects the best, equally capable NICs for this, and in our scenario that’s these two. Remember that the capabilities of the NICs have to match: no mixtures of one 1Gbps and one 10Gbps NIC, or of one Multichannel-only and one SMB Direct NIC.
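The "best, equally capable NICs" rule can be pictured with a small Python sketch. This is a simplified model and not the actual SMB Multichannel selection logic: the `Nic` class, the `pick_matching_nics` helper, the `Management` NIC and the choice to rank RDMA capability above link speed are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nic:
    name: str
    gbps: int   # link speed in Gbps
    rdma: bool  # True if the NIC is RDMA capable


def pick_matching_nics(nics):
    """Return every NIC that shares the best (rdma, speed) capability.

    Assumption: RDMA capability ranks above raw link speed.
    """
    if not nics:
        return []
    best = max((n.rdma, n.gbps) for n in nics)
    return [n for n in nics if (n.rdma, n.gbps) == best]


nics = [
    Nic("LM1+CSV2", 10, True),
    Nic("CSV1+LM2", 10, True),
    Nic("Management", 1, False),  # hypothetical 1Gbps NIC without RDMA
]
selected = [n.name for n in pick_matching_nics(nics)]
print(selected)
```

In this model the two RDMA-capable 10Gbps NICs are picked together, and the slower NIC is left out because its capabilities do not match.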

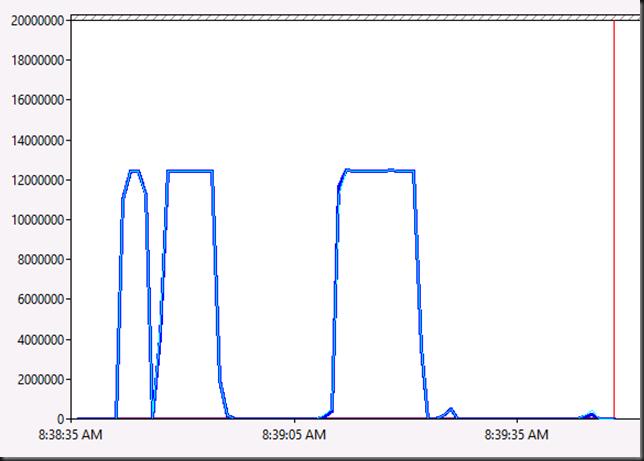

The confusion really sets in when you find that even if you live migrate from Node B to Node A, it also uses both NICs! Hmm, so “Incoming live migrations” is not always “correct”, it seems, at least not when using SMB as the performance option. Apparently Multichannel kicks in in both directions.

If you set both Node A and Node B to “Use these IP addresses for live migration” and leave the cluster network setting with only one network, it does use only one, even with SMB as the performance option for live migration. This is expected.
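The outcomes of these tests can be summed up in a few lines of Python. This is only a summary of what was observed in this two-node lab with SMB as the performance option, not a description of how Hyper-V decides internally; the function name `networks_used` and its parameters are invented for this sketch.

```python
ANY = "Use any available network for live migration"
THESE = "Use these IP addresses for live migration"


def networks_used(cluster_selected, capable_nics, node_a_setting, node_b_setting):
    """Networks seen in use for an SMB live migration, in either direction.

    cluster_selected: networks ticked in the cluster live migration settings
    capable_nics:     the equally capable NICs Multichannel can pair up
    """
    # Observed: as soon as one node allows any network, Multichannel
    # pulls in the matching NICs regardless of the migration direction.
    if ANY in (node_a_setting, node_b_setting):
        return list(capable_nics)
    # Observed: with both nodes restricted, only the cluster selection is used.
    return list(cluster_selected)


both = ["LM1+CSV2", "CSV1+LM2"]
one = ["LM1+CSV2"]  # which single network was ticked is an assumption here

print(networks_used(one, both, THESE, ANY))    # Node A restricted, Node B any
print(networks_used(one, both, THESE, THESE))  # both nodes restricted
```

The first call models the Node A / Node B test and the second models the case where both nodes are restricted to the cluster-defined network.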

Note: I had one interesting hiccup while testing this configuration. When doing the latter, one of the VMs failed during a live migration of the entire host. I ran it again and that one VM still used both networks. All the others went well during the host migration, with just one network being used. That was a bit of a “huh” moment and it sure tripped me up and kept me busy for a while. I blame RDMA and the position of the planets and constellations.

Things aren’t always what they seem at first and it’s good to keep that in mind. The moment you think you’ve got it figured out, you’re wrong. So look again and investigate.