Introduction

With Windows Server 2012 and Windows 8 (and Windows 7 RDP client 8.0) with some updates we got support for RDP to use UDP for data transport. This gave us a great experience over less reliable to even rather bad networks.

Anecdote: I was in an area of the world where there was no internet access available bar a very bad and lousy Wi-Fi connection at the shop/cafeteria. That was just fine, I wasn’t there for the great Wi-Fi access at all. But I needed to check e-mail and that wasn’t succeeding in any way, the network reliability was just too bad. I got the job done by using RDP to connect to a workstation back home (across the ocean on another continent) and check my e-mail there. Not a super great experience but UDP made it possible where nothing else worked. I was impressed.

Changes in RDP over UDP behavior in Windows 10 and Windows 2016

When connecting to Windows Server 2016 or a Windows 10 over a RD Gateway we see 1 HTTP and only one UDP connection being established for a session. We used to see 1 HTTP and 2 UDP connections per session with Windows 8/8.1 and Windows Server 2012(R2)

It doesn’t matter if your client is running RDP 8.0 or RDP 10.0 or whether the RD Gateway itself is running Windows Server 2012 R2 or Windows Server 2016. The only thing that does matter is the target that you are connecting to.

Also, this has nothing to do with a Firewall or so acting up, we’re testing with and without with the same IP etc. Let’s take a quick look at some examples and compare.

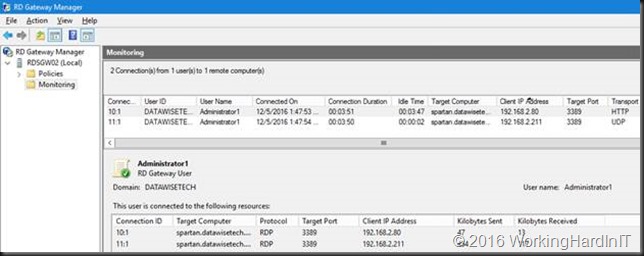

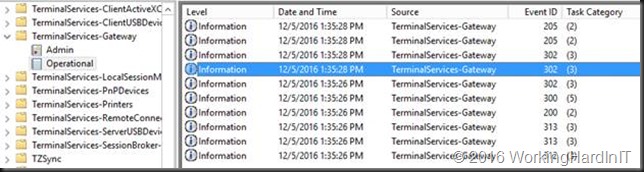

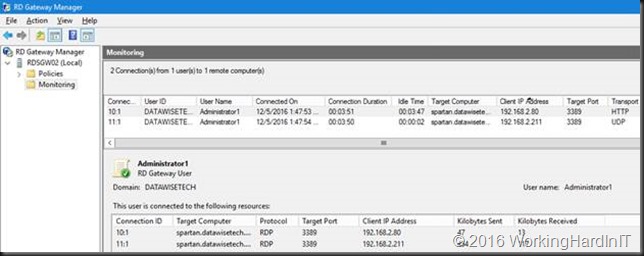

When connecting to Windows 10 or Windows Server 2016 we see that 1 UDP connection is established.

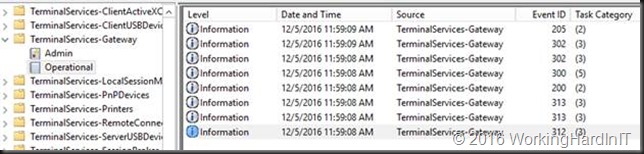

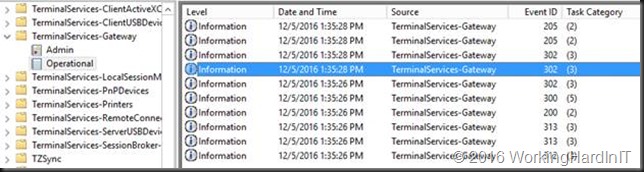

In total, there are 8 events logged for a successful connection over the RDG Gateway.

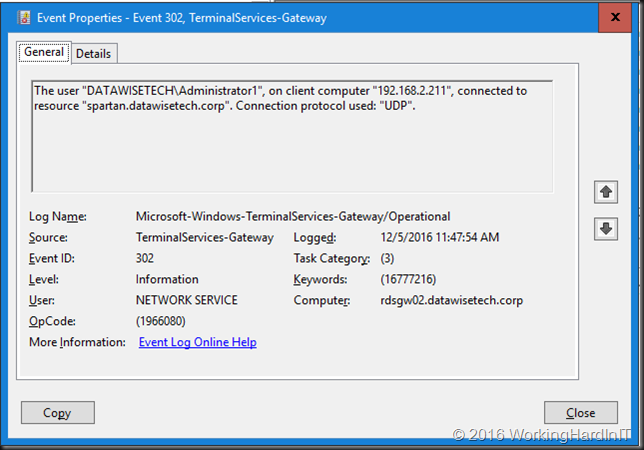

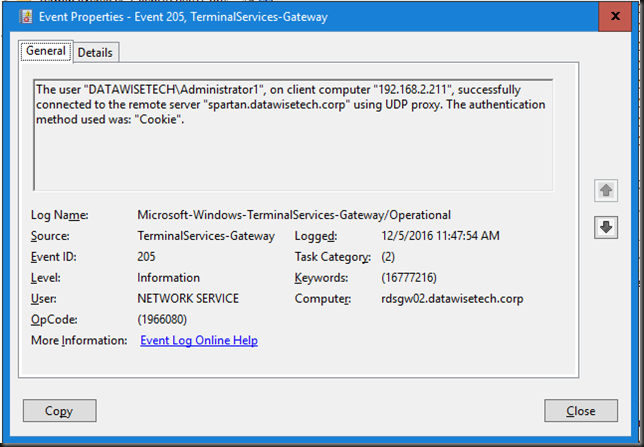

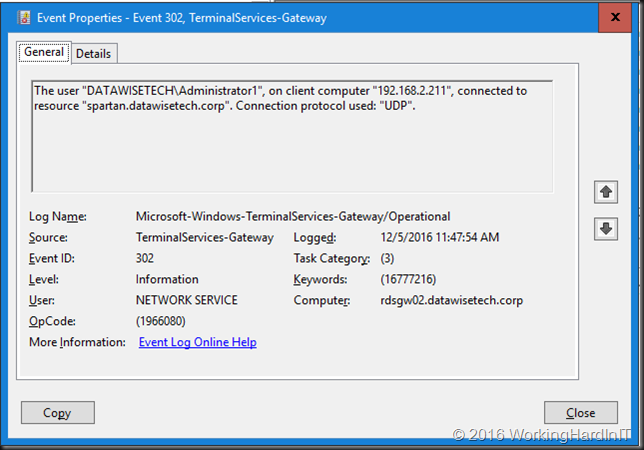

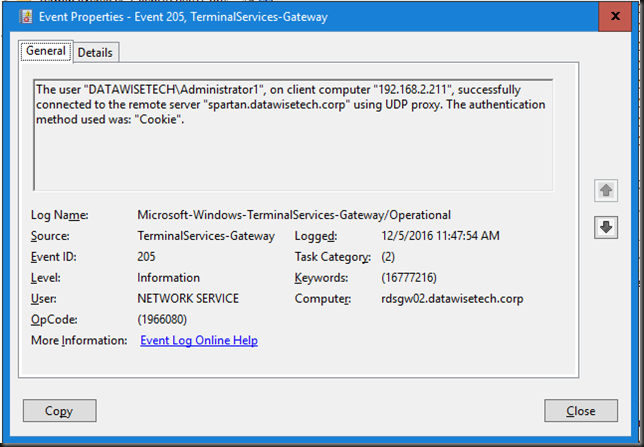

You’ll find 2 event ID 302 events (1 for a HTTP connection and 1 for a UDP connection) as well as 1 Event ID 205 events for the UDP proxy usage.

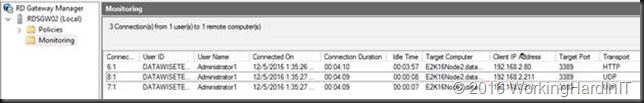

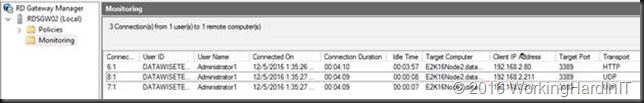

In the RD Gateway manager, monitoring we can see 1 HTTP and the 1 UDP connections for one RDP Session to a Windows 2016 Server.

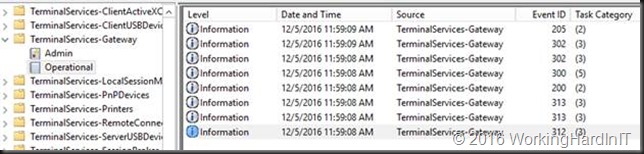

When connecting to Windows 8/8.1 or Windows Server 2012 (R2) we see that 2 UDP connections are established.

In total, there are 10 events logged for a successful connection over the RDG Gateway:

You’ll find 3 event ID 302 events (1 for a HTTP connection and 2 for a UDP connection) as well as 2 Event ID 205 events for the UDP proxy usage.

In the RD Gateway manager, monitoring we can see 1 HTTP and the 2 UDP connections for one RDP Session to a Windows 2012 R2 Server.

So, RDP wise something seems to have changed. But I do not know the story and why.