Introduction

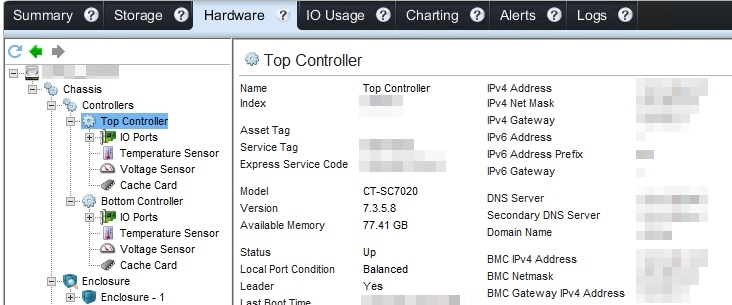

Once you have upgraded your SC Series SAN to SCOS 7.3 (7.3.5.8.4 at the time of writing; see https://blog.workinghardinit.work/2018/08/13/sc-series-scos-7-3/ ), you can immediately start using the SCOS 7.3 distributed spares feature. Enabling it is easy and virtually transparent.

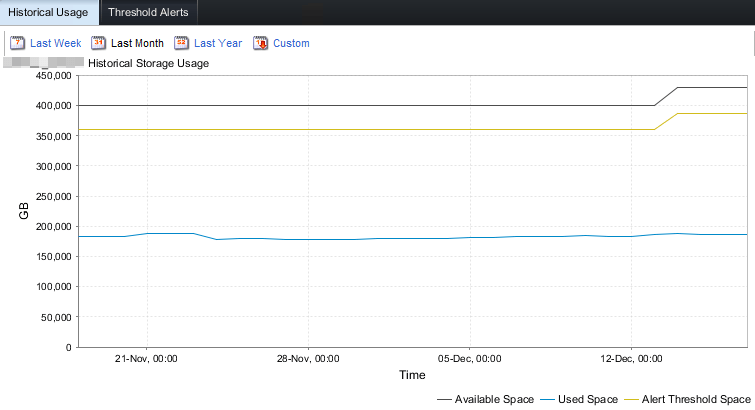

After upgrading to 7.3 you will notice a jump in the capacity and threshold values of your SC array. That is because the system now knows that spare capacity is handled differently.

How?

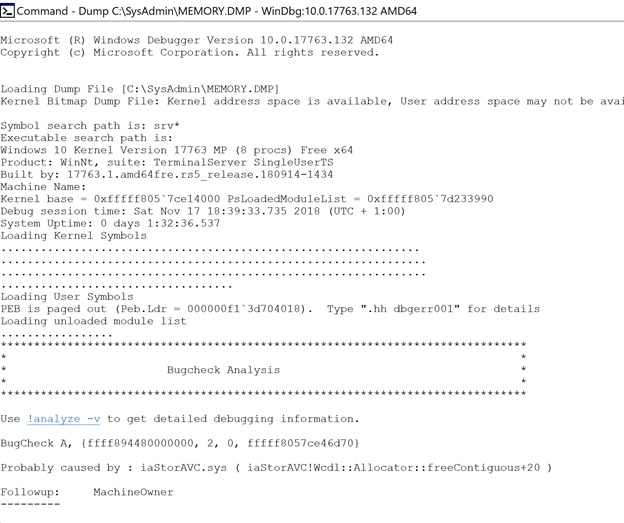

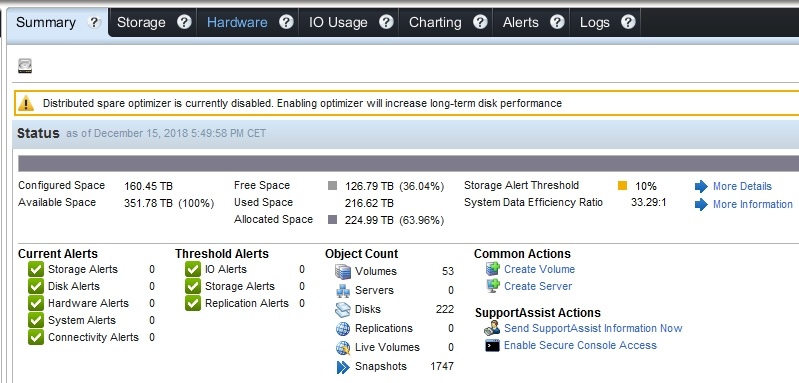

After upgrading you’ll see a notification either in Storage Manager or in Unisphere Central that informs you about the following:

“Distributed spare optimizer is currently disabled. Enabling optimizer will increase

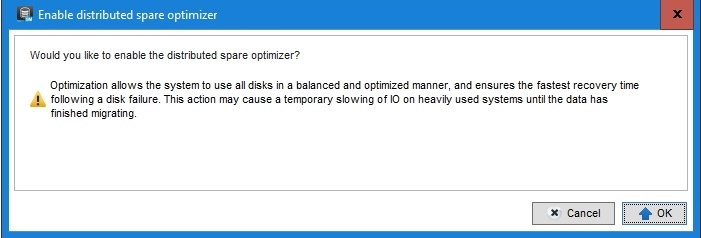

Once you click “Enable”, you’ll be asked whether you want to proceed.

When you click “OK”, the optimizer is configured and starts its work. This is a one-way street: you cannot go back to classic hot spares. That is good to know, but in reality you won’t want to go back anyway.

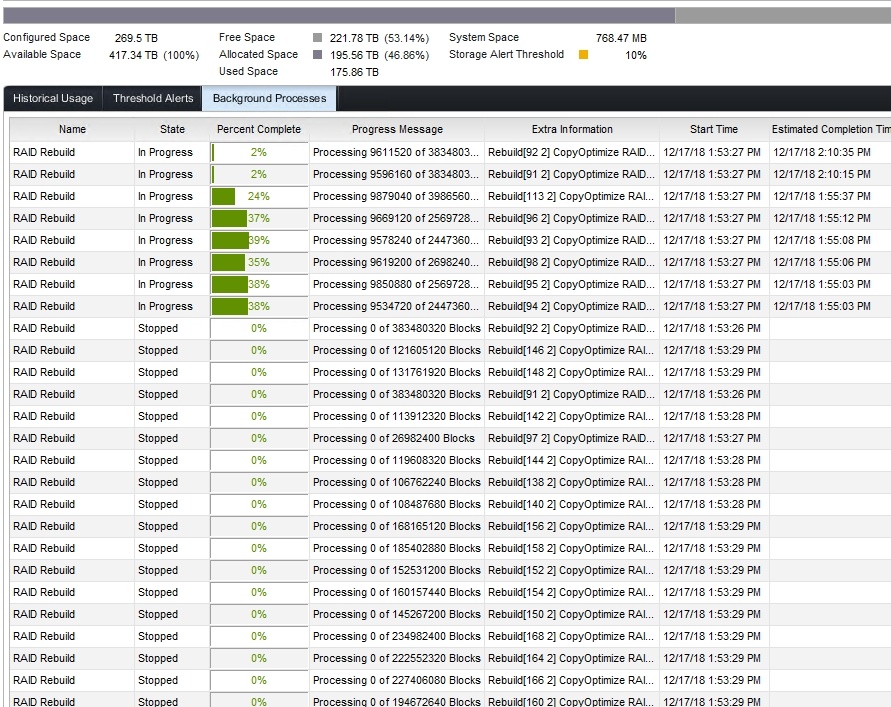

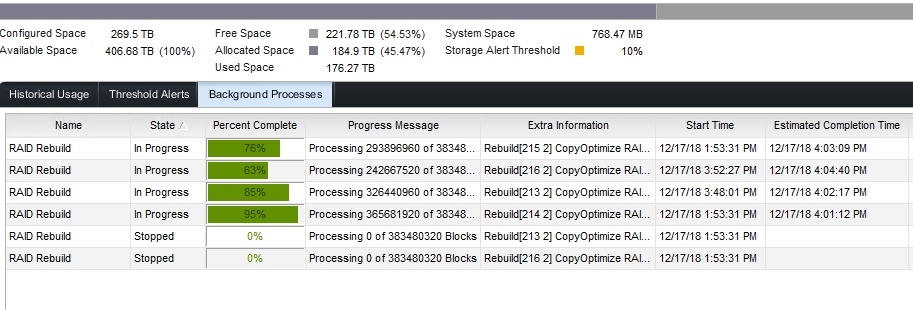

In “Background Processes” you can follow the progress of the initial redistribution. It runs reasonably fast: I did 3 SANs in a single workday, and no one noticed or complained about any performance issues.

The benefits are crystal clear

The benefits of SCOS 7.3 Distributed Spares are crystal clear:

- Better performance. All disks contribute to the overall IOPS. No disks sit idle while reserved as a hot spare, and depending on the number of disks in your array, the number of hot spares adds up. Next up for me is to rerun my baseline performance test and see if I can measure this.
- The lifetime of disks increases. On each disk, a portion is set aside as sparing capacity. This leads to an extra amount of under-provisioning. It slightly reduces the workload on each drive and gives the storage controller additional capacity from which to perform wear-leveling operations, thus increasing the life span.
- Faster rebuilds. This is the big one. As we can now read from AND write to many disks, the rebuild speed increases significantly. With ever bigger disks this is something you need, and it was long overdue. But it’s here! It also allows for fast redistribution when a failed disk is replaced. On top of that, when a disk is marked suspect before it actually fails, its data is first copied to spare capacity. Only when that copy is done is the original data on the failing disk taken out of use and the disk marked as failed for replacement. This copy operation is less costly than a rebuild.
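To put some rough numbers on the performance and rebuild benefits, here is a back-of-the-envelope model in Python. It is my own simplification, not how SCOS actually schedules rebuilds or distributes spare space. It assumes every drive delivers the same IOPS and write throughput, that a classic rebuild is bottlenecked by the single hot spare that absorbs all writes, and that with distributed sparing the writes spread evenly over all surviving drives. It ignores controller, RAID parity and back-end bus limits. The class and function names and all the numbers in the example (24 drives, 2 hot spares, 150 IOPS and 150 MB/s per drive, 8000 GB to rebuild) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Array:
    """Hypothetical, simplified model of one disk tier."""
    disks: int            # total drives in the tier
    hot_spares: int       # drives reserved as classic hot spares
    iops_per_disk: float  # per-drive random IOPS (assumed identical for all drives)
    write_mb_s: float     # sustained per-drive write throughput in MB/s


def active_disks(a: Array, distributed: bool) -> int:
    """Drives serving host I/O: all of them with distributed sparing."""
    return a.disks if distributed else a.disks - a.hot_spares


def aggregate_iops(a: Array, distributed: bool) -> float:
    """Idealised aggregate IOPS: active drives times per-drive IOPS."""
    return active_disks(a, distributed) * a.iops_per_disk


def rebuild_hours(a: Array, data_gb: float, distributed: bool) -> float:
    """Idealised rebuild time: data to rewrite divided by target write bandwidth.

    Classic: one hot spare absorbs every write.
    Distributed (assumption): writes land evenly on the spare capacity
    of all surviving drives, i.e. every drive except the failed one.
    """
    targets = (a.disks - 1) if distributed else 1
    seconds = (data_gb * 1024) / (a.write_mb_s * targets)  # 1 GB = 1024 MB here
    return seconds / 3600


arr = Array(disks=24, hot_spares=2, iops_per_disk=150, write_mb_s=150)
print(aggregate_iops(arr, False), aggregate_iops(arr, True))  # 3300.0 3600.0
print(round(rebuild_hours(arr, 8000, False), 1))              # 15.2
print(round(rebuild_hours(arr, 8000, True), 2))               # 0.66
```

Real-world rebuilds will be nowhere near this linear, since the controllers and back end become the bottleneck long before all drives are saturated. The point is the shape of the curve: with classic hot spares the rebuild time is fixed by one drive, while with distributed spares it shrinks as you add drives.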