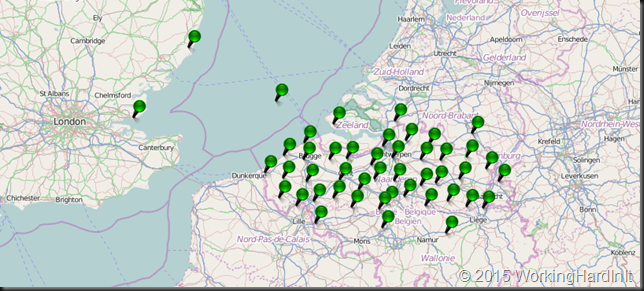

Diminished services on a GPS positioning network

Over the past couple of days, latency had been negatively affecting a near-real-time GPS positioning service that allows users to correct their GPS measurements in real time.

That service is really handy when you’re a surveyor, and it saves money by avoiding extra GIS post-processing work later. It becomes essential, however, when you rely on your GPS coordinates to farm automatically, fly aerial photogrammetry patterns, create mobile mapping data, build dams or railways, steer your dredging ships and maneuver ever bigger ships through harbor locks.

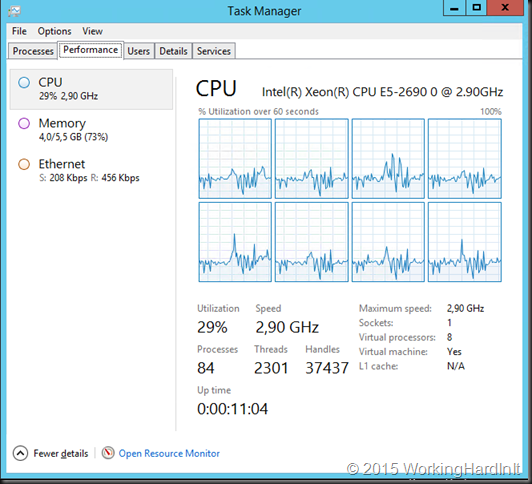

It was clear this needed to be resolved. After checking for network issues we pretty much knew that the recently spiking CPU load was the cause. This was partially due to the growth in users and use cases, and partially due to a new software version that definitely requires a few more CPU cycles.

The GPS positioning service runs on multiple virtual machines, on separate LUNs, on separate hosts, and those hosts are in separate racks. All of this is replicated to a second data center. They have high to continuous availability with Microsoft Failover Clustering and leverage Kemp LoadMaster load balancing. Together with the operations team we moved the load away from each VM in turn, shut it down, doubled the vCPU count and restarted the VM. Rinse and repeat until all VMs had been assigned more vCPUs.
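The one-VM-at-a-time procedure described above can be sketched as a small in-memory simulation. This is only an illustration of the ordering of the steps, not the tooling the team used: the `VM` class, the `rolling_resize` function, the VM names and the starting vCPU counts are all hypothetical, and in real life each step would go through the load balancer and hypervisor management tools.

```python
from dataclasses import dataclass


@dataclass
class VM:
    """In-memory stand-in for one virtual machine (hypothetical model)."""
    name: str
    vcpus: int
    running: bool = True
    in_pool: bool = True  # whether the load balancer sends traffic to it


def rolling_resize(vms, factor=2):
    """Resize one VM at a time so the remaining VMs keep serving users."""
    log = []
    for vm in vms:
        # Refuse to drain a VM when no other VM is left to take its load.
        others_serving = [
            o for o in vms if o is not vm and o.running and o.in_pool
        ]
        if not others_serving:
            raise RuntimeError(f"no other VM can take the load of {vm.name}")

        vm.in_pool = False   # 1. move the load away from this VM
        vm.running = False   # 2. shut it down
        vm.vcpus *= factor   # 3. change the vCPU count while it is off
        vm.running = True    # 4. start it again
        vm.in_pool = True    # 5. put it back behind the load balancer
        log.append(f"{vm.name}: now {vm.vcpus} vCPUs")
    return log


# Hypothetical names and vCPU counts, for illustration only.
vms = [VM("gps-01", 2), VM("gps-02", 2), VM("gps-03", 2)]
for line in rolling_resize(vms):
    print(line)
```

The check at the top of the loop is the point of the exercise: a VM is only taken out when at least one other VM is still running and in the pool, which is what keeps the service available while the resize is rolled out.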

The result was a dramatic improvement: service response times went back to normal.

They can move fast and efficiently

All this was done fast. They have the power to decide and act to resolve such issues on their own responsibility. What makes this possible is that they operate in tight-knit teams that cut across bureaucracy and hierarchies and make sure that the people who need to be involved can communicate fast and effectively (even if they are spread over different locations). They have a design for high availability and a vertically integrated approach to the solution stack that spans every resource (CPU, Memory, Storage, Network and Software), combined with a great app owner and rock solid operational excellence (Peopleware) to enable the Site Resilience side of the story. Fast & efficient.

I’m proud to have helped design and deliver this service and I’ll be ready and willing to help design vNext of this solution in the near future. We moved it from hardware to a virtualized solution based on Hyper-V in 2008 and have not regretted one minute of it. The operational capabilities it offers are too valuable for that and banking on Hyper-V has proved to be a winner!

Would Hot Add CPU Capability have made this easier?

Yes, faster for sure. The process they have now isn’t that difficult. Now, would I like hot add vCPU capabilities in Hyper-V? Yes, absolutely. I do realize, however, that not every application might be able to handle this without restarting, making the exercise a bit of a moot point in those cases.

Why some people have not virtualized yet, I do not know (try to double the CPUs on your hardware servers easily and fast without leaving the comfort of your home office). I do know, however, that if you haven’t, you are missing out on a lot of capabilities & operational benefits.