Last Friday I was working on some Windows Server 2012 Hyper-V networking designs and investigating the benefits & drawbacks of each. Some other fellow MVPs were also working on designs in that area and some interesting questions & answers came up (thank you Hans Vredevoort for starting the discussion!)

You might have read that for low cost, high value 10Gbps networks solutions I find the switch independent scenarios very interesting as they keep complexity and costs low while optimizing value & flexibility in many scenarios. Talk about great ROI!

So now let’s apply this scenario to one of my (current) favorite converged networking designs for Windows Server 2012 Hyper-V. Two dual NIC LBFO teams. One to be used for virtual machine traffic and one for other network traffic such as Cluster/CSV/Management/Backup traffic, you could even add storage traffic to that. But for this particular argument that was provided by Fiber Channel HBAs. Also with teaming we forego RDMA/SR-IOV.

For the VM traffic the decision is rather easy. We go for Switch Independent with Hyper-V Port mode. Look at Windows Server 2012 NIC Teaming (LBFO) Deployment and Management to read why. The exceptions mentioned there do not come into play here and we are getting great virtual machine density this way. With lesser density 2-4 teamed 1Gbps ports will also do.

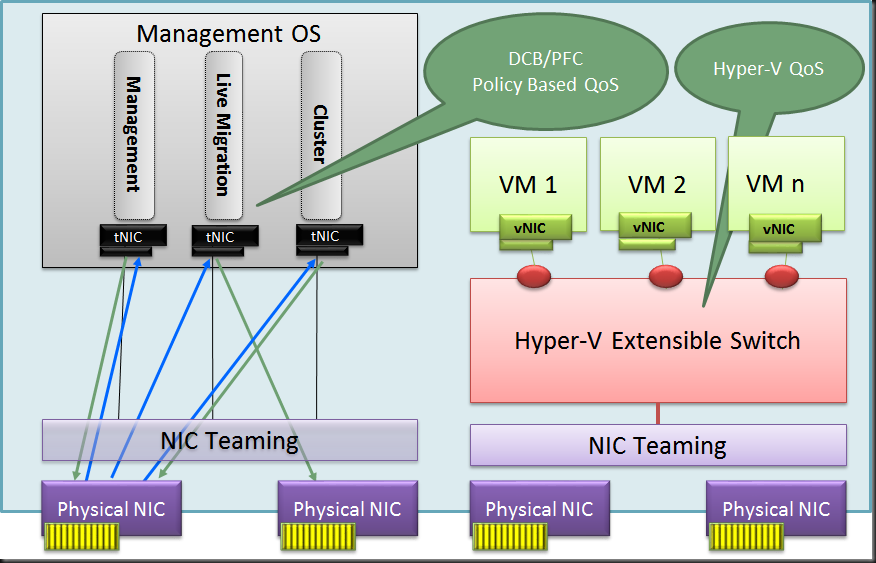

But what about the team we use for the other network traffic. Do we use Address hash or Hyper-V port mode. Or better put, do we use native teaming with tNICs as shown below where we can use DCB or Windows QoS?

Well one drawback here with Address Hash is that only one member will be used for incoming traffic with a switch independent setup. Qos with DCB and policies isn’t that easy for a system admin and the hardware is more expensive.

So could we use a virtual switch here as well with QoS defined on the Hyper-V switch?

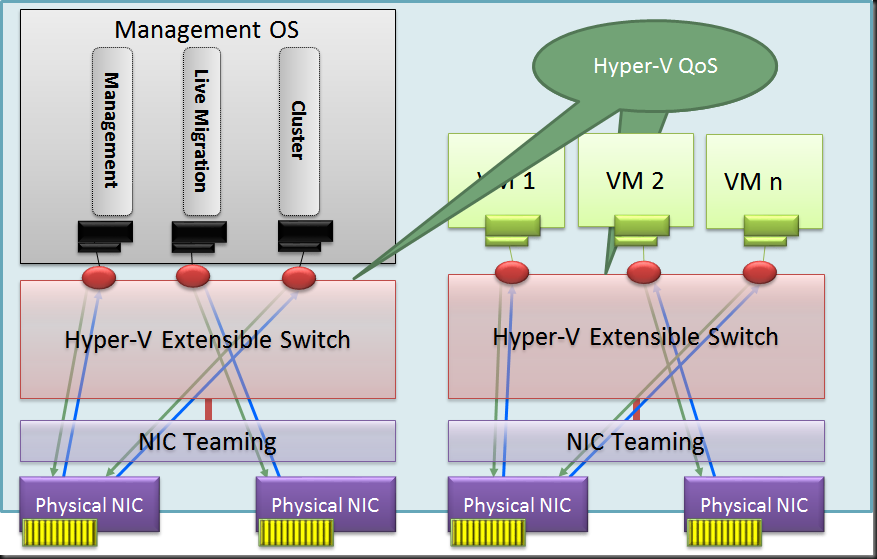

Well as it turns out in this scenario we might be better off using a Hyper-V Switch with Hyper-V Port mode on this Switch independent team as well. This reaps some real nice benefits compared to using a native NIC team with address hash mode:

- You have a nice load distribution of the different vNIC’s send/receive traffic over a single member of the NIC team per VM. This way we don’t get into a scenario where we only use one NIC of the team for incoming traffic. The result is a better balance between incoming and outgoing traffic as long an none of those exceeds the capability of one of the team members.

- Easy to define QoS via the Hyper-V Switch even when you don’t have network gear that supports QoS via DCB etc.

- Simplicity of switch configuration (complexity can be an enemy of high availability & your budget).

- Compared to a single Team of dual 10Gbps ports you can get a lot higher number of VM density even they have rather intensive network traffic and the non VM traffic gets a lots of bandwidth as well.

- Works with the cheaper line of 10Gbps switches

- Great TCO & ROI

With a dual 10Gbps team you’re ready to roll. All software defined. Making the switches just easy to use providers of connectivity. For smaller environments this is all that’s needed. More complex configurations in the larger networks might be needed high up the stack but for the Hyper-V / cloud admin things can stay very easy and under their control. The network guys need only deal with their realm of responsibility and not deal with the demands for virtualization administration directly.

I’m not saying DCB, LACP, Switch Dependent is bad, far from. But the cost and complexity scares some people while they might not even need. With the concept above they could benefit tremendously from moving to 10Gbps in a really cheap and easy fashion. That’s hard (and silly) to ignore. Don’t over engineer it, don’t IBM it and don’t go for a server rack phD in complex configurations. Don’t think you need to use DCB, SR-IOV, etc. in every environment just because you can or because you want to look awesome. Unless you have a real need for the benefits those offer you can get simplicity, performance, redundancy and QoS in a very cost effective way. What’s not to like. If you worry about LACP etc. consider this, Switch independent mode allows for nearly no service down time firmware upgrades compared to stacking. It’s been working very well for us and avoids the expense & complexity of vPC, VLT and the likes of that. Life is good.