In my blog post Workaround for Exchange 2010 & Outlook 2003 Shared Calendars Connectivity Issues ("The connection to the Microsoft Exchange Server is unavailable. Outlook must be online or connected to complete this action.") I mentioned the excellent work of the Microsoft support engineer on the case. Well, he has published a very content-rich post about all this, and about other possible causes of the error notification, on his TechNet blog: Things you need to know about "The connection to the Microsoft Exchange server is unavailable. Outlook must be online or connected to complete this action" prompts in an Outlook 2003–Exchange 2010 world. It's a must-read for anyone dealing with this error message.


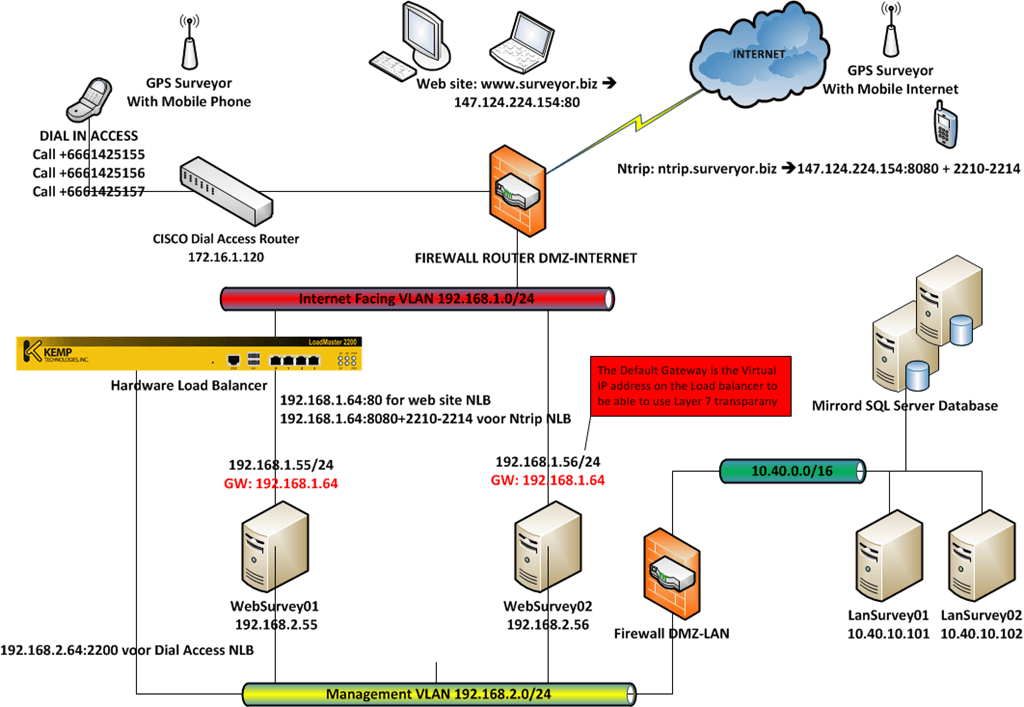

A Hardware Load Balancing Exercise With A Kemp Loadmaster 2200

I recently had the opportunity to get my hands on a hardware load balancer for a project where, due to limitations in the configuration of the software, Windows Network Load Balancing could not be used. The piece of kit we got was a LoadMaster 2200 by Kemp Technologies. A GPS network/software services solution (NTRIP Caster) for surveyors needed load balancing, not only to distribute the load but also to help with high availability. The software could not be configured to use the virtual IP address of a Windows NLB cluster, which meant we had to take the load balancing off the Windows server nodes. I had been interested in Kemp gear for a while (with some Exchange implementations in mind), but until recently I had not had the chance to work with a LoadMaster.

We have two networks involved. The first, 192.168.2.0/24, serves as a management and back-office network to which the dial-in calls are routed and load balanced across two separate servers, WebSurvey01 and WebSurvey02 (VMs running on Hyper-V). The other network, 192.168.1.0/24, serves the internet traffic for the web site and the NTRIP data for the surveyors, which is also load balanced across WebSurvey01 and WebSurvey02. The application needs to see the IP addresses of the clients, so we want transparency. To achieve this, the servers must use the Kemp load balancer's address on the VIP network as their default gateway. That means we can't connect to those applications from the same subnet, but that is not a requirement here: the clients dial in or come in from the internet. A logical illustration of such a surveyor's network configuration (not a complete overview or an exact network diagram) is shown below.
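Why same-subnet clients don't work with transparency comes down to how the real server routes its reply. The load balancer rewrites only the destination (VIP to real server) and leaves the client's source address intact, so the reply must flow back through the load balancer to get the VIP restored as its source. The sketch below models that decision under a deliberately simplified assumption: the server has one interface, no extra static routes, and only distinguishes "on-link" from "use the default gateway". The function name and the server address 192.168.1.21 are hypothetical illustrations, not values from this project.

```python
import ipaddress


def reply_path(server_ip: str, prefix_len: int, client_ip: str) -> str:
    """Hypothetical helper: which way a real server's reply would go.

    Simplified model: one interface, no static routes, so a destination is
    either on the server's own subnet or reached via the default gateway.
    """
    subnet = ipaddress.ip_network(f"{server_ip}/{prefix_len}", strict=False)
    if ipaddress.ip_address(client_ip) in subnet:
        # On-link client: the server answers directly, bypassing the load
        # balancer, so the reply carries the real server IP, not the VIP,
        # and the client drops it.
        return "direct"
    # Off-link client: the reply goes to the default gateway, which in a
    # transparent setup must be the load balancer so it can restore the VIP.
    return "gateway"


if __name__ == "__main__":
    # Hypothetical WebSurvey01 address on the 192.168.1.0/24 network.
    print(reply_path("192.168.1.21", 24, "203.0.113.7"))   # internet client
    print(reply_path("192.168.1.21", 24, "192.168.1.50"))  # same-subnet client
```

The first call prints `gateway` and the second prints `direct`, which is exactly the case the setup above rules out.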

Why am I using layer 7 load balancing? Well, layer 4 is the transport layer, which is transparent but not very intelligent and, as such, not protocol aware, while layer 7 is the application layer and is protocol aware. I want the latter, as it gives me the possibility to check the health of the underlying service, filter on content, do funky stuff with headers (which lets us pass the client's IP to the destination server via the X-Forwarded-For header when using layer 7), load balance traffic based on server load or service, etc. Layer 7 is not as fast as layer 4, as there is more to do and more code to run, but as long as you don't overload the device that's not a problem: it has plenty of processing power.
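To make the X-Forwarded-For point a bit more concrete, here is a minimal sketch of how a backend application could recover the original client IP when a layer 7 load balancer is not running transparently and instead adds the client address to that header. It assumes the common convention that each proxy appends the address it received the request from; the helper name `client_ip_from_xff` and the load balancer address 192.168.1.10 are hypothetical. The header is only trusted when the request really arrives from a proxy you control, because any client can send a forged X-Forwarded-For.

```python
from collections.abc import Mapping


def client_ip_from_xff(headers: Mapping[str, str], peer_ip: str,
                       trusted_proxies: set[str]) -> str:
    """Best-effort recovery of the originating client IP (hypothetical helper).

    headers: HTTP request headers; names are matched case-insensitively.
    peer_ip: address of the TCP peer that connected to this server.
    trusted_proxies: load balancer / proxy addresses we control.
    """
    # Only a trusted proxy may speak for the client; otherwise use the peer.
    if peer_ip not in trusted_proxies:
        return peer_ip

    # HTTP header names are case-insensitive, so look the header up that way.
    xff = next((v for k, v in headers.items()
                if k.lower() == "x-forwarded-for"), "")

    # X-Forwarded-For reads "client, proxy1, proxy2"; each proxy appends
    # the address it received the request from.
    hops = [hop.strip() for hop in xff.split(",") if hop.strip()]

    # Walk from the right (nearest hop first) and skip our own proxies;
    # the first untrusted address is the client, as far as we can tell.
    for hop in reversed(hops):
        if hop not in trusted_proxies:
            return hop

    # No usable header (or only trusted hops): fall back to the peer.
    return peer_ip


if __name__ == "__main__":
    lb = {"192.168.1.10"}  # hypothetical load balancer address
    print(client_ip_from_xff({"X-Forwarded-For": "203.0.113.7"}, "192.168.1.10", lb))
    print(client_ip_from_xff({"X-Forwarded-For": "203.0.113.7"}, "198.51.100.9", lb))
```

The first call prints `203.0.113.7` (the request came through the load balancer), while the second prints `198.51.100.9`, because a header from an untrusted peer is ignored.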

The documentation for the KEMP LoadMaster is OK. But I really do advise you to get one, install it in a lab and play with all the options to test it as much as you can. Doing so will give you a pretty good feel for the product, how it functions, and what you can achieve with it. KEMP will provide you with a system to do just that if you ask. If you like it and decide to keep it, you can pay for it and it's yours. Otherwise, you can just return it. I had an issue in the lab due to a bad switch and my local dealer was very fast to offer help and support. I'm a happy customer so far. It's good to see more affordable yet very capable devices on the market. Smaller projects and organizations might not have the number of server nodes and the traffic volume to warrant high-end load balancers, but they still have needs that must be served, so there is a market for this. Just don't get into a "mine is bigger than yours" contest about products. Get the one that is the best bang for the buck considering your needs.

One thing I would like to see in the lower-end models is a redundant, hot-swappable power supply. It would make them more complete. One silly issue they should also fix in the next software update is that you can't have a terminal connection open until 60 seconds after booting, or the appliance might get stuck at 100% CPU load. Your own DoS attack at your fingertips. Update: I was contacted by KEMP and informed that they checked this issue out. The warning that you should not have the VT100 connected during a reboot refers to an issue that existed in the past but no longer does. This myth persists because it is listed on the sheet of paper marked "important", which is the first thing you see when you open the box. They told me they will remove it from the "important" sheet to help put the myth to rest and your mind at ease when you unbox your brand new KEMP equipment. I appreciate their follow-up and very open communication. From my experience, they seem to make sure their resellers are of the same mindset, as the reseller also provided speedy and correct information. As a customer, I appreciate that level of service.

The next step would be to make this setup redundant. At least that's my advice to the project team. Geographically redundant load balancing seems to be based on DNS. Unfortunately, a lot of surveying gear seems to accept only IP addresses, so I'll still have to see what possibilities we have to achieve that. No rush; getting that disaster recovery and business continuity site designed and set up will take some time anyway.

KEMP has virtual load balancers available for both VMware and Hyper-V, but not for its DR or Geo versions; those are still VMware only. The reason we used an appliance here is the need to make the load balancer as independent as possible of any hardware (storage, networking, host servers) used by the virtualization environment.

The Dilbert® Life Series: Enterprise Architecture Revisited One Year Later

The Dilbert® Life series is a string of posts on corporate culture from hell and dysfunctional organizations running wild. This can be quite shocking and sobering. The amount of damage that can be done by "merely" taking solid technology, methodologies, people and organizations and then abusing the hell out of them is amazing. A sense of humor will help when reading this. If you need to live in a sugar-coated world where all is well and bliss, and think all you do is close to godliness, stop reading right now and forget about these blog entries. It's going to be dark. Pitch black at times actually, with a twist of humor, if you can laugh at yourself that is. And no, there is no light to shine on things, not even when you light it. You see, pointing a beam into the vast empty darkness of human nature doesn't make you see anything. You just realize there is an endless, vast and cold emptiness out there. This is not unlike the cerebral content of way too many people I come across in this crazy twilight zone called "the workplace". I believe some US colleagues refer to those carbon-based life forms as "sheeple".

Last year my very first blog post (https://blog.workinghardinit.work/2010/01/16/hello-world/) was about the one and only meeting I ever had with the Enterprise Architecture consultants who came in to help out at a place where I do some IT Infrastructure Fu. Now, one year and lots of time, money, training and PowerPoint slide decks after that meeting, the results on the ground are nowhere to be seen. Sure, there were lots of meetings, almost none of which I attended, unless enterprise architecture was dragged into an IT-related meeting on some other equally vague action item, like the IT strategy that was never heard of again. They've also produced some new job specifications and plenty of lip service, and they'll probably hire some more consultants to help out in 2011. But for now, any interaction between Enterprise Architecture and their IT infrastructure, let alone impact on it, is nowhere to be found.

We put a good infrastructure plan in place for them. It's pretty solid for 2011, pretty decent for 2012 and more of a road map for the 2013-2014 time span. That means it's flexible, as in IT the world can change fast, very fast. But none of this came about due to the insights, needs, demands or guidance of any enterprise architecture, IT strategy or business plan. No, it's past experience and gut feeling, knowing the culture of the organization, etc. Creating strategies and building architectures is difficult enough in the best of circumstances. Combine this with the fact that there is a bunch of higher pay grade roles up for grabs and the politics become very dominant. Higher pay grades, baby? What do I need to get one? Skills and expertise in a very critical business area, of course! Marketing yourself as a trusted business advisor, thought leader and architect becomes extremely important. As you can imagine, getting the job done becomes a lot more difficult, and not for technical reasons. My prediction for 2011 is that by the end of the year those pay grades will have been assigned. Together with a boatload of freshly minted middle managers, who'll be proud as hell and will need to assert their newfound status, they'll start handing out work to their staff. Will that extra work materialize into results or only hold them back from making real progress? Well, we'll need to wait for 2012 to know, as 2011 will be about politics.

Basically, from the IT infrastructure point of view and experience, we have not yet seen an Enterprise Architecture, and I don't think they'll have one in the next 12 months. Perhaps in 24 to 36 months, but by then the game plan in IT infrastructure will be up and running. So realistically, I expect that if, against expectations, it leads anywhere, the impact of an Enterprise Architecture will be felt in 2014 and beyond. That means an entirely new ball game, which will need a revised architecture. The success of the effort will no doubt be that they detected the need to change. This sounds uncomfortably similar to the IT strategy plan they had made. So for now we'll do for them what we've always done. We'll work with one-year plans and two- to three-year road maps, combined with a vision on how to improve the IT infrastructure. The most important thing is to stay clear of ambition and politics. Too much of that makes for bad technical decisions.

You've got to love corporate bull. They don't lie, no sir, they just sell bull crap. Which is worse, as truth or lies don't even matter, only the personal agendas do. Liars at least, by the very fact of lying, acknowledge the value of truth, so much so that they'd rather you didn't know it. Most consultancy firms send out kids who are naïve enough to believe the scripts and don't even realize they are talking crap. They are told over and over again that they are right and the best, and they like to believe this so much that they really do. It's a bit like civil servants at the EU. Pay people double their market value, sweet-talk their egos all day long and they will become prophets for the religion of the day. No, I'm not saying Enterprise Architecture is bull crap. I'm saying that way too many people and companies claiming to do enterprise architecture are turning it into exactly that: IT strategies and architectures so empty and void of content that all those binders are thrown in a drawer, never to be seen again. A fool with a tool is still but a fool. Agile methodologies or tools don't make your programmers agile gurus, just like owning a race car doesn't make you a race car driver. All of this has happened before, and all of this will happen again. Every new, innovative process, methodology or concept falls victim to this. The money-grabbing sales crowd gets their paws on it and starts selling it as competitive advantage, or even innovation in a bottle, to the corporate sheeple and management failures who should know better. They end up with less money, loads of wasted time and a shitload of dead trees. As a side note, this whole "* Architect" thing has run its inflationary course. We need a new professional status currency once more. Take care and keep laughing!


Tech Ed 2010 Tuesday November 9th & Wednesday November 10th

Well, after a somewhat disappointing start due to cutbacks (we got some sort of laundry bag this year) and some very hungry vendors and partners in the exhibition hall (they ate the entire lunch of the pre-conference delegates)*, I've spent two very busy days at Tech Ed Europe.

* I should return the favor by grabbing a vast amount of high-quality T-shirts and other swag, but I don't have the time. I should outsource this, but Azure is not yet offering swag hunting as a service (SHAAS) in the cloud.

First of all, I attended a lot of sessions on Lync, SCVMM vNext, High Availability, Virtualization, Exchange, …. In between, I had some very informative discussions with storage vendors (I still have some to go). Apart from the technical info, it's fun to see how they deal with critical customer feedback or doubts.

Another fun part was running into Twitter and blog acquaintances. Pretty cool people in the IT world: helpful, easy-going, very knowledgeable and fun guys and gals.

The TLC (Technical Learning Center) was very well staffed with a bunch of experts: MVPs, partners and Microsoft personnel. I had very engaging and motivating talks with lots of them.

System Center Virtual Machine Manager vNext is looking very promising. But beyond the publicly available information I’m under NDA.

I do think they should watch the quality level of their sessions. Either we need more 400-level sessions or we need to deal with the inflation going on; 400 sometimes seems to be the new 300. Another option would be to introduce 500-level sessions.

This post will go up with some delay, as I have no internet access where I'm writing it.