Protecting your data is paramount

It is often stated about storage engineers that they have only one rule and that is to never lose data. This is true and it pretty much determines all of their actions. But in reality, this is a top priority for all of us. It has always been like this, but in a full stack world, it is truer than ever. The prime directive of anyone working in ICT, in any role, from helpdesk to CIO, from IT Pro to developer, is to keep services up and running. You have to do this in order to be able to deliver value to the customers. That is how you make a living.

And guess what? In order for services to be up and running, you also need to have any required data protected, available and usable. It’s no good to have a developer corrupt the data logically or physically, have a CISO force encryption but lose the keys to the kingdom, or have ransomware render your backups useless. The list of bad things that can and do happen is long and sobering.

It’s no good to have data available that you cannot use or read. This is true for all data, no matter its nature or where it resides. Stating that, technically, the data is not lost because you know where it is, you just can never access it again, may be funny up to a point to people who are not involved or affected. To those who are, it is no good at all and far from a joke.

The reality is that bad things do happen, no matter how hard you try to prevent them. So, you should never bank on solitary solutions. Always give yourself multiple options. From the good old 3-2-1 rule to air-gap studies to not banking on one type of storage, technology, location, process or person, it is all part of adequate to excellent protection. If the business truly and honestly states that its data is the gold that makes it thrive, it must act to protect that data accordingly.

Are you going then?

No, unfortunately, I am otherwise engaged on the days of the conference. Didier, how can you tell us to invest in attending VeeamON 2019 when you are not even going yourself? First of all, I’d go if I did not have prior engagements. I have done so in the past. I learned a lot and I’m still in touch with customers and Veeam employees I met there. Secondly, an investment does not always involve my physical person being on the scene. It is about getting the right knowledge and insights to the right people in the right places. That means sending colleagues, employees, and customers to conferences and training, and not always yourself. You develop the skills, knowledge, and capabilities of your organization. Data availability is a primary concern and a goal that I can only achieve when it does not depend solely on me. That too is investing. Helping others learn, grow and succeed helps everyone out. Needless to say, I wish I were able to go.

Attend VeeamON 2019

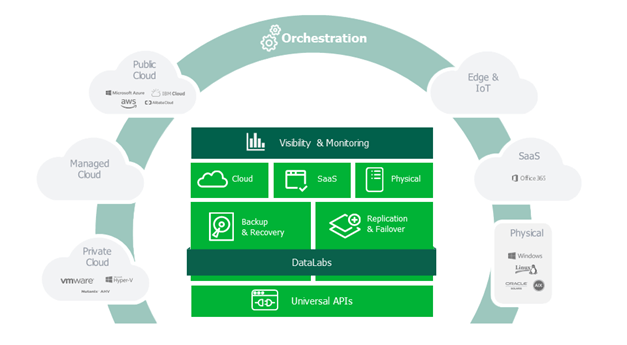

Given the important task of keeping data available, and the responsibility to protect it and to be able to recover it when things go wrong, you have a business case to attend VeeamON 2019. In today’s diverse, complex, and fragmented IT ecosystem, that data is spread across more places, in different layers and in more elaborate solutions than ever before. This makes data protection and availability a challenge.

When business happens at the speed of light, you also have to make data available at that pace when required, in different locations and for various use cases. Data mobility is a reality, and it also has to happen in a secure and workable manner.

We can use all the help we can get to make this happen. The good news is you do not have to go it alone. Attend VeeamON 2019 to give yourself a head start. Invest in your business and your staff. Help them succeed. Register!

You can tap into the collective brain of all the attendees. Talk to knowledgeable and experienced practitioners. Learn from your peers and Veeam experts. Gain insights from others on how to approach the challenges you face. Have that discussion to gain a better understanding of what you can do and how. Get your compass aligned so you can confirm you are on track to deliver what is asked of you. After all, it is not a small or benign job to have to ensure the safety of your organization’s 21st-century gold, its data. Discover what Veeam is doing in R&D to help you meet future requirements wherever your data lives.

Don’t forget that others can learn from you as well. Consider responding to the request for proposals. Share what you know and have learned. Contribute and learn whilst teaching. Invest in attending VeeamON 2019.