Last Friday I was working on some Windows Server 2012 Hyper-V networking designs and investigating the benefits & drawbacks of each. Some fellow MVPs were also working on designs in that area, and some interesting questions & answers came up (thank you, Hans Vredevoort, for starting the discussion!)

You might have read that, for low-cost, high-value 10Gbps network solutions, I find the switch independent scenarios very interesting: they keep complexity and costs low while optimizing value & flexibility in many scenarios. Talk about great ROI!

So now let’s apply this scenario to one of my (current) favorite converged networking designs for Windows Server 2012 Hyper-V: two dual-NIC LBFO teams. One is used for virtual machine traffic and one for the other network traffic, such as Cluster/CSV/Management/Backup. You could even add storage traffic to that, but in this particular case storage was provided by Fibre Channel HBAs. Also note that with teaming we forgo RDMA/SR-IOV.

For the VM traffic the decision is rather easy: we go for Switch Independent with Hyper-V Port mode. Look at the "Windows Server 2012 NIC Teaming (LBFO) Deployment and Management" guide to read why. The exceptions mentioned there do not come into play here, and we get great virtual machine density this way. With lower density, 2-4 teamed 1Gbps ports will also do.

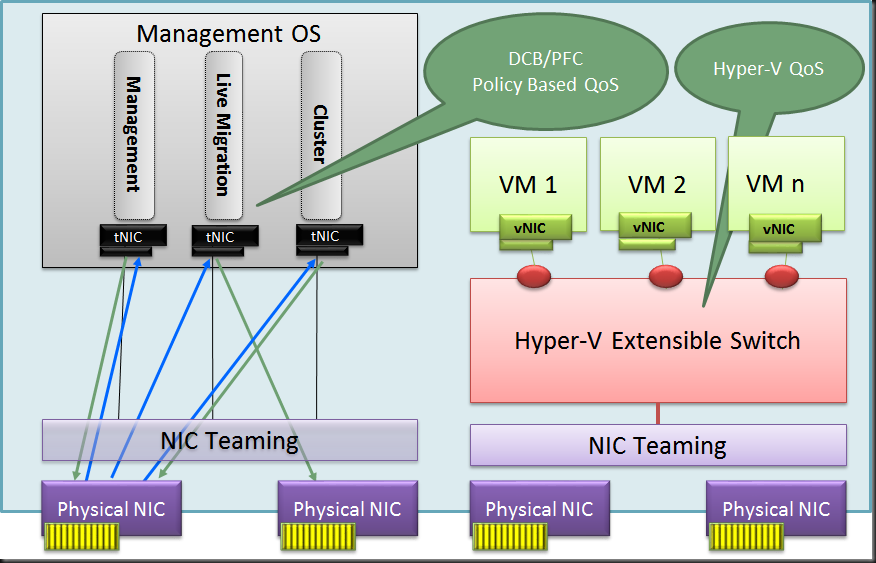

But what about the team we use for the other network traffic? Do we use Address Hash or Hyper-V Port mode? Or, better put, do we use native teaming with tNICs, as shown below, where we can use DCB or Windows QoS?

Well, one drawback of Address Hash here is that in a switch independent setup only one team member is used for incoming traffic. QoS with DCB and policies isn’t that easy for a system admin, and the hardware is more expensive.

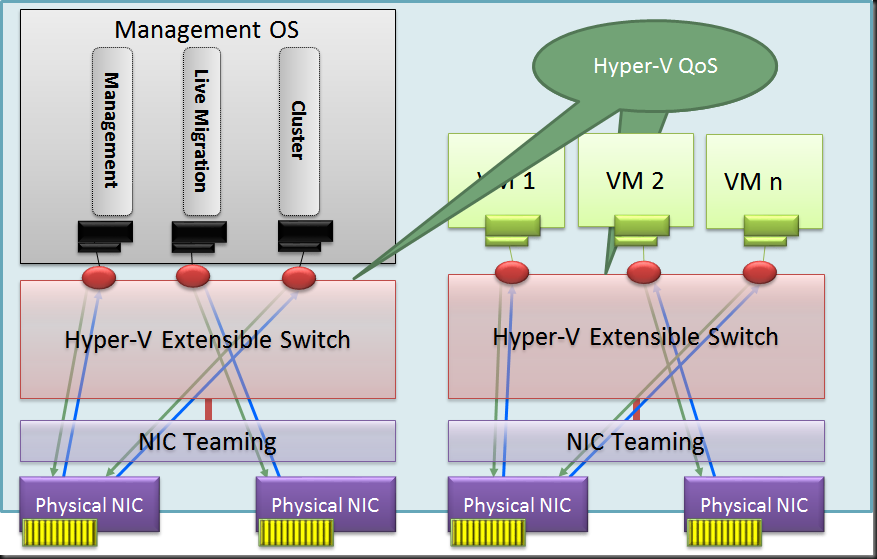
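To make the incoming traffic difference concrete, here is a toy Python model of a two-member switch independent team. It is a sketch under simplifying assumptions, not the actual Windows NIC Teaming implementation: with Address Hash, all inbound traffic is assumed to arrive on the primary member; with Hyper-V Port, each vSwitch port is assumed to be placed round-robin on a member and to receive on that same member. The member and interface names are hypothetical.

```python
"""Toy model: which team member receives inbound traffic on a two-member
switch independent team. Simplified assumptions, not the real algorithm."""

from collections import Counter

MEMBERS = ["NIC1", "NIC2"]  # hypothetical team member names


def inbound_address_hash(interfaces):
    # Switch independent + Address Hash: all inbound traffic is received
    # on a single (primary) member, whatever interface it is destined for.
    return {name: MEMBERS[0] for name in interfaces}


def inbound_hyperv_port(interfaces):
    # Hyper-V Port: each vSwitch port is affinitized to one member and its
    # inbound traffic arrives there. Round-robin placement is an assumption.
    return {name: MEMBERS[i % len(MEMBERS)] for i, name in enumerate(interfaces)}


def load_per_member(placement):
    # Count how many interfaces receive their traffic through each member.
    return dict(Counter(placement.values()))


if __name__ == "__main__":
    interfaces = ["Management", "Cluster", "CSV", "Backup"]
    print("Address Hash:", load_per_member(inbound_address_hash(interfaces)))
    print("Hyper-V Port:", load_per_member(inbound_hyperv_port(interfaces)))
```

With four interfaces, Address Hash puts all four on `NIC1` for receive, while Hyper-V Port spreads them two and two over `NIC1` and `NIC2`.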

So could we use a virtual switch here as well with QoS defined on the Hyper-V switch?

Well, as it turns out, in this scenario we might be better off using a Hyper-V Switch with Hyper-V Port mode on this switch independent team as well. This reaps some really nice benefits compared to using a native NIC team in Address Hash mode:

- You get a nice distribution of the different vNICs’ send/receive traffic across the team, with each vNIC’s traffic using a single team member. This way we don’t end up using only one NIC of the team for incoming traffic. The result is a better balance between incoming and outgoing traffic, as long as none of the vNICs exceeds the capacity of a single team member.

- Easy to define QoS via the Hyper-V Switch even when you don’t have network gear that supports QoS via DCB etc.

- Simplicity of switch configuration (complexity can be an enemy of high availability & your budget).

- Compared to a single team of dual 10Gbps ports, you can get a much higher VM density, even when the VMs have rather intensive network traffic, and the non-VM traffic gets lots of bandwidth as well.

- Works with the cheaper line of 10Gbps switches

- Great TCO & ROI
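As a rough illustration of what weight-based QoS on the Hyper-V Switch buys you, the sketch below computes the minimum bandwidth each busy vNIC would get under contention. It is a simplified model, assumed rather than taken from the vSwitch scheduler: all vNICs compete for the same 10Gbps team member and each gets `weight / sum(weights)` of it. The weights (valid `MinimumBandwidthWeight` values run from 0 to 100) and vNIC names are hypothetical.

```python
"""Simplified model of MinimumBandwidthWeight under contention.

Assumption: every vNIC listed is busy on the same link, and each is
guaranteed weight / sum(weights) of it. Idle vNICs leave their share
to the others, so these numbers are floors, not caps."""

LINK_GBPS = 10.0  # one 10Gbps team member


def guaranteed_share(weights, link_gbps=LINK_GBPS):
    """Return the minimum Gbps each busy vNIC gets when all of them contend."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one weight must be non-zero")
    return {name: link_gbps * w / total for name, w in weights.items()}


if __name__ == "__main__":
    # Hypothetical weights for the non-VM traffic team.
    weights = {"Management": 10, "Cluster": 10, "LiveMigration": 40, "Backup": 40}
    for name, gbps in guaranteed_share(weights).items():
        print(f"{name:>13}: {gbps:.1f} Gbps minimum")
```

Here Management and Cluster each keep at least 1.0 Gbps while Live Migration and Backup each get at least 4.0 Gbps when everything is busy at once.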

With a dual 10Gbps team you’re ready to roll. It’s all software defined, which makes the switches simple, easy-to-use providers of connectivity. For smaller environments this is all that’s needed. Larger networks might need more complex configurations higher up the stack, but for the Hyper-V / cloud admin things can stay very easy and under their control. The network guys need only deal with their own realm of responsibility and don’t have to deal directly with the demands of virtualization administration.
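To show how little is involved, here is a small Python script that prints the PowerShell commands for the non-VM traffic team: a switch independent team with Hyper-V Port load balancing, a vSwitch with weight-based minimum bandwidth, and management OS vNICs with VLANs and weights. It is a sketch, not the exact configuration used here: the adapter, team, switch and vNIC names, VLAN IDs and weights are all hypothetical placeholders, and IP addressing is left out. Review the output before running it on a Windows Server 2012 Hyper-V host.

```python
"""Print PowerShell commands for a converged non-VM traffic team.

All names, VLAN IDs and weights are hypothetical placeholders."""

TEAM = "Team-Infra"                   # hypothetical team name
SWITCH = "vSwitch-Infra"              # hypothetical vSwitch name
MEMBERS = ["10G-Port1", "10G-Port2"]  # hypothetical physical NIC names

# vNIC name -> (VLAN ID, minimum bandwidth weight)
VNICS = {
    "Management": (10, 10),
    "Cluster": (20, 10),
    "LiveMigration": (30, 40),
    "Backup": (40, 40),
}


def build_commands():
    members = ",".join(f'"{m}"' for m in MEMBERS)
    cmds = [
        # Switch independent team with Hyper-V Port load distribution
        f'New-NetLbfoTeam -Name "{TEAM}" -TeamMembers {members} '
        f"-TeamingMode SwitchIndependent -LoadBalancingAlgorithm HyperVPort",
        # vSwitch on the team; the minimum bandwidth mode is chosen at creation
        f'New-VMSwitch -Name "{SWITCH}" -NetAdapterName "{TEAM}" '
        f"-MinimumBandwidthMode Weight -AllowManagementOS $false",
    ]
    for name, (vlan, weight) in VNICS.items():
        cmds += [
            # One management OS vNIC per traffic type
            f'Add-VMNetworkAdapter -ManagementOS -Name "{name}" -SwitchName "{SWITCH}"',
            # Tag it with its own VLAN
            f'Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "{name}" '
            f"-Access -VlanId {vlan}",
            # Give it a relative minimum bandwidth weight
            f'Set-VMNetworkAdapter -ManagementOS -Name "{name}" '
            f"-MinimumBandwidthWeight {weight}",
        ]
    return cmds


if __name__ == "__main__":
    print("\n".join(build_commands()))
```

Generating the commands rather than typing them keeps the weights and VLANs in one table, which makes it easy to reuse the same design on every host.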

I’m not saying DCB, LACP or switch dependent teaming is bad, far from it. But the cost and complexity scare some people off, while they might not even need them. With the concept above they could benefit tremendously from moving to 10Gbps in a really cheap and easy fashion. That’s hard (and silly) to ignore. Don’t over-engineer it, don’t IBM it and don’t go for a server rack PhD in complex configurations. Don’t think you need to use DCB, SR-IOV, etc. in every environment just because you can or because you want to look awesome. Unless you really need the benefits those offer, you can get simplicity, performance, redundancy and QoS in a very cost-effective way without them. What’s not to like? If you worry about LACP etc., consider this: compared to stacking, switch independent mode allows firmware upgrades with nearly no service downtime. It’s been working very well for us and avoids the expense & complexity of vPC, VLT and the like. Life is good.

Hi

Great post, but I have one question about this: why use two separate vSwitches, one for management, cluster and live migration, and one dedicated to virtual machines? In your opinion, is it a bad idea to use one ‘big’ Hyper-V extensible switch that all interfaces attach to, including the management OS (so management, cluster and live migration all on the same vSwitch as the VMs)?

Regards

Stijn Verhoeven

No, it’s not bad. It all depends on the need. You could create one big 4-port team, for example, but that’s putting all your eggs in one basket, both for risk and for QoS configuration. Keeping them separated can be useful if, for example, you want to use up the bandwidth of an entire member for Live Migration. You could also add backups and storage into the mix. This is just one scenario showing where the concept of using a vSwitch for non-VM traffic comes in and why it can be useful. Perhaps you have 2*10Gbps and 2*1Gbps ports, and the decisions will fall along those lines. I, for example, keep the management NIC totally separated if I have no other means (DRAC/iLO or KVM over IP) to get to the server, just to make sure that I have a separate way to get to the machine. Some might consider this expensive overkill. It’s all based on needs, and those very much depend on workload, type of apps etc. But I like to make sure I have options when things go south. Always have a way out or in next to the main door. The power of it all is sometimes overwhelming, but it does allow for the best possible solution for the case at hand. And whatever you do, keep in mind the KISS principle with a twist of Einstein: “as simple as possible”.

Thx for the reply. It gives me another point of view regarding the Converged Fabric setup. I will take this into consideration in the next designs I have to make ;-). The separation of backup traffic is indeed a good argument for this kind of configuration.

Really nice post! I am working on a project right now involving a converged network design, and we are currently testing LACP with the MAC Addresses load balancing option. It’s working really well, but we are finding that automating the deployment on the network side is a little difficult. Before the OS has been deployed the port-channel has not been negotiated, so a single port of the LACP team is not in a forwarding state during PXE, and the server cannot PXE boot from WDS. This has made me consider switch independent mode, which may be more friendly for bare-metal deployment because the switch ports the host is plugged into do not require LACP negotiation. We also created two additional virtual switches, iSCSI-A and iSCSI-B, to let VMs connect to the iSCSI network where needed and use MPIO over the two fault domains. Each one has a -ManagementOS vNIC so the Hyper-V host can connect to iSCSI storage as well; we consider this the non-converged part of the design.

One of the other things I am finding really interesting is moving to a VMM deployment of this. While it’s currently straightforward to deploy a converged network design using PowerShell, deploying it from VMM (which I am currently researching) has proven to require a Hyper-V networking to VMM networking translation guide ;-).

Nice post! Thanks for sharing!

Cool, nice to hear you liked it

As long as you have a team underneath the vSwitch, you can do iSCSI to the guest with MPIO without needing separate vSwitches. See here: http://blogs.technet.com/b/askpfeplat/archive/2013/03/18/is-nic-teaming-in-windows-server-2012-supported-for-iscsi-or-not-supported-for-iscsi-that-is-the-question.aspx SCVMM is indeed another universe in terminology sometimes, and it begs for greenfield … in many cases.

Thanks for the reply. Yes, I agree we could have used NIC teaming, a single vSwitch and VLAN-tagged vNICs to differentiate between the two iSCSI VLANs. To maintain separate physical paths for each iSCSI fault domain (VLAN x and VLAN y), one iSCSI physical NIC is plugged into a physical switch serving VLAN x, while the other is plugged into another physical switch serving VLAN y (dedicated iSCSI switches); this logic is followed down to the storage as well. What we gain from this is an absolute guarantee that 50% of the iSCSI traffic takes a specific physical path, and that NIC teaming never pins both MPIO traffic flows to the same physical path at any given time, which could delay storage connectivity if that specific path failed. While this failure scenario is highly improbable, the real reason we did it was support from the storage vendor 🙂

Sorry for all the rambling to explain my scenario; in this case a picture truly would be worth a thousand words.

Thanks again for your response!

Thanks for the feedback. I think I got it even without the picture 🙂

Nice write-up. I’m in a unique situation where I have all the advanced switching and 10G NICs, and I’m having difficulty selecting a design. I have IBM BladeCenters with 10G virtual fabric switches. My blades have quad 10GbE ports (with an option for vNICs, which split each 10GbE port into 4×2.5Gb ports with manageable bandwidth). Following the Einstein-KISS rule, I’m leaning towards 2 x 10GbE using the Emulex iSCSI HBA and MPIO for iSCSI (the cards are dual purpose with DCB QoS, which also leaves the door open for SR-IOV on these). Then I would either LACP the two other 10GbE ports and use Hyper-V vNICs and QoS for VM-Data/CSV/LM/Mgmt, or use IBM’s vNIC solution, breaking the dual NICs into two sets of four physical NICs with allotted bandwidths (i.e. Mgmt 10%, CSV/LM 35%, VM-Data 55%) and teaming those as well (resulting teamed bandwidth: Mgmt 2Gb, CSV/LM 7Gb, VM-Data 11Gb).

My confusion, or maybe my “IBM-ing” it, comes with this in mind: I’m the only person; I’m the networking, systems, desktops and everything guy, with a team of A+ hardware techs. However, I’m well versed in switching and networking, so the configuration aspect is moot. My thought process tells me to go with the latter design, as I can take better advantage of RSS for the CSV/LM team and VMQ for the VM-Data team. My confusion might lie in not realizing that maybe I can do RSS and VMQ on a single team as long as I don’t assign the same cores? Also, even though I can’t take advantage of the minimum bandwidth feature, wouldn’t offloading or eliminating QoS from the hypervisor save CPU time?
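The teamed bandwidth figures in the comment above follow from simple arithmetic, sketched here in Python. It assumes, as the comment describes, that both 10GbE ports are carved with the same percentages and that the matching vNICs from the two ports are teamed. The result is the aggregate per team; in Hyper-V Port mode a single vNIC or VM would still be limited to one member’s share.

```python
"""Teamed bandwidth per traffic class for a per-port percentage split.

Assumes every port uses the same split and matching slices are teamed."""

PORT_GBPS = 10
PORTS = 2
SPLIT = {"Mgmt": 10, "CSV/LM": 35, "VM-Data": 55}  # percent of each port


def teamed_bandwidth(split=SPLIT, port_gbps=PORT_GBPS, ports=PORTS):
    """Aggregate Gb per traffic class across all teamed ports."""
    if sum(split.values()) > 100:
        raise ValueError("allocations exceed 100% of a port")
    return {name: port_gbps * pct / 100 * ports for name, pct in split.items()}


if __name__ == "__main__":
    for name, gbps in teamed_bandwidth().items():
        print(f"{name}: {gbps:g} Gb")  # Mgmt: 2, CSV/LM: 7, VM-Data: 11
```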

Any guidance would be appreciated. I’ve already dissected all of Aidan Finn’s book, blog posts and articles. I’ve been through your blog and many others.

Hi,

The moment you create a virtual switch on a NIC, you’ll leverage dVMQ but forgo RSS, and vice versa. What you’ll certainly want to test is whether IBM’s vNIC solution for cutting up the NICs plays well with Windows teaming/converged networking. If it does, the latter is indeed an attractive solution. You need the NICs for iSCSI storage, and without some serious testing & QoS I’d be very hesitant to combine iSCSI with CSV/LM. CSV-wise it’s also attractive to have that on other hardware, as CSV can save your bacon when the host loses storage connectivity.

Hope this helps a bit.

Thanks Didier,

The vNICs play well with NIC teaming. However, I lose the minimum bandwidth feature and must assign predetermined bandwidth. So, to understand you better: on the servers that have quad NICs (2 of them iSCSI HBAs), should I not use the HBA NICs, assigning 60% minimum QoS through DCB to iSCSI and the rest to LAN traffic (for CSV/LM)? This would simplify the config of the other 2 NICs, which could be set up as a simple 2-NIC converged VM-Data team. I understand your concern about CSV and my bacon; however, these are blades in an IBM BladeCenter H. So if there’s an uplink failure to the storage network, all NICs and blades will be affected. If there’s a failure on the blade, the NICs will all be dead and CSV redirection can’t do anything for me. I have an even tighter configuration where some of my blades only have dual 10Gb NICs, which are also iSCSI HBAs, and I have no choice but to share LAN and iSCSI traffic. Am I overthinking the setup by worrying about a converged team with a Hyper-V switch overloading one core during LM? (Keep in mind I have file servers, print servers and a 2,000-mailbox Exchange deployment, about 10,000 users in total.) My BladeCenters have the IBM Virtual Fabric 10G switch, on which I’ve configured an 80Gb LACP uplink dedicated to iSCSI traffic from the blades. I just don’t want to be doing it just because I can and have the features.

Thanks

-Rooz

Then you should just test to see if it’s OK for the iSCSI NICs and take it from there. If so, you’ve achieved your goal, and by the looks of it you’re well on your way.

Hi guys, is anyone using SMB for live migration? I think SMB doesn’t follow the live migration network restrictions I defined and uses all network interfaces… As a result my cluster was destroyed because it filled the management network. What would be a good configuration on a 10Gb quad port to keep SMB from eating everything? Would applying QoS to management help? I would like SMB to use only one team or 2 dedicated LM NIC interfaces, but SMB restrictions are a pain to configure…

Yes, SMB for LM works very well over both Multichannel & RDMA. I use it with great success. How easy can it get? Configuring the SMB Live Migration restriction takes one PowerShell command. Follow Microsoft’s guidelines and you’ll be fine. There are many good designs; some are discussed on my blog and some can be found with a quick internet search:
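For the question above, the sketch below prints one possible set of PowerShell commands: select SMB as the live migration transport, pin SMB traffic to a peer node onto dedicated interfaces, and cap live migration bandwidth. It is not necessarily the single command referred to above. Assumptions: Windows Server 2012 R2 (where `Set-SmbBandwidthLimit` and the SMB Bandwidth Limit feature, FS-SMBBW, exist), and hypothetical host and interface names. Note that an SMB Multichannel constraint applies to all SMB traffic towards that server name, not only live migration, and has to be created on each node for each peer.

```python
"""Print commands to pin and cap SMB live migration (Windows Server 2012 R2).

Host names, interface aliases and the cap are hypothetical; the SMB
Bandwidth Limit feature (FS-SMBBW) is assumed to be installed."""


def gbps_to_bytes_per_second(gbps):
    """Convert a decimal Gbps cap to bytes per second (8 bits per byte)."""
    return round(gbps * 1_000_000_000 / 8)


def live_migration_commands(peer_host, lm_interfaces, cap_gbps):
    aliases = ",".join(f'"{alias}"' for alias in lm_interfaces)
    return [
        # Use SMB as the live migration transport
        "Set-VMHost -VirtualMachineMigrationPerformanceOption SMB",
        # Only use the dedicated LM interfaces for SMB traffic to this peer
        f'New-SmbMultichannelConstraint -ServerName "{peer_host}" -InterfaceAlias {aliases}',
        # Cap the SMB bandwidth that live migration may consume
        "Set-SmbBandwidthLimit -Category LiveMigration "
        f"-BytesPerSecond {gbps_to_bytes_per_second(cap_gbps)}",
    ]


if __name__ == "__main__":
    for cmd in live_migration_commands("HV-NODE2", ["LM1", "LM2"], 6):
        print(cmd)
```

A 6 Gbps cap works out to 750,000,000 bytes per second, leaving headroom on a 10Gbps link for management and cluster traffic.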

http://blogs.msdn.com/b/virtual_pc_guy/archive/2013/12/31/faster-live-migration-which-option-should-you-choose.aspx

http://blogs.msdn.com/b/virtual_pc_guy/archive/2013/12/30/faster-live-migration-with-rdma-in-windows-server-2012-r2.aspx

Good luck