Introduction

In a previous post I mentioned the use of 64TB volumes in Windows Server 2012 in a supported scenario. That’s a lot of storage and data. There’s a cost side to all this, and having all that data on one volume also incurs some risk. Windows Server 2012 tries to address the cost issue with commodity storage in combination with the excellent resilience of Storage Spaces to reduce both cost and risk. Apart from introducing ReFS, Microsoft also did some work on NTFS to help with reliability. We already discussed the use of the flush command in Windows Server 2012 64TB NTFS Volumes and the Flush Command. Now we’ll look at the new approach for detecting and repairing corruptions in NTFS, which optimizes uptime through online repair and keeps offline repairs minimal and very short thanks to spot fixing.

On top of these improvements, studying this process taught me two very interesting things:

- The snapshot size limit is also a reason why NTFS volumes are not bigger than 64TB. See the explanation below!

- Cluster Shared Volumes and CSVFS enable continuous availability even when spot fix is run! See below for more details.

So read on and find out why I’m not worried about the 50TB & 37TB LUNs we use for Disk2Disk backups.

Hitting the practical boundaries of check disk (CHKDSK)

While NTFS has been able to handle volumes up to 256TB in size, this was never used in real life because most people don’t have (or need) that amount of storage and because the supported limit was 16TB. With Windows Server 2012 this has become 64TB. That’s just about future proof enough for the time being, I’d say. In real life the practical volume size has been smaller than this due to a number of reasons.

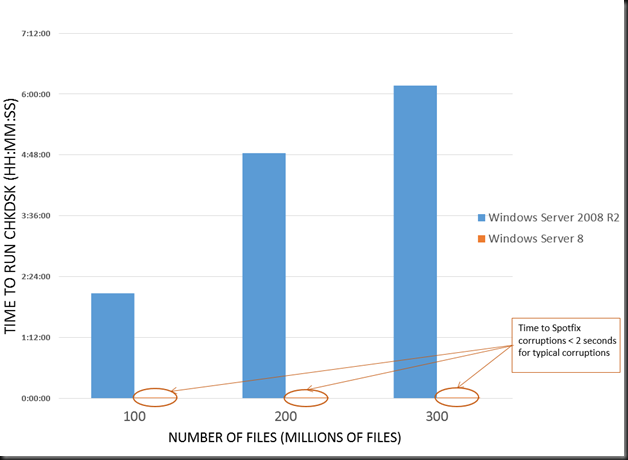

There is the 2TB limitation of MBR disks, which is solved with GPT, but that has its own requirements. Then there are the storage arrays, on which the biggest LUN you can create varies from 2TB to 16TB, 50TB or more depending on the type, brand and model. Another big concern was the potentially long CHKDSK execution time. Not that the volume size is the factor here; it’s the number of files on the volume that dictates how long CHKDSK will run. But volume size and number of files very often go hand in hand.

While Microsoft has been reducing the execution time of CHKDSK with every Windows release since Windows 2000, the additional improvements that could be made with the current approach have reached a practical limit. Faced with ever bigger volumes, a huge number of files and ever more “Always On” services requiring very high to continuous availability, things needed to change drastically.

A vastly improved check disk (CHKDSK) for a new era

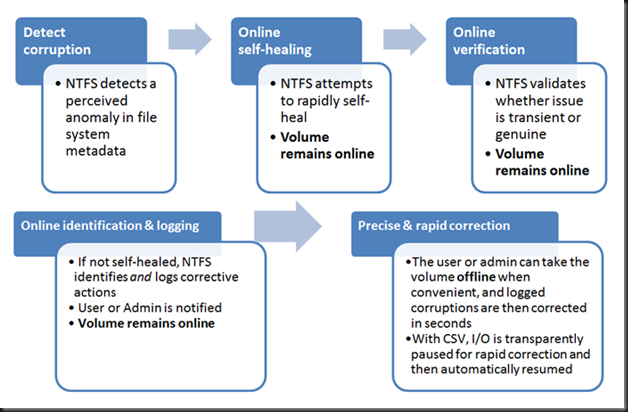

This change came through a new approach for detecting and repairing corruptions in NTFS that consists of:

- Enhanced detection and handling of corruptions in NTFS via online repair

- A changed CHKDSK execution model that separates the analysis and repair phases

- File system health monitored via Action Center and Server Manager

Enhanced NTFS Corruption Handling

NTFS now logs information on the nature of a detected corruption that cannot be repaired online. This is maintained in new metadata files:

- $Verify

- $Corrupt

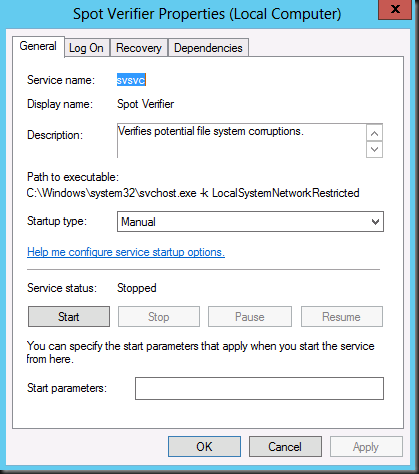

The new “Verification” component confirms the validity of a detected corruption to eliminate unnecessary CHKDSK runs due to a transient hiccup. There’s a service involved here called “Spot Verifier”.

The online repair capability that was introduced with the “Self-healing” feature in Vista, and was limited to Master File Table (MFT) related corruptions, has been greatly enhanced and extended. It can now handle a broader range of corruptions across multiple metadata files, which means nearly all of the most common corruptions can be fixed by an online repair.

The New CHKDSK Process & Phases

The phases are:

The analysis phase is performed online on a volume snapshot, so there is no down time for the services and users.

IMPORTANT NOTE: You read that right! The analysis phase is performed online on a volume snapshot. Now when you know that the maximum supported size of a Windows volume snapshot is 64TB, you also know that, apart from stress & performance testing of 256TB LUNs, there is another limitation in play: the size of the snapshot needed to make the new chkdsk process work! If you have volumes bigger than 64TB, this process can and will use a hardware snapshot if there is a hardware VSS provider that supports snapshots bigger than 64TB. So this new chkdsk process in Windows Server 2012 will also work for volumes bigger than 64TB. But within the Microsoft Windows Server 2012 stack, 64TB is the top limit or you lose this new chkdsk functionality. Interesting stuff!
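To make the snapshot reasoning in this note concrete, here is a minimal Python sketch of the decision as I understand it. It is only an illustration: the 64TB figure comes from the text above, while treating a TB as 2**40 bytes, the function name and the `has_large_hw_vss_provider` flag are my own assumptions and not anything Windows exposes.

```python
# Illustrative sketch only. The 64TB snapshot limit is taken from the text;
# counting a "TB" as 2**40 bytes and all names here are assumptions.
TIB = 2**40
INBOX_SNAPSHOT_LIMIT_BYTES = 64 * TIB


def online_scan_snapshot_source(volume_bytes: int,
                                has_large_hw_vss_provider: bool) -> str:
    """Return which kind of snapshot could back the online analysis phase."""
    if volume_bytes <= INBOX_SNAPSHOT_LIMIT_BYTES:
        # Within the limit, the built-in Windows volume snapshot is enough.
        return "in-box snapshot"
    if has_large_hw_vss_provider:
        # Above 64TB a hardware VSS provider has to supply the snapshot.
        return "hardware snapshot"
    # No usable snapshot means the online analysis phase is not available.
    return "none"


if __name__ == "__main__":
    cases = [(37, False), (50, False), (64, False), (100, True), (100, False)]
    for size_tib, has_hw in cases:
        result = online_scan_snapshot_source(size_tib * TIB, has_hw)
        print(f"{size_tib} TB, hardware provider={has_hw}: {result}")
```

The 37TB and 50TB cases are the backup LUNs mentioned in the introduction; both fit comfortably under the limit.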

If a corruption is detected, there will be a first attempt at online self-healing via the self-healing API. If self-healing cannot repair the error, online verification (“Spot Verification”) kicks in to verify that the error is not a glitch. Any verified corruption that cannot be fixed online is identified and logged to a new NTFS metadata file: $Corrupt. After this, the administrators are notified so that, at a time of their choosing, the volume can be taken offline to do the repairs.

The offline repair phase (spot fixing) only runs when all else has failed and it can’t be avoided. The volume can be taken offline, either manually or scheduled, at a time the administrator chooses. Spot fix only repairs logged corruptions to minimize volume unavailability.
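The order of events in these phases can be summarized as a small decision function. This is just a conceptual model in Python of the flow described above; the enum, the function and its two boolean inputs are hypothetical names I made up for illustration and say nothing about how NTFS implements this internally.

```python
from enum import Enum


class Outcome(Enum):
    HEALED_ONLINE = "repaired online by self-healing"
    TRANSIENT = "dismissed by spot verification as a transient glitch"
    LOGGED_FOR_SPOT_FIX = "logged to $Corrupt for a later offline spot fix"


def handle_detected_corruption(self_heal_succeeds: bool,
                               verified_as_real: bool) -> Outcome:
    """Model the order of steps taken once a corruption is detected."""
    # Step 1: online self-healing is always attempted first.
    if self_heal_succeeds:
        return Outcome.HEALED_ONLINE
    # Step 2: spot verification rules out transient hiccups.
    if not verified_as_real:
        return Outcome.TRANSIENT
    # Step 3: a confirmed corruption is logged to $Corrupt; the administrator
    # is notified and picks a moment for the short offline spot fix.
    return Outcome.LOGGED_FOR_SPOT_FIX


if __name__ == "__main__":
    for heal, real in [(True, True), (False, False), (False, True)]:
        print(heal, real, "->", handle_detected_corruption(heal, real).value)
```

The point of the model is that only the last branch ever costs you volume availability, and even then only for the logged corruptions.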

Cluster Shared Volumes bring us continuous availability in Windows Server 2012: the process leverages clustering and CSVFS functionality to make sure you don’t have to bring the volume down. IO is just temporarily stalled:

- Scan runs & adds detected corruptions to $Corrupt

- Every minute the cluster IsAlive check runs on a cluster which also ….

- Enumerates the $Corrupt system file via fsutil to identify corrupt files; if there are any, action is taken

- CSV namespace is paused to CSVFS & Underlying NTFS is dismounted

- Spotfix runs for a maximum of 15 seconds; if needed, this repeats every 3 minutes

- If the corruption repair would take too long, it is marked to run at an offline moment and the above is not done.
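To get a feel for what the 15 second / 3 minute rhythm in the list above means for stalled IO, here is a rough Python sketch. The two numbers come from the list; everything else is an assumption on my part: that the 3 minutes are measured from the start of one pass to the start of the next, that repair work can be split freely across passes, and of course the function name itself.

```python
def spot_fix_passes(total_repair_seconds: float,
                    max_pass_seconds: float = 15.0,
                    interval_seconds: float = 180.0):
    """Return a list of (start_offset_seconds, pass_duration_seconds) tuples.

    Assumes passes start every `interval_seconds` and that each pass stalls
    IO for at most `max_pass_seconds` (both defaults taken from the text).
    """
    if total_repair_seconds < 0:
        raise ValueError("total_repair_seconds must not be negative")
    passes = []
    remaining = total_repair_seconds
    start = 0.0
    while remaining > 0:
        # Each pass handles as much as fits in the 15 second window.
        duration = min(remaining, max_pass_seconds)
        passes.append((start, duration))
        remaining -= duration
        start += interval_seconds
    return passes


if __name__ == "__main__":
    # Hypothetical workload: 40 seconds of repair work in total.
    for start, duration in spot_fix_passes(40):
        print(f"pass at t+{start:.0f}s stalls IO for {duration:.0f}s")
```

Under these assumptions no single IO stall lasts longer than 15 seconds, which is why the workload on the CSV keeps running instead of failing.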

It normally takes no longer than a few seconds, often a lot less, to repair corruptions offline now, which is negligible on a modern physical server that runs through its memory configuration, BIOS/UEFI and controller initialization at boot. Even on laptops and virtual machines this is very short and doesn’t really add much to the boot time as you can see in the picture below; it’s often not even noticeable.

Using this new functionality

The user is notified via the Windows User Interface. The phases of repair are also displayed in the Action Center & Server Manager and the user can take appropriate action.

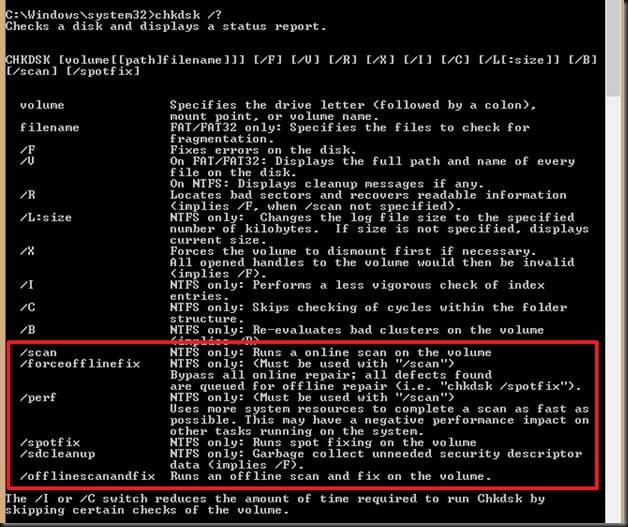

The chkdsk command line has had options added that leverage this model:

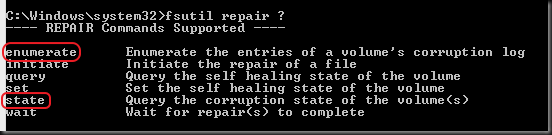

The fsutil repair command also has some new options added.

You can also control the action via PowerShell with the storage cmdlet Repair-Volume. The action can be run as a job, and the parameters -Scan, -SpotFix and -OfflineScanAndFix are pretty obvious by now. See http://technet.microsoft.com/en-us/library/hh848662.aspx
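To show how the chkdsk switches and the Repair-Volume parameters line up, here is a small Python sketch that only builds the command lines and does not run anything. The `/scan`, `/spotfix` and `/offlinescanandfix` switches and the `-Scan`, `-SpotFix`, `-OfflineScanAndFix`, `-DriveLetter` and `-AsJob` parameters are the real ones; the helper names and the drive letter `D` are just placeholders of mine.

```python
from typing import List

# Maps each phase to its (chkdsk switch, Repair-Volume parameter) pair.
MODES = {
    "scan": ("/scan", "-Scan"),
    "spotfix": ("/spotfix", "-SpotFix"),
    "offlinescanandfix": ("/offlinescanandfix", "-OfflineScanAndFix"),
}


def chkdsk_command(drive_letter: str, mode: str) -> List[str]:
    """Build the chkdsk argument list for one of the new modes."""
    chkdsk_switch, _ = MODES[mode]
    return ["chkdsk", f"{drive_letter}:", chkdsk_switch]


def repair_volume_command(drive_letter: str, mode: str,
                          as_job: bool = False) -> str:
    """Build the equivalent PowerShell Repair-Volume command line."""
    _, ps_parameter = MODES[mode]
    command = f"Repair-Volume -DriveLetter {drive_letter} {ps_parameter}"
    if as_job:
        # -AsJob runs the cmdlet as a background job.
        command += " -AsJob"
    return command


if __name__ == "__main__":
    for mode in MODES:
        print(" ".join(chkdsk_command("D", mode)))
        print(repair_volume_command("D", mode))
```

A typical sequence would be an online scan first and a spot fix only when the scan has logged something, but check the output against your own systems before scripting it.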

So the maximum logical or virtual volume size for a deduplication volume is 64TB? Or is this the limit for the underlying physical size of the chunk storage and reparse points?

The limit is 64TB as that is the maximum size VSS can handle. See https://blog.workinghardinit.work/2014/07/01/windows-2012-r2-data-deduplication-leverages-shadow-copies-lastoptimizationresultmessage-a-volume-shadow-copy-could-not-be-created-or-was-unexpectedly-deleted/ I have verified this in testing. Hope this helps. Happy new year!
