You will all have heard about rolling cluster upgrades from Windows Server 2012 R2 to Windows Server 2016 by now. The best and recommended practice is to do a clean install of any node you want to move to Windows Server 2016. However, an in-place upgrade does work. In fact, it works better than ever before. I’m not recommending this for production, but I did a bunch of them just to see what the experience was like and whether it was consistent. I was pleasantly surprised, and it saved me some time in the lab.

Today, if you want to, you can do an in-place upgrade of the Windows Server 2012 R2 hosts in your cluster to Windows Server 2016.

The main things to watch out for are the following. All the VMs on the host being upgraded have to be migrated to another node or shut down.

You cannot have teamed NICs on the host during the upgrade. Most often these teams are used for a vSwitch, so it’s smart and prudent to note down the name of the vSwitch (or vSwitches) and remove it before removing the NIC team. After you’ve upgraded the node, you can recreate the NIC team and the vSwitch(es).
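To make the "note it down" step a bit more concrete, here is a minimal Python sketch that turns a hand-recorded description of the teams and vSwitches into PowerShell commands you could review and run on the node after the upgrade. It assumes you captured each team’s name, member NICs, teaming mode and load-balancing algorithm (for example from `Get-NetLbfoTeam`), and each vSwitch’s name and management OS setting (for example from `Get-VMSwitch`), before removing them. The `TeamRecord`/`VSwitchRecord` classes and the `rebuild_commands` helper are hypothetical, the default teaming mode and algorithm in the records are placeholders you should replace with what you actually recorded, and the script assumes the team interface keeps its default name, which is the team name.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TeamRecord:
    """Hypothetical record of a NIC team, noted down before the upgrade."""
    name: str
    members: List[str]
    # Placeholder defaults: replace with the values you actually recorded.
    teaming_mode: str = "SwitchIndependent"   # Static, SwitchIndependent or Lacp
    load_balancing: str = "Dynamic"           # e.g. Dynamic, HyperVPort, TransportPorts


@dataclass
class VSwitchRecord:
    """Hypothetical record of an external vSwitch that was bound to a NIC team."""
    name: str
    team: str                        # team interface (assumed to equal the team name)
    allow_management_os: bool = True


def ps_quote(value: str) -> str:
    """Quote a string for PowerShell: wrap in single quotes, double embedded ones."""
    return "'" + value.replace("'", "''") + "'"


def rebuild_commands(teams: List[TeamRecord], switches: List[VSwitchRecord]) -> List[str]:
    """Return PowerShell commands that recreate the teams first, then the vSwitches."""
    commands = []
    for team in teams:
        members = ",".join(ps_quote(m) for m in team.members)
        commands.append(
            f"New-NetLbfoTeam -Name {ps_quote(team.name)} -TeamMembers {members} "
            f"-TeamingMode {team.teaming_mode} "
            f"-LoadBalancingAlgorithm {team.load_balancing} -Confirm:$false"
        )
    for vswitch in switches:
        allow = "$true" if vswitch.allow_management_os else "$false"
        commands.append(
            f"New-VMSwitch -Name {ps_quote(vswitch.name)} "
            f"-NetAdapterName {ps_quote(vswitch.team)} -AllowManagementOS {allow}"
        )
    return commands


if __name__ == "__main__":
    # Example values only; use the names from your own hosts.
    teams = [TeamRecord("Team1", ["NIC1", "NIC2"])]
    switches = [VSwitchRecord("vSwitch1", "Team1")]
    for line in rebuild_commands(teams, switches):
        print(line)
```

The teams are emitted before the vSwitches because a vSwitch can only be bound to the team interface once the team exists. Generating text rather than running anything keeps the sketch harmless: you can eyeball the commands before pasting them into an elevated PowerShell session.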

Note that you don’t even have to evict the node from the cluster anymore to perform the upgrade.

I have successfully upgraded 4 clusters this way. One was based on PC hardware, but the other ones were:

- Dell R610 2-node cluster with shared SAS storage (MD3200)

- Dell R720 2-node cluster with Compellent SAN (and ancient 4Gbps Emulex and QLogic FC HBAs)

- Dell R730 3-node cluster with Compellent SAN (8Gbps Emulex HBAs)

Naturally, all these servers were rocking the most current firmware and drivers possible. After the upgrades I updated the NIC drivers (Mellanox, Intel) and the FC drivers (Emulex) to the vendors’ supported versions. I also made sure they got all the available updates before moving on with these lab clusters.

Issues I noticed:

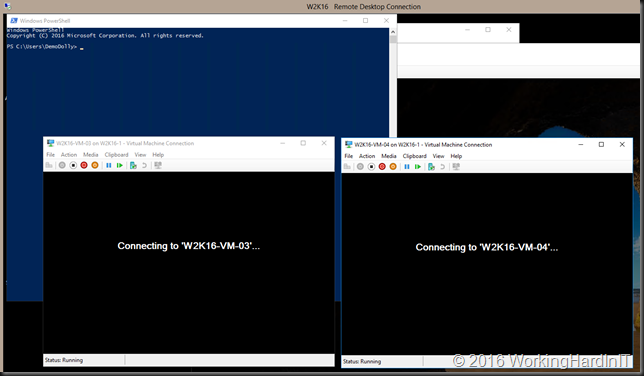

- The most common issue I saw was that the Hyper-V Manager GUI was not functional and could not connect to the host. The fix was easy: uninstall the Hyper-V role and reinstall it. This requires a few reboots. Other than that, it went incredibly well.

- Another issue I’ve seen after the upgrade was that the Netlogon service was set to Manual, which caused various authentication issues but is easily fixed by setting its startup type back to Automatic. This has also been reported here. Microsoft is aware of this bug and a fix is being worked on.


Did the same thing, ended up rebuilding the cluster with clean installs. One thing we noticed while updating the fNIC drivers is that if we didn’t evict the node first, it would create a c:\clusterstorage.000 folder. My advice: evacuate the node, evict it, update, then bring it back in.

Haven’t seen that yet. Any info on what NICs they are and what firmware level they run?


Just did an in-place upgrade of a stand-alone 2008 R2 Standard host to 2012 R2 and finally to 2016. I also had to reinstall the Hyper-V role because the MMC wouldn’t connect. Other than that, it worked amazingly well.
