Introduction

With Windows Server 2012 R2, using both RDMA and the Hyper-V vSwitch on the same host required separate physical network adapters (pNICs). There are 2 reasons for this.

- First, a vSwitch is generally created on top of a native Windows NIC team, and such a NIC team does not expose RDMA capabilities.

- Second, in Windows Server 2012 R2 you cannot expose RDMA capabilities via a vSwitch, even when you are using a non-teamed, RDMA-capable NIC.

As a result, RDMA required more NICs on the Hyper-V hosts, and a fully converged setup had some serious drawbacks. As servers have been quite capable and our VMs serve ever more intensive workloads, this was not dramatic. Leveraging 2*10Gbps for a vSwitch and 2*10Gbps for redundant RDMA / SMB Direct traffic has long been one of my favorite designs. It leaves room for other traffic, such as backups, and it allows for high VM density. But with 40Gbps NICs that is overkill and a tad expensive in many scenarios, even when connecting to a SOFS share for Hyper-V storage, so 4*40Gbps on a Hyper-V host is not something I ever saw in real life.

Windows Server 2016 can expose RDMA capabilities via a vSwitch even without SET

What many people seem to have missed is that reason 2 is gone in Windows Server 2016 Hyper-V. Reason 1 still holds true, but that has been solved by Switch Embedded Teaming (SET). This means that you do not actually need SET to leverage RDMA with a vSwitch in Windows Server 2016 Hyper-V. You can do this as follows:

    # Create a vSwitch
    New-VMSwitch -Name RDMACapable-vSwitch -NetAdapterName "NODE-A-S4P1-SW12P05-SMB1"

    # Now add a host vNIC for the SMB Direct traffic
    Add-VMNetworkAdapter -SwitchName RDMACapable-vSwitch -Name SMB1 -ManagementOS

    # Enable RDMA on it
    Enable-NetAdapterRDMA "vEthernet (SMB1)"

    # Grab that vNIC on the management OS and set the VLAN - PFC requires tagged VLANs
    $NicSMB1 = Get-VMNetworkAdapter -Name SMB1 -ManagementOS
    Set-VMNetworkAdapterVLAN -VMNetworkAdapter $NicSMB1 -Access -VlanID 110

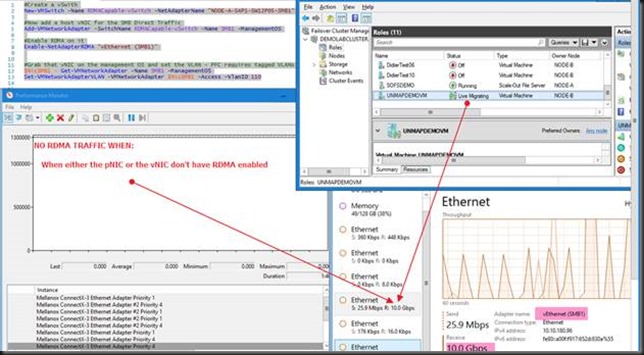

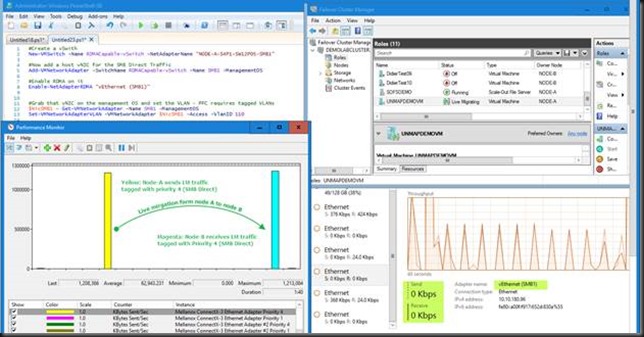

Below is what this looks like. We have one vNIC on the management OS leveraging RDMA/SMB Direct and consuming all 10Gbps of the NIC we connected to the vSwitch. This is a nice lab demo, but you can see this is perhaps not the best idea in real life.

Other things to note

Do realize this still requires the pNIC to be RDMA capable. This is not some sort of soft RoCE or other software RDMA magic as of today. The pNIC also has to have RDMA enabled, or the virtual NIC won’t be able to leverage RDMA and will fall back to plain SMB (Multichannel only) instead of SMB Direct. Likewise, RDMA has to be enabled on the vNIC as well. So don’t forget, RDMA must be enabled on both the pNIC and the vNIC for this to work.
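A quick way to check both ends is to query the adapters and then look at what SMB actually negotiated. This is a minimal sketch that reuses the adapter names from the example earlier in this post; adjust them to your own hosts.

```powershell
# Names taken from the example earlier in this post; replace with your own.
$pNic = "NODE-A-S4P1-SW12P05-SMB1"
$vNic = "vEthernet (SMB1)"

# Both the physical NIC and the host vNIC should report Enabled = True.
Get-NetAdapterRdma -Name $pNic, $vNic | Format-Table Name, Enabled

# SMB's view of the local interfaces: the vNIC should show up as RDMA capable.
Get-SmbClientNetworkInterface | Format-Table FriendlyName, RdmaCapable

# After some traffic to an SMB share, see whether the active connections
# are RDMA capable on both the client and the server side.
Get-SmbMultichannelConnection | Format-Table ServerName, ClientRdmaCapable, ServerRdmaCapable
```

If the vNIC does not show up as RDMA capable, SMB will still work over Multichannel, just without SMB Direct.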

DCB’s PFC/ETS requires a tagged VLAN to carry the priority, so don’t forget to tag the vNIC. There is actually no need to tag the pNIC as long as the switch port has the tagged VLAN set, most likely as a trunk or in general mode. If you don’t tag consistently across the entire network stack, you’ll have network issues anyway, and RDMA performance will be bad if it works at all.
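To make this a bit more concrete, here is a hedged sketch of checking the VLAN tag on the host vNIC and of a typical host-side DCB configuration for RoCE. The priority (3) and the bandwidth share (50%) are assumptions for illustration and are not taken from the setup in this post; whatever you choose has to match the configuration on the switch ports.

```powershell
# Confirm the host vNIC carries the tagged VLAN (110 in the example above).
Get-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName SMB1

# Everything below uses assumed values: priority 3 for SMB Direct, 50% ETS share.
Install-WindowsFeature -Name Data-Center-Bridging

# Tag SMB Direct traffic (NetworkDirect on port 445) with 802.1p priority 3.
New-NetQosPolicy -Name "SMB" -NetDirectPortMatchCondition 445 -PriorityValue8021Action 3

# PFC: enable flow control only for the SMB Direct priority.
Enable-NetQosFlowControl -Priority 3
Disable-NetQosFlowControl -Priority 0,1,2,4,5,6,7

# ETS: give that priority a minimum bandwidth share.
New-NetQosTrafficClass -Name "SMB" -Priority 3 -BandwidthPercentage 50 -Algorithm ETS

# Apply the DCB settings on the physical NIC behind the vSwitch.
Enable-NetAdapterQos -Name "NODE-A-S4P1-SW12P05-SMB1"
```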

Finally, don’t forget that this example is not using VMM / Network Controller and as such uses Set-VMNetworkAdapterVLAN and not Set-VMNetworkAdapterIsolation.

In real life, we need better and more than a single NIC vSwitch

The caveat here is that, while you have a converged setup, you have no redundancy for the vSwitch (there is no team). This also means that you’re limited to a single NIC with regard to throughput for that vSwitch. Depending on the needs of the solution, that might be perfectly fine. If it’s not (and in most real-world scenarios you’ll need redundancy), you have to use SET in a converged scenario. That’s what we’ll take a look at in part 2. Then there is the question of QoS, as you don’t want SMB Direct traffic to consume too much bandwidth at will. That’s still another issue to discuss and address.
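As a preview only, a SET-based variant of the earlier example could look roughly like the sketch below. The second pNIC name, the switch name, and the second vNIC are hypothetical and only there for illustration.

```powershell
# First name is the pNIC used earlier in this post; the second one is hypothetical.
$pNics = "NODE-A-S4P1-SW12P05-SMB1", "NODE-A-S4P2-SW13P05-SMB2"

# Create the vSwitch with Switch Embedded Teaming across both RDMA-capable pNICs.
New-VMSwitch -Name RDMACapable-SET-vSwitch -NetAdapterName $pNics -EnableEmbeddedTeaming $true -AllowManagementOS $false

# Add two host vNICs for SMB Direct traffic and enable RDMA on both.
Add-VMNetworkAdapter -SwitchName RDMACapable-SET-vSwitch -Name SMB1 -ManagementOS
Add-VMNetworkAdapter -SwitchName RDMACapable-SET-vSwitch -Name SMB2 -ManagementOS
Enable-NetAdapterRdma -Name "vEthernet (SMB1)", "vEthernet (SMB2)"
```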

So what would be the best idea to set up if you want 2x SMB RDMA vmNICs in a VM? Tie both vmNICs to an RDMA-capable vSwitch which is tied to a SET of 2 RDMA pNICs, or create two single vSwitches, one per pNIC, for each vmNIC?

Aha, that’s part 2. In a design with 2 NICs, the former. The latter would be a mess and add overhead for managing redundant VM traffic with converged networking.

With a setup that has 4 pNICs, 2 of which are dedicated to east-west traffic, is there any point to RDMA being enabled on those? Presumably it would be effective on the north-south pNICs, which are SET teamed and used for VM traffic and management traffic?