Simplified SMB Multichannel and Multi-NIC Cluster Networks

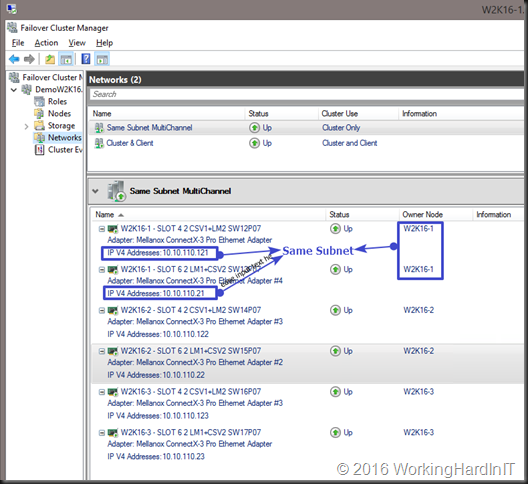

One of the seemingly small feature enhancements in Windows Server 2016 Failover Clustering is simplified SMB multichannel and multi-NIC cluster networks. In Windows Server 2016, failover clustering now recognizes and uses multiple NICs on the same subnet for cluster networking (cluster and client access).

Why was this introduced?

The growth in the capabilities of the hardware (compute, memory, storage and networking) meant that failover clustering had to leverage those capabilities more easily and for more use cases than before. As for SMB, it is no longer used “only” for CSV and live migration but also for Storage Spaces Direct and Storage Replica.

- It gives us better utilization of the network capabilities and throughput with Storage Spaces Direct, CSV, SQL, Storage Replica etc.

- Failover clustering now works with multichannel like any other workload, without the extra requirement of multiple subnets. This is more important than it seemed to me at first. In many environments, getting another VLAN and/or an extra subnet is a hurdle. Well, that hurdle is gone.

- For IPv6 link-local subnets it just works. These are auto-configured as cluster-only networks.

- The cluster validation wizard won’t nag about it anymore and knows it’s a valid failover cluster configuration.

See it in action!

You can find a quick demo of simplified SMB multichannel and multi-NIC cluster networks on my Vimeo channel here

In this video I demo two features. One is new: virtual machine compute resiliency. The other is an improved feature: simplified SMB multichannel and multi-NIC cluster networks. The multichannel demo is the first part of the video. Yes, it’s with RDMA RoCEv2. You know I just have to do SMB Direct when I can!

You can read more about simplified SMB multichannel and multi-NIC cluster networks on TechNet here. Happy reading!

What about L3 Fault Tolerance?

SMB3 works over layer 3. As long as the app works over layer 3, you’re good.

For S2D on Server 2016.

Example: all my nodes have four 25GbE NICs with RDMA and two 10GbE NICs without RDMA.

The two 10GbE NICs are for host management and guest traffic.

If I simply assign each 25GbE NIC an IP in the same subnet, will the cluster automatically set up SMB Multichannel that will load balance and provide fault tolerance for S2D storage, CSV, and live migration across all four 25GbE (technically 100GbE) NICs, or do I still need to configure SET, VLANs, etc.?

Yup, pretty much. It’s pretty flexible. When you have NICs you can dedicate to East-West traffic, you don’t need SET for them.

In my tests on a Server 2019 S2D hyperconverged cluster, I had trouble with cluster validation failing. Nodes could not reach each other on UDP port 3343. The firewall was disabled.

I used one flat VLAN and IPv6 link-local addresses. SMB Multichannel worked fine, but the Cluster Validation Wizard threw said UDP error.

Example

3 RDMA vNics per Node

Example Node #1,

IPv6 link local addressing

each connected to the same Vlan

SMB1 IPv6 link local address, vlan 221

SMB2 IPv6 link local address, vlan 221

SMB3 IPv6 link local address, vlan 221

-> Fail. UDP Port 3343 not reachable

I tried multiple VLANs, each with IPv6 link-local addressing. Validation failed with “networks are segmented” errors.

Example

3 RDMA vNics per Node

Example Node #1,

IPv6 link local addressing

each connected to one separate Vlan

SMB1 IPv6 link local address, vlan 221

SMB2 IPv6 link local address, vlan 222

SMB3 IPv6 link local address, vlan 223

-> Fail. Same address range is segmented, Validation Wizard throws error
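One possible reading of the “segmented” result above is that every IPv6 link-local address carries the same `fe80::/64` prefix, so NICs on different VLANs still look like they belong to one address range. The Python sketch below only illustrates that arithmetic. The three addresses are made up for illustration, and it does not reproduce what the Cluster Validation Wizard actually checks.

```python
import ipaddress

# Hypothetical link-local addresses for the three vNICs on node 1.
# The VLAN IDs come from the example; the addresses are invented.
nics = {
    "SMB1 (vlan 221)": "fe80::1a2b:3cff:fe4d:5e01/64",
    "SMB2 (vlan 222)": "fe80::1a2b:3cff:fe4d:5e02/64",
    "SMB3 (vlan 223)": "fe80::1a2b:3cff:fe4d:5e03/64",
}

# Map each NIC to the IP network its address belongs to.
networks = {name: ipaddress.ip_interface(addr).network for name, addr in nics.items()}

for name, net in networks.items():
    print(f"{name} -> {net}")

# All three NICs land in the same prefix even though the VLANs differ.
distinct = set(networks.values())
print(f"distinct prefixes: {len(distinct)}")
```

Three VLANs but a single prefix is consistent with the "same address range is segmented" message, although the sketch cannot confirm that this is the wizard's reasoning.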

I then tried manual addressing, which gives me control over subnets.

Example

3 RDMA vNics per Node

Example Node #1,

every NIC in the same subnet

each connected to the same Vlan

SMB1 10.221.1.54 / 16, vlan 221

SMB2 10.221.2.54 / 16, vlan 221

SMB3 10.221.3.54 / 16, vlan 221

-> Fail. UDP Port 3343 not reachable

Example Node #1,

one logical subnet per NIC

each connected to the same Vlan

SMB1 10.221.1.54 / 24, vlan 221

SMB2 10.221.2.54 / 24, vlan 221

SMB3 10.221.3.54 / 24, vlan 221

-> Success

Conclusion: You can use a single VLAN for multiple SMB Multichannel NICs on your failover cluster node, but you will still need to manually define IP subnets for the Cluster Validation Wizard to report success.
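To make the difference between the two manual layouts concrete, here is a small Python sketch that computes which subnets the example addresses fall into. The helper name `distinct_subnets` is made up for this illustration. It only shows how the addresses map to subnets, not why validation passed or failed.

```python
import ipaddress

def distinct_subnets(cidrs):
    """Return the set of IP networks that the given address/prefix strings belong to."""
    return {ipaddress.ip_interface(c).network for c in cidrs}

# The two manual layouts from the examples, all on VLAN 221.
flat_16 = ["10.221.1.54/16", "10.221.2.54/16", "10.221.3.54/16"]
per_nic_24 = ["10.221.1.54/24", "10.221.2.54/24", "10.221.3.54/24"]

# With a /16 mask all three NICs share one subnet.
print(sorted(str(n) for n in distinct_subnets(flat_16)))
# ['10.221.0.0/16']

# With a /24 mask each NIC gets its own logical subnet.
print(sorted(str(n) for n in distinct_subnets(per_nic_24)))
# ['10.221.1.0/24', '10.221.2.0/24', '10.221.3.0/24']
```

The same host addresses produce one subnet or three depending only on the prefix length, which is the knob that manual addressing gives you.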

Alternatively, you could use one (1) link local subnet per Cluster. But this would defeat SMB multichannel operation of course.

It will work, validation just complains. But there are some network designs where using multiple subnets avoids suboptimal traffic flows, so I prefer 2 subnets.

I seem to have encountered some weirdness in Switch Embedded Teaming. I tried again and this scenario works now, including Cluster Validation Wizard:

Example

3 RDMA vNics per Node

Example Node #1,

every NIC in the same subnet

each connected to the same Vlan

SMB1 10.221.1.54 / 16, vlan 221

SMB2 10.221.2.54 / 16, vlan 221

SMB3 10.221.3.54 / 16, vlan 221

-> Success. Cluster fully validated

I previously configured static (manually generated) MAC addresses for all vNics. This time, I used dynamic MACs. It seems to work. Let’s see for how long. 🙂

I’ve run into a problem. SMB Multichannel doesn’t work on IPv6 link-local addresses. Connections appear in Get-SmbMultichannelConnection, but traffic flows between only one pair of NICs.

But it works correctly on IPv4 or IPv6 site-local addresses. Traffic flows between all pairs of NICs.

Does anybody know why?

You might have the same issue with IPv4. Have you tried again after rebooting all nodes involved? Multichannel sometimes breaks after changing settings and is fixed by a reboot (disabling/enabling the NICs is not sufficient).

Hmm, I’ve found that the problem appears only on Windows Server 2019.

In the Windows Server 2016 environment, SMB Multichannel works perfectly on IPv6 link-local.

It looks like a bug.

Microsoft confirms the bug. It will be fixed in KB995352.