Introduction

My good buddy and fellow MVP Aidan Finn has promoted disabling advanced features by default in order to avoid downtime for a long time and with good reason. I agree with the fact that too many implementations of features such as UNMAP, ODX, VMQ are causing us issues. This has to improve and meanwhile something has to be done to avoid the blast & fallout of such issues. In this trend Windows Server 2016 is taking steps towards disabling capabilities by default.

Windows 2016 & RDMA/RoCE

In Windows 2012 (R2), RDMA was enabled by default on ConnectX-3 adapters. This is great when you’ve provisioned a lossless fabric for them to use and configure the hosts correctly. As you know by now RoCE requires DCB Priority flow control and optionally ETS to deliver stellar performance.

If SMB 3 detects that RDMA cannot work properly it will fall back top TCP (that’s what that little TCP “standby” connection is for in those SMB Multichannel/Direct drawings. Not working correctly can mean that and RoCE/RDMA connection cannot be establish or fails under load.

To make sure that people get the behavior they desire and not run into issues the idea is to move to have RDMA disabled by default in Windows Server 2016 when DCB/PFC is disabled. At least for the inbox drivers. This is mentioned in RDMARoCE Considerations for Windows 2016 on ConnectX-3 Adapters. When you want it you’ll have to enable it on purpose meaning that they assume you’ve also set up a lossless Ethernet fabric and configured your hosts.

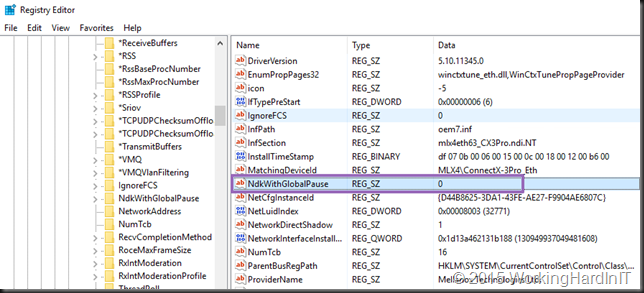

If you don’t want this there is a way to return to the old behavior and that’s a registry key called “NDKWithGlobalPause”. When this key is set to “1” you are basically forcing the NIC to work with Global Pause. Nice for a lab, but not for real world production with RDMA. We want it to be 0, which is the default.

My Lab Experience

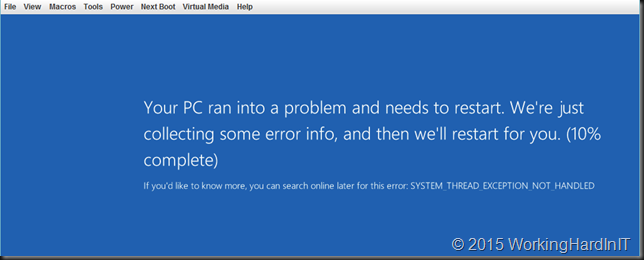

Now setting this parameter to 1 for testing on the Mellanox drivers (NOT inbox) in a running lab server it caused a very nice blue screen. Now granted, I’m playing with the 5.10 drivers which are normally not meant for Windows 2016 TPv4. I’m still trying to understand the use & effects of this setting but for now I’m not getting far.

This does not happen on Windows 2012 R2 by the way. I should be using the http://www.mellanox.com/page/products_dyn?product_family=129 for Windows 2016 TPv4 testing, even if that one still says TPv3. This beta driver enables:

- NDKPI 2.0

- RoCE over SR-IOV

- Virtualization and RoCE/SMB-Direct on the same port

- VXLAN Hardware offload

- PacketDirect

Conclusion

In an ideal world all these advanced features would be enabled out of the box to be used when available and beneficial. Unfortunately, this idea has not worked out well in the real world. Bugs in operating systems, features, NIC firmware and drivers, in storage array firmware and software as well as in switch firmware have made for too many issues for too many people.

There is a push to disable them all by default. The reason for this clear. Avoid down time, data corruption etc. While I can understand this and agree with the practice to avoid issues and downtime it’s also a bit sad. I do hope that work is being done to make sure that these performance and scalability features become truly reliable and that we don’t end up disabling them all, never to turn them on again. That would mean we’re back to banking on pure raw power or growth for performance & efficiency gains. What does that mean for the big convergence push? If we can’t get these capabilities to be reliable enough for use “as is” now, how much riskier will it become when they are all stacked on top of each other in a converged setup? I’m not to stoked about having ODX, UNMAP, VMQ, RMDA etc. turned off as a “solution” however. I want them to work well and not lose them as a “fix”, that’s unacceptable. When I do turn them on and configure the environment correctly I want them to work well. This industry has some serious work to do in getting there. All this talk of software defined anything will not go far outside of cloud providers if we remain at the mercy of firmware and drivers. In that respect I have seen many software defined solutions get a reality check as early implementations are often a step back in functionality, reliability and capability. It’s very much still a journey. The vision is great, the promise is tempting, but in a production environment I tend to be conservative until I have proven myself it works for us.

Nice conclusion Didier! It will be a costly purchase for any company to get the most out of their network and still compete with other clouds. Whether you are using more expensive proven/mature technology or you’re beta testing cheaper technology in your production environment 🙂

Especially when features are turned off by default which causes a shrinking user base as a result. Without decent testing and feedback in all kind of high performance scenarios you will be left with a product that will never reaches it’s full (stable) potential.

In the end it’s all about SLA’s and money customers want to pay for your IT product. There is still a huge gap between public and private cloud costs and hybrid is there to stay for a long time so we can still make a huge difference. VMware is far ahead of Microsoft incoperating these network features in a more stable virtualization environment. If Microsoft really want to make a succes of Azure and the hybrid path to it then we have to make a louder sound and bigger statement urging Microsoft to streamline Windows and Hyper-V in cooperation with hardware vendors to the point of the compatibility and maturity standards already set by competitors.