Fixing slow RoCE RDMA performance with WinOF-2 to WinOF

In this post, you join me on my quest to fix slow RoCE RDMA performance when traffic is initiated on a WinOF-2 system to or from a WinOF system. I was working on the migration to new hardware for Hyper-V clusters. This is one of the locations where I am transitioning from 10/40Gbps to 25/50/100Gbps. The older nodes had ConnectX-3 Pro cards for SMB 3 traffic. The new ones had ConnectX-4 Lx cards. The existing network fabric has been configured with DCB for RoCE and has been working well for quite a while. We tested everything before the actual migrations. That’s when we came across an issue.

All the RDMA traffic works very well except when it is initiated from the new servers against the old ones. ConnectX-3 Pro to ConnectX-3 Pro works fine. So does ConnectX-4 to ConnectX-4. Traffic initiated (send/retrieve) from a ConnectX-3 Pro server to a ConnectX-4 host also works fine.

However, when I initiated traffic (send/retrieve) from a ConnectX-4 server to a ConnectX-3 host, the performance was abysmally slow. 2.5-3.5 MB/s is really bad. This needed investigation. It smelled a bit like an MTU size issue, as if one side was configured wrong.

The suspects

As things were bad only when traffic was initiated from a ConnectX-4 host, I suspected a misconfiguration. But the configuration all checked out. We could reproduce the issue from every ConnectX-4 host to any ConnectX-3 host.

The network fabric is configured to allow jumbo frames end to end. All the Mellanox NICs have an MTU size of 9014 and the RoCE frame size is set to Automatic. This has worked fine for many years and is a validated setup.

If you read up on how MTU sizes are handled by Mellanox you would expect this to work out well.

InfiniBand protocol Maximum Transmission Unit (MTU) defines several fix size MTU: 256, 512, 1024, 2048 or 4096 bytes.

The driver selects “active” MTU that is the largest value from the list above that is smaller than Eth MTU in the system (and takes in the account RoCE transport headers and CRC fields). So for example with default Ethernet MTU (1500 bytes) RoCE will use 1024 and with 4200 it will use 4096 as an “active MTU”. The “active_mtu” values can be checked with “ibv_devinfo”.

RoCE protocol exchanges “active_mtu” values and negotiates it between both ends. The minimum MTU will be used.
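The selection and negotiation rules quoted above can be sketched in a few lines of Python. This is only an illustration of the documented behavior, not the driver's actual logic. The `ROCE_OVERHEAD` value of 72 bytes is an assumed allowance for the RoCE transport headers and CRC fields, and the function names are made up for this example.

```python
# Fixed MTU sizes defined by the InfiniBand protocol, in bytes.
IB_MTUS = (256, 512, 1024, 2048, 4096)

# ASSUMPTION: rough allowance for RoCE transport headers and CRC fields.
# The exact figure depends on the RoCE version and is not given in the post.
ROCE_OVERHEAD = 72


def active_mtu(eth_mtu: int, overhead: int = ROCE_OVERHEAD) -> int:
    """Largest InfiniBand MTU that fits in the Ethernet MTU minus overhead."""
    candidates = [m for m in IB_MTUS if m <= eth_mtu - overhead]
    if not candidates:
        raise ValueError(f"Ethernet MTU {eth_mtu} is too small for RoCE")
    return max(candidates)


def negotiated_mtu(local_eth_mtu: int, remote_eth_mtu: int) -> int:
    """Both ends exchange their active MTU and the minimum is used."""
    return min(active_mtu(local_eth_mtu), active_mtu(remote_eth_mtu))


print(active_mtu(1500))            # default Ethernet MTU -> 1024
print(active_mtu(4200))            # -> 4096
print(negotiated_mtu(1500, 4200))  # the smaller side wins -> 1024
```

The first two results match the examples in the quoted text (1500 gives 1024, 4200 gives 4096). The third shows why you would expect two hosts with different settings to still settle on a common value.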

So what is going on here? I started researching a bit more and I found this in the release notes of the WinOF-2 2.20 driver.

Description: In RoCE, the maximum MTU of WinOF-2 (4k) is greater than the maximum MTU of WinOF (2k). As a result, when working with MTU greater than 2k, WinOF and WinOF-2 cannot operate together.

Well, the ConnectX-3 Pro uses WinOF and the ConnectX-4 uses WinOF-2, so this is what they call a hint. But still, if you look at the mechanism for negotiating the RoCE frame size, this should negotiate fine in our setup, right?

The Fix

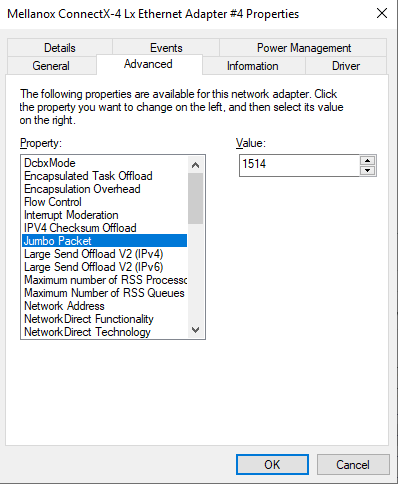

Well, we tried fixing the RoCE frame size to 2048 on both ConnectX-3 Pro and ConnectX-4 NICs. We left the NIC MTU at 9014. That did not help at all. What did help in the end was setting the NIC MTU sizes to 1514 (default) and setting the Max RoCE frame size to automatic again. With this setting, all scenarios in all directions work as expected.

Personally, I think the negotiation of the RoCE frame size, when set to automatic, should figure this out correctly with the NIC MTU size set to 9014 as well. But I am happy I found a workaround that lets us leverage RDMA during the migration. After that is done, we can set the NIC MTU sizes back to 9014, as there is no more need for ConnectX-4 / ConnectX-3 RDMA traffic.
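One possible reading of why the 1514 setting helps can be sketched in Python. This models only the documented rules plus the driver maximums from the release note. It does not explain what the drivers actually do, since the observed behavior contradicts the documented negotiation. The 72-byte `ROCE_OVERHEAD`, the assumption that the Windows NIC MTU values 1514 and 9014 include a 14-byte Ethernet header, and the function name `auto_roce_mtu` are all mine.

```python
# Fixed MTU sizes defined by the InfiniBand protocol, in bytes.
IB_MTUS = (256, 512, 1024, 2048, 4096)

# ASSUMPTION: allowance for RoCE transport headers and CRC fields.
ROCE_OVERHEAD = 72
# ASSUMPTION: Windows NIC MTU values (1514, 9014) include the Ethernet header.
ETH_HEADER = 14

# Maximum RoCE MTU per driver, as stated in the WinOF-2 release note.
DRIVER_MAX = {"WinOF": 2048, "WinOF-2": 4096}


def auto_roce_mtu(nic_mtu: int, driver: str) -> int:
    """RoCE frame size a driver would pick on 'Automatic' under these rules."""
    payload = nic_mtu - ETH_HEADER - ROCE_OVERHEAD
    fits = [m for m in IB_MTUS if m <= payload and m <= DRIVER_MAX[driver]]
    return max(fits)


for nic_mtu in (9014, 1514):
    old = auto_roce_mtu(nic_mtu, "WinOF")     # ConnectX-3 Pro side
    new = auto_roce_mtu(nic_mtu, "WinOF-2")   # ConnectX-4 side
    print(f"NIC MTU {nic_mtu}: WinOF={old}, WinOF-2={new}, expected={min(old, new)}")
```

Under these assumptions, a NIC MTU of 9014 leaves the two drivers starting from different values (2048 and 4096), so everything depends on the negotiation. With 1514 both sides independently arrive at 1024, which is below the WinOF maximum, so the difference between the drivers never comes into play.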

Conclusion

The above behavior was unexpected based on the documentation. I argue the RoCE frame size negotiation should work things out correctly in each direction. Maybe a fix will appear in a new WinOF-2 driver. The good news is that after checking many permutations I came up with a fix. Luckily, we can leave jumbo frames configured across the network fabric. Reverting the NIC interface MTU size to 1514 and leaving the RoCE frame size on auto, on both sides, works fine. So that is all that’s needed for fixing slow RoCE RDMA performance with WinOF-2 to WinOF. This will do just fine during the co-existence period, and we will keep it in mind whenever we encounter this behavior again.

The “read up on MTU” link is dead. Great post though.

The link has been fixed. Thanks for letting me know it was dead.

Did you experience a performance decrease or increase when bumping the MTU to 9014? I’ve tested all MTUs extensively using ConnectX-4 cards but have been completely unable to measure any increase (or decrease) in performance at all.

Marginal, 1-2% with RDMA for our workloads, as the biggest benefit of jumbo frames is a reduction of CPU cycles (up to 20%), which is a non-issue with RDMA. However, if RDMA fails or you have workloads that do not leverage RDMA going over the NICs/stack, it does help. That is why I always configure it when we have the stack under control end to end. I have blogged enough on that subject over the years.