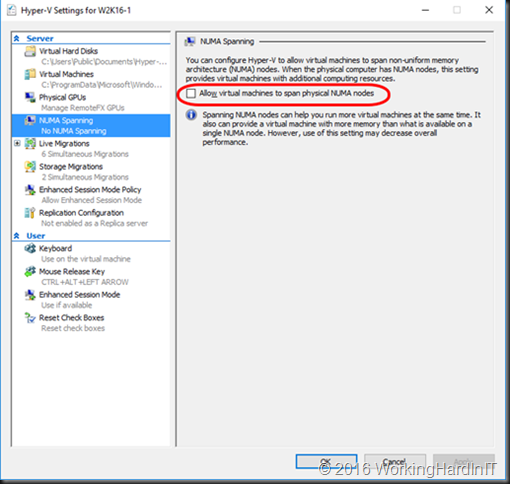

NUMA Spanning, Virtual NUMA, and really anything NUMA related in Hyper-V virtualization is a subject that too many people don’t know enough about. Those who do know it could often still be helped by more in-depth information and examples.

Some run everything on the defaults and never learn more until they read about it or find they need to dive in deeper for some needs or use cases. I want to help clear up some of the confusion and questions people have struggled with regarding Virtual NUMA, NUMA Topology, NUMA Spanning and their relation to static and dynamic memory.

As I don’t have the time to answer all the questions I get on this subject, I have written an article about it. I’ve published it as a community effort on the StarWind Software blog and you can find it here: A closer look at NUMA Spanning and virtual NUMA settings

I think it complements the information on this subject on TechNet well, and it also touches on the Windows Server 2016 aspects of this story. I hope you enjoy it!

Based on your article, you state:

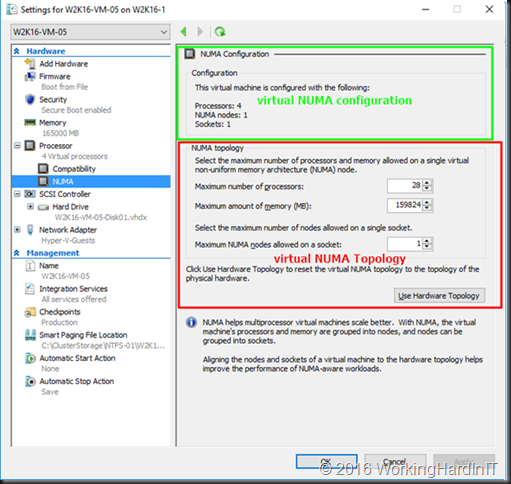

“You can change the memory up and down a bit without issues but when we try to change the memory size to a lesser amount so that it could be served by a single NUMA node it fails.” (near the bottom). Do you happen to know if this is really true for sure? As I understood this new tech, memory can only be reduced by what is not actually in use in the VM. In this example (2 vNUMA nodes, 2 sockets, 2 vCPUs) you would expect to be able to go well below the size of 1 vNUMA node, simply because blocks of memory could be removed from “each” vNUMA node to get there, provided that memory is free to begin with. Free meaning truly free, not even in use for caches and the like. Could it be that you were not able to lower it below 2 vNUMA nodes simply because one or both of the two vNUMA nodes didn’t have enough free memory to be lowered to half of the requested amount? This sounds a bit restricted to me. I understand you can’t grow it beyond 1 vNUMA node, but lowering 2 vNUMA nodes below the size of 1 vNUMA node could simply be solved by lowering BOTH vNUMA nodes, each to half of the requested lower size, couldn’t it? Or is this too simple thinking? 🙂

It was TPv4 testing, not “assuming or thinking”. The test VM was “alone” on that large host. Just as adding memory or CPU to a VM can change the NUMA configuration, so can reducing memory or CPU, and that’s not an “online” event. Those were the results. Now we have TPv5 (and I’m seeing some changes already: moving up has worked while changing the vNUMA config, but moving down still doesn’t work without error) and RTM still has to come out. Play with it: you’ll see funky behavior and promising results, but when a memory change affects the vNUMA config, it doesn’t work in all scenarios.
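
To make the arithmetic in the question above concrete, here is a minimal Python sketch of the commenter's idea only: split the requested reduction evenly over the vNUMA nodes and check that each node has enough free memory to give up its share. It is a toy model, not a description of how Hyper-V decides anything. The function name `can_shrink_evenly`, its parameters and the memory figures are all hypothetical, and it assumes equally sized vNUMA nodes. It also ignores the point made in the reply, which is that a shrink that would change the vNUMA configuration failed in the TPv4 tests.

```python
def can_shrink_evenly(free_mb_per_node, current_mb, target_mb):
    """Toy model of the commenter's reasoning (not Hyper-V's actual logic).

    free_mb_per_node: truly free memory (MB) in each vNUMA node (hypothetical input)
    current_mb:       total memory currently assigned to the VM
    target_mb:        requested lower total memory
    Returns True if every node can give up an equal share of the reduction.
    """
    if not free_mb_per_node:
        raise ValueError("at least one vNUMA node is required")
    if target_mb >= current_mb:
        raise ValueError("target must be lower than the current memory size")

    reduction_mb = current_mb - target_mb
    share_mb = reduction_mb / len(free_mb_per_node)  # equal share per node

    # Each node must have at least its share available as free memory.
    return all(free >= share_mb for free in free_mb_per_node)


# Illustrative numbers only: a 16 GB VM with 2 vNUMA nodes, shrinking to 10 GB.
print(can_shrink_evenly([4096, 4096], 16384, 10240))  # both nodes can give up 3 GB
print(can_shrink_evenly([4096, 1024], 16384, 10240))  # second node is too full
```

With these made-up numbers the first call prints `True` and the second prints `False`, which is the situation the commenter asks about: one node without enough free memory would block an even reduction.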