Recently I was asked to take a look at why UNMAP was not working predictably in a Windows Server 2012 R2 Hyper-V environment. No, this is not a horror story about bugs or bad storage solutions. Fortunately, once the horror option was of the table I had a pretty good idea what might be the cause.

San snapshots are in play

As it turned out everything was indeed working just fine. The unexpected behavior that made it seem that UNMAP wasn’t working well or at least at moments they didn’t expected it was caused by the SAN snapshots. Once you know how this works you’ll find that UNMAP does indeed work predictably.

Snapshots on SANs are used for automatic data tiering, data protection and various other use cases. As long as those snapshots live, and as such the data in them, UNMAP/Trim will not free up space on the SAN with thinly provisioned LUNs. This is logical, as the data is still stored on the SAN for those snapshots, hard deleting it form the VM or host has no impact on the storage the SAN uses until those snapshots are deleted or expire. Only what happens in the active portion is directly impacted.

An example

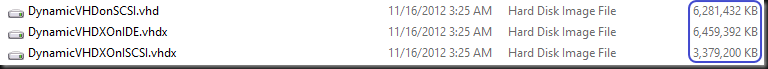

- Take a VM with a dynamically expanding VHDX that’s empty and mapped to drive letter D. Note the file size of the VHDX and the space consumed on the thinly provisioned SAN LUN where it resides.

- Create 30GB of data in that dynamically expanding virtual hard disk of the virtual machine

- Create a SAN snapshot

- Shift + Delete that 30GB of data from the dynamically expanding virtual hard disk in the virtual machine. Watch the dynamically expanding VHDX grow in size, just like the space consumed on the SAN

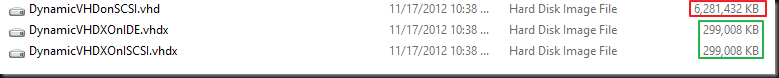

- Run Optimize-Volume D –retrim to force UNMAP and watch the space consumed of the Size of the LUN on the SAN: it remains +/- the same.

- Shut down the VM and look at the size of the dynamic VHDX file. It shrinks to the size before you copied the data into it.

- Boot the VM again and copy 30GB of data to the dynamically expanding VHDX in the VM again.

- See the size of the VHDX grow and notice that the space consumed on the SAN for that LUN goes up as well.

- Shift + Delete that 30GB of data from the dynamically expanding virtual hard disk in the virtual machine

- Run Optimize-Volume D –retrim to force UNMAP and watch the space consumed of the Size of the LUN on the SAN: It drops, as the data you delete is in the active part of your LUN (the second 30GB you copied), but it will not drop any more than this as the data kept safe in the frozen snapshot of the LUN is remains there (the first 30GB you copied)

- When you expire/delete that snapshot on the SAN we’ll see the size on the thinly provisioned SAN LUN drop to the initial size of this exercise.

I hope this example gave you some insights into the behavior

Conclusion

So people who have snapshot based automatic data tiering, data protection etc. active in their Hyper-V environment and don’t see any results at all should check those snapshot schedules & live times. When you take them into consideration you’ll see that UNMAP does work predictably, all be it in a “delayed” fashion ![]() .

.

The same goes for Hyper-V checkpoints (formerly known as snapshots). When you create a checkpoint the VHDX is kept and you are writing to a avhdx (differencing disk) meaning that any UNMAP activity will only reflect on data in the active avhdx file and not in the “frozen” parent file.