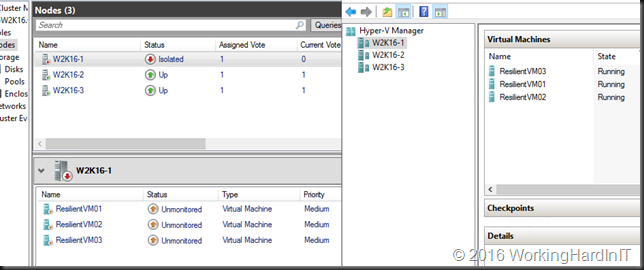

I have blogged about virtual machine compute resiliency in Windows Server 2016 Failover Clustering before, in "Testing Virtual Machine Compute Resiliency in Windows Server 2016".

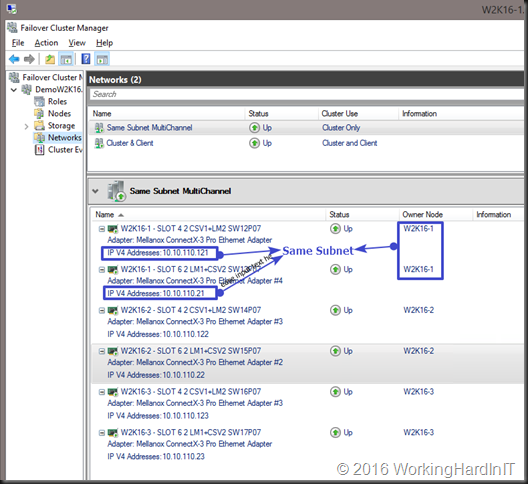

Those tests and demos were done with block-level storage: CSV on Fibre Channel, iSCSI or shared SAS. Today we'll look at the experience when you're running your VMs on a continuously available file share on a Scale-Out File Server (SOFS). This configuration offers the best possible experience.

Why? Well, when the cluster node is in Isolated mode, this has no impact on the SOFS share, as this is a resource external to the Hyper-V cluster. In other words, it remains online. This means that the VMs keep running, even though they have lost their high availability for as long as the node is Isolated. After all, there is nothing wrong with Hyper-V itself. With block-level CSV storage you lose access to the storage, as that is a cluster resource and the node got isolated. That's why the VMs go into a paused-critical state during a transient failure with block-level storage, but don't when you're using SOFS.

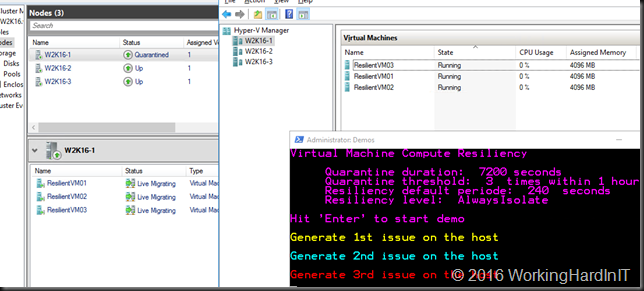

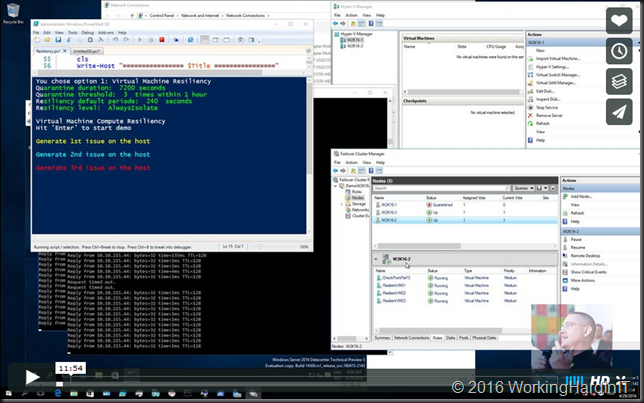

The virtual machine compute resiliency feature in action shows you that the VMs survive a transient failure without issues. Your services need never know something was up. Even a recurring transient failure doesn't have to cause downtime. The node will be quarantined and, if it comes back up, the workload will be live migrated away.

You can watch a video of this in action here on Vimeo:

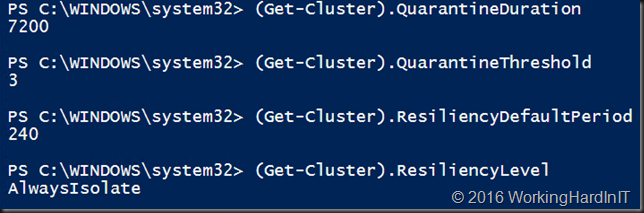

The quarantine threshold and duration, as well as the resiliency period, can be tweaked to fit your environment and get the best possible results.
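To get a feel for how these three knobs interact, here is a rough Python sketch. It is a toy model, not how the cluster service is implemented. The default values (240 seconds resiliency period, 3 failures within an hour, 7200 seconds quarantine) are what I believe the Windows Server 2016 defaults to be, so treat them as assumptions and check your own cluster. All names in the code are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResiliencySettings:
    # Assumed defaults; verify them against your own cluster.
    resiliency_period_s: int = 240     # how long a node may stay Isolated
    quarantine_threshold: int = 3      # failures that trigger quarantine
    quarantine_duration_s: int = 7200  # how long the node stays quarantined
    failure_window_s: int = 3600       # assumption: failures counted per hour


def vms_stay_on_node(outage_s, settings):
    """True if a transient outage ends within the resiliency period."""
    return outage_s <= settings.resiliency_period_s


def quarantined_until(failure_times_s, settings):
    """Return when the latest quarantine ends, or None if never quarantined."""
    times = sorted(failure_times_s)
    end = None
    for i, t in enumerate(times):
        # Count failures up to and including this one that fall in the window.
        recent = [x for x in times[: i + 1] if t - x <= settings.failure_window_s]
        if len(recent) >= settings.quarantine_threshold:
            end = t + settings.quarantine_duration_s
    return end


if __name__ == "__main__":
    s = ResiliencySettings()
    print(vms_stay_on_node(60, s))               # short blip
    print(quarantined_until([0, 600, 1200], s))  # three failures in 20 minutes
```

In this model, a flaky node that drops out three times in quick succession gets quarantined from the third failure onward, while three failures spread over more than an hour each do not. Raising the threshold or shortening the duration makes the cluster more forgiving of a noisy network, at the cost of letting an unreliable node host VMs again sooner.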

SMB 3 for the win! This is yet one more convincing argument to start looking into SOFS and leveraging the capabilities of SMB 3. Remember that you can run a SOFS cluster against your existing shared storage to get started, if you can get the IOPS and latency you require. But also look into Storage Spaces, especially Storage Spaces Direct, which avoids some of the drawbacks SANs have in such a scenario. It's high time for storage vendors to really scale out, implement SMB 3 well and completely, and keep the great value-added features they already have in their offerings. It's this or becoming yet a bit more irrelevant in today's storage scene in the Microsoft ecosystem.