This is going to be a busy night …

Monthly Archives: May 2012

Windows Server 2012 Release Candidate Available For Download!


Excellent news. Windows Server 2012 Release Candidate is available for download at http://technet.microsoft.com/en-us/evalcenter/hh670538.aspx?wt.mc_id=TEC_108_1_3

I’m downloading it as I write this blog post.

I haven’t seen it yet among the downloads for TechNet or MSDN subscribers, but I’m sure it will be available there soon as well.

Start your lab servers, we’re in for some serious upgrading and testing over the next couple of days. I’ve been looking forward to this.

Update 2012/05/31 21:00: The downloads are now available for TechNet and MSDN subscribers as well.

Windows Server 2012 Supports Data Center TCP (DCTCP)

In the grand effort to make Windows Server 2012 scale above and beyond the call of duty, Microsoft has been addressing (potential) bottlenecks all over the stack: CPU, NUMA, memory, storage and networking.

Data Center TCP (DCTCP) is one of the many improvements by which Microsoft aims to deliver a lot better network throughput with affordable switches. Switches that can manage large amounts of network traffic tend to have large buffers, and those push up the prices a lot. The idea is that a large buffer creates the ability to deal with bursts and prevents congestion. Call it over-provisioning if you want. While this helps, it is far from ideal. Let’s say it’s a blunt instrument.

To mitigate this issue, Windows Server 2012 is now capable of dealing with network congestion in a more intelligent way. It does so by reacting to the degree of congestion, not merely its presence, using DCTCP. The goals and requirements are:

- Achieve low latency, high burst tolerance, and high throughput, with small buffer switches (read cheaper).

- Requires Explicit Congestion Notification (ECN, RFC 3168) capable switches. You’d think this should be no showstopper, as it’s probably pretty common on most data center / rack switches, but that doesn’t seem to be the case for the really cheap ones where this would shine …

- The algorithm is enabled only when it makes sense to do so (low round trip times, i.e. it will be used inside the data center, not over a worldwide WAN or the internet).
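To make “reacting to the degree of congestion” a bit more concrete, here is a minimal Python sketch of the window adjustment as it is described in the published DCTCP material: the sender keeps a running estimate of the fraction of ECN-marked packets and shrinks its congestion window in proportion to it, whereas a classic ECN sender halves the window on any mark. The function names and the gain of 1/16 are assumptions for illustration only; this is not what the Windows TCP stack literally runs.

```python
def update_alpha(alpha, marked, total, gain=1 / 16):
    """Update the running estimate of the congestion level.

    alpha  : previous estimate (0.0 = no congestion, 1.0 = every packet marked)
    marked : ECN-marked packets acknowledged in the last window
    total  : all packets acknowledged in the last window
    gain   : smoothing weight (1/16 is an assumed, commonly cited value)
    """
    if total <= 0:
        raise ValueError("total must be positive")
    fraction = marked / total
    return (1 - gain) * alpha + gain * fraction


def dctcp_window(cwnd, alpha):
    """Shrink the window in proportion to the estimated congestion level."""
    return max(1.0, cwnd * (1 - alpha / 2))


def classic_window(cwnd):
    """Classic ECN reaction: halve the window whenever a mark is seen."""
    return max(1.0, cwnd / 2)


if __name__ == "__main__":
    cwnd = 100.0
    for alpha in (0.0, 0.1, 0.5, 1.0):
        print(alpha, dctcp_window(cwnd, alpha), classic_window(cwnd))
```

With light congestion the DCTCP-style sender barely slows down, and only when every packet is marked does it back off as hard as the classic reaction. That is what lets it keep switch queues short without giving up throughput.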

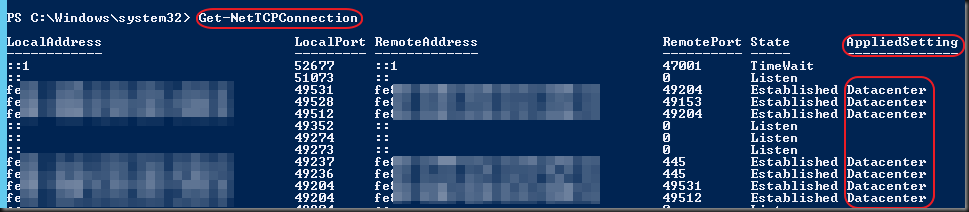

To see if it is applied run Get-NetTcpConnection:

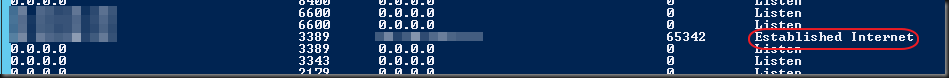

As you can see, this is applied here on a DELL PC8024F switch for the Cluster Shared Volumes (CSV) and Live Migration (LM) networks. The internet-connected NIC (the connection of the RDP session) shows:

Yup, it’s East-West traffic only, not North-South where it makes no sense.

When I was prepping a slide deck for a presentation on what this is, does and means, I compared it to green wave traffic light control. The space between consecutive traffic lights is the buffer, and the red lights are the stops the traffic has to deal with due to congestion. This leaves room for a lot of improvement, and the way to achieve it is traffic control that intelligently manages the incoming flow so that at every hop there is a green light and the buffer isn’t saturated.

Windows Server 2012 in combination with Explicit Congestion Notification (ECN) provides the intelligent traffic control to realize the green wave.

The result is very smooth, low latency traffic with high burst tolerance and high throughput on cheaper small buffer switches. To see the difference, look at the picture below (from Microsoft BUILD) of what this achieves. Pretty impressive. Here’s a paper by Microsoft Research on the subject.

TRIM/UNMAP Support in Windows Server 2012 & Hyper-V/VHDX

Introduction

I’m very excited about the TRIM/UNMAP support in Windows Server 2012 & Hyper-V with the VHDX file. Thin provisioning is a great technology, but there is more to it than just proactive provisioning ahead of time. It also provides a way to make sure storage allocation stays thin by reclaiming freed up space from a LUN. Until now this required either the use of sdelete on Windows or dd for the Linux crowd, or some disk defrag product like Raxco’s PerfectDisk. It’s interesting to note here that sdelete relies on the defrag APIs in Windows, so you can see how a defragmentation tool can pull off the same stunt. Take a look at Zero-fill Free Space and Thin-Provisioned Disks & Thin-Provisioned Environments for more information on this. Sometimes an agent is provided by the SAN vendor that takes care of this for you (Compellent), and I think NetApp even has plans to support it via a future ONTAP PowerShell toolkit for NTFS partitions inside the VHD (https://communities.netapp.com/community/netapp-blogs/msenviro/blog/2011/09/22/getting-ready-for-windows-server-8-part-i). Some cluster file system vendors like Veritas (Symantec) also offer this functionality.

A common “issue” people have with sdelete or the like is that it is rather slow, rather resource intensive, and not automated unless you have scheduled tasks running on all your hosts to take care of that. Sdelete has another issue: it can’t handle mount points. A trick is to use the now somewhat ancient SUBST command to assign a drive letter to the path of the mount point so that you can use sdelete. Another trick would be to script it yourself. Mind you, you can’t just create a big file in a script and delete it. That’s the same as deleting “normal” data and won’t do a thing for thin provisioning space reclamation. You really have to zero the space out. See (A PowerShell Alternative to SDelete) for more information on this. The script also deals with another annoying thing about sdelete, which is that it doesn’t leave any free space and thereby potentially endangers your operations, or at least sets off all the alarms on the monitoring tools. With a home grown script you can force a percentage of free space to remain untouched.
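The idea of such a home grown script can be sketched as follows. This is a minimal Python illustration, not the PowerShell script referred to above: it fills free space with a file of zeros while keeping a configurable fraction of the volume untouched, and then deletes the file. The function names, the temporary file name `zerofill.tmp` and the `max_bytes` safety cap are all assumptions made for this example. Whether the zeros actually lead to reclaimed space depends on the storage array detecting zeroed blocks, and on the file system not short-circuiting the writes (sparse or compressed files, for instance).

```python
import os
import shutil


def bytes_to_zero(total, free, reserve_fraction):
    """Return how many bytes may be zeroed while keeping a reserve free."""
    if not 0 <= reserve_fraction < 1:
        raise ValueError("reserve_fraction must be in [0, 1)")
    reserve = int(total * reserve_fraction)
    return max(0, free - reserve)


def zero_fill(directory, reserve_fraction=0.05, chunk_size=1024 * 1024,
              max_bytes=None):
    """Write zeros into a temporary file in `directory`, then delete it.

    reserve_fraction : share of the volume that must stay free
    max_bytes        : optional hard cap (hypothetical safety knob)
    Returns the number of zero bytes written.
    """
    usage = shutil.disk_usage(directory)
    budget = bytes_to_zero(usage.total, usage.free, reserve_fraction)
    if max_bytes is not None:
        budget = min(budget, max_bytes)

    path = os.path.join(directory, "zerofill.tmp")  # assumed file name
    chunk = bytes(chunk_size)  # a buffer of zero bytes
    written = 0
    try:
        with open(path, "wb") as handle:
            while written < budget:
                step = min(chunk_size, budget - written)
                handle.write(chunk[:step])
                written += step
            handle.flush()
            os.fsync(handle.fileno())  # push the zeros down to the disk
    finally:
        # Always give the space back, even if a write failed halfway.
        if os.path.exists(path):
            os.remove(path)
    return written
```

Calling `zero_fill("D:\\", reserve_fraction=0.10)` would, under these assumptions, zero out free space on that volume while leaving 10% of it alone, so the monitoring tools stay quiet.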

TRIM/UNMAP

With Windows Server 2012 and Hyper-V VHDX we get what is described in the documentation as “Efficiency in representing data (also known as “trim”), which results in smaller file size and allows the underlying physical storage device to reclaim unused space. (Trim requires physical disks directly attached to a virtual machine or SCSI disks in the VM, and trim-compatible hardware.)” It also requires Windows Server 2012 on hosts & guests.

I was confused as to whether VHDX supports TRIM or UNMAP. TRIM is the specification for this functionality by Technical Committee T13, which handles all standards for ATA interfaces. UNMAP is the Technical Committee T10 specification for this and is the full equivalent of TRIM, but for SCSI disks. UNMAP is used to remove physical blocks from the storage allocation in thinly provisioned Storage Area Networks. My understanding is that what is used on the physical storage depends on what storage it is (SSD/SAS/SATA/NL-SAS or a SAN with one or all of the above), and that for a VHDX it’s UNMAP (the SCSI standard).

Basically VHDX disks report themselves as being “thin provision capable”. That means that any deletes as well as defrag operations in the guests will send “unmaps” down to the VHDX file. These are used to ensure that block allocations within the VHDX file are freed up for subsequent allocations, and the same requests are forwarded to the physical hardware, which can reuse the space for its thin provisioning purposes. Also see http://msdn.microsoft.com/en-us/library/hh848053(v=vs.85).aspx

So unmap makes its way down the stack, from the guest Windows Server 2012 operating system through the VHDX and the hypervisor to the storage array. This means that a VHDX will only consume storage for data that is really stored & not for the entire size of the VHDX, even when it is a fixed one. You can see that not just the operating system but also the application/hypervisor that owns the file system on which the VHDX lives needs to be TRIM/UNMAP aware to pull this off.
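As a way to picture this pass-through, here is a toy Python model. It is purely illustrative and says nothing about the actual VHDX format or the Hyper-V storage stack: each layer only tracks which blocks are allocated, and an unmap frees them locally before forwarding the same request to the layer underneath. The class name `ThinStore` and the one-to-one block mapping between layers are assumptions made to keep the example short.

```python
class ThinStore:
    """Toy thin-provisioned layer: only blocks holding data are allocated."""

    def __init__(self, name, backing=None):
        self.name = name
        self.backing = backing      # the layer underneath, if any
        self.allocated = set()      # block numbers currently in use

    def write(self, block):
        """Allocate a block here and in every layer below."""
        self.allocated.add(block)
        if self.backing is not None:
            self.backing.write(block)

    def unmap(self, blocks):
        """Free blocks here, then forward the same request down the stack."""
        blocks = list(blocks)
        for block in blocks:
            self.allocated.discard(block)
        if self.backing is not None:
            self.backing.unmap(blocks)


if __name__ == "__main__":
    lun = ThinStore("thin provisioned LUN")
    vhdx = ThinStore("VHDX file", backing=lun)

    for block in range(10):        # the guest writes ten blocks
        vhdx.write(block)
    vhdx.unmap(range(3, 7))        # the guest deletes a file covering four blocks

    print(len(vhdx.allocated), len(lun.allocated))
```

In this model both layers end up with six allocated blocks after the delete. Without the forwarding step in `unmap`, the LUN would still hold all ten, which is exactly the situation sdelete-style zeroing was used to work around.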

The good news here is that there is no more sdelete to run, no scripts to write, and no agents to install. It happens “automagically”, and as ease of use is very important, I for one welcome this! By the way, some SANs also provide the means to shrink LUNs. This can be useful if the space used by a volume is so much lower than what is visible/available in Windows that you don’t want people to think you’re wasting space, or that all that extra space is freely available to them.

To conclude I’ll be looking forward to playing around with this and I hope to blog on our experiences with this later in the year. Until Windows Server 2012 & VHDX specifications are RTM and fully public we are working on some assumptions. If you want to read up on the VHDX format you can download the specs here. It looks pretty feature complete.